Difference between revisions of "Certified HTTP"

Which Linden (talk | contribs) (→Retry: More commentary on 408) |


Revision as of 10:19, 2 December 2007

Goals

The basic goal of Certified HTTP (colloquially known as chttp) is to perform exactly-once messaging between two hosts.

From a standard http client perspective, if the client reads a whole response, then it knows for certain the server handled the request. However, for all other failure modes, the client can't be sure whether the server did or did not perform the request function. On the server side, the server can never know whether the client got the answer. For some operations, we need the ability for the client to perform a data-altering operation and be insistent that it occur. In particular, if it isn't certain that it happened, then it must be able to try again safely.

The bigger picture goal is to make a simple way to conduct reliable delivery and receipt such that general adoption by the wider community is possible. This means that we have to respect HTTP where we can to take advantage of existing tools and methodology and to never contradict common conventions in a REST world.

Join the mailing list.

Workflow

The workflow is a specification/pseudocode for what actions both ends of chttp communication need to take to fulfill the requirements.

Interaction Sequence

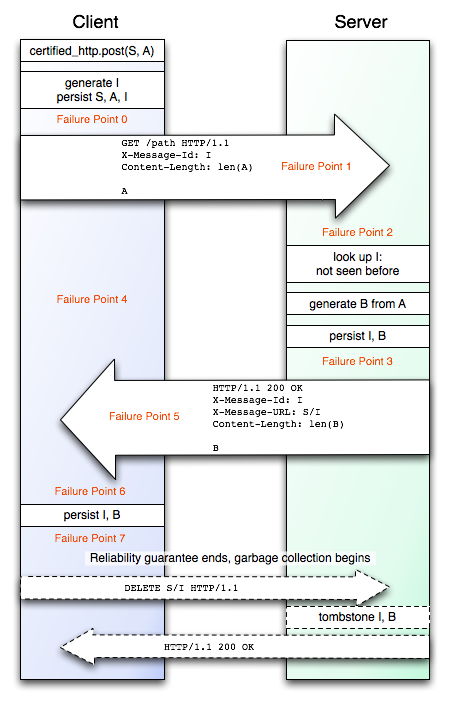

Here's a diagram that describes an everything-works certified http communication.

Of note are the failure points. These are the effective crash locations of the participants (the failure points on the arrows represent communication failures). Actions that intervene between two failure points should be deterministic and/or atomic enough that a crash occurring midway through them is functionally identical to a crash occurring at the immediately prior failure point.

The Certified HTTP Failure Diagrams page (warning: very image-heavy) has a relatively-exhaustive exploration of the way a Certified HTTP implementation should behave in the face of failures.

Sending a Message

The sending-side API looks very much like a normal HTTP method call:

response = certified_http.put(url, body)

What happens under the covers is:

- Generates a globally unique message ID for the message.

- Stores the outgoing request (headers and all), including the message ID, in a durable "outbox" store, then waits for a response.

- A potentially asynchronous process performs the following steps:

- Retrieves the request from the outbox.

- Performs the HTTP request specified by the outbox request and waits for a response.

- If a response is not forthcoming, for whatever reason, the process retries after a certain period.

- If the server sends an error code that indicates that the reliable message will never complete (e.g. 501), or a long timeout expires indicating that an absurd amount of time has elapsed, the method throws an exception.

- Opens a transaction on the durable store

- Stores the incoming response in a durable inbox.

- 'Tombstones' the message in the outbox, which essentially marks the message as having been received, so that if the application resumes again, it doesn't resend.

- Closes the transaction on the durable store

- If the response contains a header indicating a confirmation url on the recipient, performs an HTTP DELETE on the resource to ack the incoming message.
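The steps above can be sketched roughly as follows. This is a minimal illustration, not a reference implementation: the names `open_sender_store`, `certified_put`, and the injected `transport` callable are hypothetical, the code lists are abbreviated, an in-memory SQLite database stands in for the durable store, and a real client would sleep between retries and give up based on LT rather than an attempt count.

```python
import sqlite3
import uuid

# Abbreviated status lists, for the sketch only.
SUCCESS_CODES = {200, 201, 203, 204, 205, 206, 304}
FAIL_CODES = {400, 401, 403, 410, 501}


def open_sender_store():
    # ":memory:" keeps the sketch self-contained; a real outbox must be durable.
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE outbox (msg_id TEXT PRIMARY KEY, url TEXT, "
                 "body TEXT, done INTEGER DEFAULT 0)")
    conn.execute("CREATE TABLE inbox (msg_id TEXT PRIMARY KEY, status INTEGER, "
                 "body TEXT)")
    conn.commit()
    return conn


def certified_put(conn, transport, url, body, max_attempts=5):
    """transport(method, url, headers, body) -> (status, headers, body).

    The transport is an assumed interface; it raises OSError when no
    response arrives.
    """
    msg_id = "client-" + str(uuid.uuid4())
    with conn:  # persist the request before the first attempt
        conn.execute("INSERT INTO outbox (msg_id, url, body) VALUES (?, ?, ?)",
                     (msg_id, url, body))
    for _ in range(max_attempts):
        try:
            status, headers, resp_body = transport(
                "PUT", url, {"X-Message-ID": msg_id}, body)
        except OSError:
            continue  # no response: retry with the same message id
        if status in FAIL_CODES:
            with conn:  # the message will never complete; drop it
                conn.execute("DELETE FROM outbox WHERE msg_id = ?", (msg_id,))
            raise RuntimeError("message rejected with status %d" % status)
        if status not in SUCCESS_CODES:
            continue  # treated as temporary: retry
        with conn:  # one transaction: store the response and tombstone the request
            conn.execute("INSERT INTO inbox VALUES (?, ?, ?)",
                         (msg_id, status, resp_body))
            conn.execute("UPDATE outbox SET done = 1 WHERE msg_id = ?", (msg_id,))
        ack_url = headers.get("X-Message-URL")
        if ack_url:
            try:
                transport("DELETE", ack_url, {}, "")
            except OSError:
                pass  # assumption: the ack is best-effort in this sketch
        return resp_body
    raise TimeoutError("no usable response after %d attempts" % max_attempts)
```

Injecting the transport keeps the retry and persistence logic testable without a network; every retry reuses the same message id, which is what lets the server deduplicate.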

There are no explicit semantics for the response body, like HTTP itself. The content will vary depending on the application.

Receiving a Message

The receiver sets up a node in the url hierarchy, just like a regular http node. When an incoming request comes in, the receiver:

- Stores the incoming request in a durable store "inbox", if it doesn't already contain a message with the same ID.

- A potentially asynchronous process performs the following steps:

- Looks for responses in the outbox matching the incoming message id, and if it finds one, sends it as the response without invoking anything else.

- Opens a transaction on the database, locking the inbox request

- Calls the handler method on the receiving node:

def handle_put(body, txn):
    return "My Response"

- The handler method can use the open transaction to perform actions in the database that are atomic with the receipt of the message. Any non-idempotent operation must be done atomically in this way.

- Stores the return value of the handle method as an outgoing response in the outbox, without closing the transaction.

- Removes the incoming request from the inbox

- Closes the transaction

- Discovers a new item in the outbox, responds to the incoming http request with the response from the outbox, including a url that, if DELETEd, will remove the item from the outbox.
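A rough sketch of the receiving side, under the same caveats as any pseudocode: `open_receiver_store`, `receive`, and the `/messages/<id>` confirmation path are hypothetical names, the work is done synchronously rather than by a separate process, and an in-memory SQLite database stands in for the durable store. The point it illustrates is that the handler's side effects, the stored response, and the removal of the inbox entry all commit in one transaction.

```python
import sqlite3


def open_receiver_store():
    # ":memory:" keeps the sketch self-contained; a real store must be durable.
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE inbox (msg_id TEXT PRIMARY KEY, body TEXT)")
    conn.execute("CREATE TABLE outbox (msg_id TEXT PRIMARY KEY, response TEXT)")
    conn.commit()
    return conn


def receive(conn, msg_id, body, handler):
    """Return (response, confirmation_url) for an incoming message."""
    confirm_url = "/messages/" + msg_id  # hypothetical URL layout

    # A stored response means the message was already handled: replay it
    # without invoking the handler again.
    row = conn.execute("SELECT response FROM outbox WHERE msg_id = ?",
                       (msg_id,)).fetchone()
    if row is not None:
        return row[0], confirm_url

    # Persist the request unless the inbox already holds this id.
    with conn:
        conn.execute("INSERT OR IGNORE INTO inbox (msg_id, body) VALUES (?, ?)",
                     (msg_id, body))

    # One transaction covers the handler's own writes, the stored response,
    # and the removal of the inbox entry. If the handler raises, everything
    # is rolled back and the request stays in the inbox.
    with conn:
        response = handler(body, conn)
        conn.execute("INSERT INTO outbox (msg_id, response) VALUES (?, ?)",
                     (msg_id, response))
        conn.execute("DELETE FROM inbox WHERE msg_id = ?", (msg_id,))
    return response, confirm_url
```

Delivering the same message id twice calls the handler once and returns the same response both times, which is the behavior a retrying client relies on.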

Requirements

We have the concept of a "long" time (LT). The purpose of this time is to ensure that any clients will stop trying a particular request long before the server discards state associated with the request. This is not a very theoretically "pure" concept, since it relies on all parties conforming to certain (reasonable) assumptions, but we believe that we get significant benefits (lack of explicit negotiation), at little risk of actually entering an incorrect state. chttp is expected to generate a small amount of bookkeeping data for each message, and there needs to be a way to expire this data in a way that doesn't involve additional negotiation between servers and clients. The server should keep data for LT, and clients should not retry messages that are older than LT/2 or similar, so that there is at least LT/2 for a Reliable Host to recover from errors, and LT/2 for clock skew. LT therefore should be orders of magnitude longer than the longest downtime we expect to see in the system and the largest clock skew we expect to see. Off the cuff, 30 days seems like a reasonable value for LT.
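As a worked example of the timing rule, here is a small sketch using the off-the-cuff value of 30 days. The function names are made up for illustration, and the exact boundary handling (strict versus non-strict comparison) is an assumption.

```python
from datetime import datetime, timedelta, timezone

LT = timedelta(days=30)  # the "long" time suggested above


def client_may_retry(sent_at, now):
    # Clients stop retrying once a message is LT/2 old.
    return now - sent_at < LT / 2


def server_may_discard(received_at, now):
    # Servers keep per-message bookkeeping for the full LT.
    return now - received_at >= LT


sent = datetime(2007, 12, 2, 10, 19, tzinfo=timezone.utc)
print(client_may_retry(sent, sent + timedelta(days=14)))    # still retrying
print(client_may_retry(sent, sent + timedelta(days=15)))    # client gives up
print(server_may_discard(sent, sent + timedelta(days=15)))  # server still has state
```

With these values the client stops at day 15 while the server holds its state until day 30, leaving the LT/2 margin for recovery and clock skew.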

- chttp is based on http, and can use any facility provided by http 1.1 where not otherwise contradicted.

- this includes the use of https, pipelining, chunked encoding, proxies, redirects, caches, and headers such as Accept, Accept-Encoding, Expect, and Content-Type.

- any normal http verb appropriate to context should be accepted, eg POST, PUT, GET, DELETE

- unless otherwise specified, the http feature set in use is orthogonal and effectively transparent to chttp

- in any complete chttp exchange the client and server can eventually agree on success or failure of delivery, though it is more important that no ill effects arise when they disagree

- any message will be effectively received once and only once or not at all

- the content of the http body must be opaque to chttp

- the URI of the original request must be opaque to chttp

- chttp enabled clients and servers can integrate with unreliable tools

- the chttp server can differentiate reliable requests and respond without reliability guarantees (i.e. act as a normal http server)

- chttp clients can differentiate reliable responses and handle unreliable servers (i.e. act as a normal http client)

- the client will persist the local time of sending

- if the request has a body, the client must either persist the outgoing body or be able to regenerate the exact same body on the fly

- the server will persist the local time of message receipt

- the server must persist the response body or have a mechanism to idempotently generate the same response to the same request

- all urls with a chttp server behind them are effectively idempotent for all uniquely identified messages

- the client can retry steadily over a period of LT/2

- the client and server are assumed to almost always be running

- over that window of opportunity, the same message will always get the same response

- all persisted data on a single host is ACID

requirements on top of http

- if the body of the request is non-zero length, the client MUST include a Content-Length header, unless prohibited by section 4.4 of RFC 2616

- the server will look for \r\n\r\n and content length body to consider the request complete

- an incomplete request will result in 4XX status code

- if the body of the response is non-zero length, the server must include a Content-Length header

- the client will look for \r\n\r\n and content length body to consider the response complete

- the client will retry on an incomplete response

- Messages cannot be terminated by closing the connection -- only positive expressions of message termination (such as Content-Length) can guarantee that a full message is received.
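The completeness rule can be sketched as a check over the raw bytes read so far. This is illustrative only: `message_is_complete` is a hypothetical helper, it treats a missing Content-Length as a zero-length body, and it does not handle the chunked-encoding case that section 4.4 of RFC 2616 carves out.

```python
def message_is_complete(raw: bytes) -> bool:
    """True once the header terminator and the full declared body are present."""
    head, separator, body = raw.partition(b"\r\n\r\n")
    if not separator:
        return False  # headers not finished yet

    declared = 0  # assumption: no Content-Length means no body
    for line in head.split(b"\r\n")[1:]:  # skip the request/status line
        name, _, value = line.partition(b":")
        if name.strip().lower() == b"content-length":
            try:
                declared = int(value.strip())
            except ValueError:
                return False  # unparseable length: treat as incomplete
    return len(body) >= declared
```

A client would retry when this returns False at connection close; a server would answer with a 4XX.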

assumptions

- The client and server will not have any significant time discontinuity, i.e., the clock difference between them should be less than LT/100.

- The client and server will not have clock drift more than LT/100 per day.

- Both parties exchanging messages are Reliable Hosts (defined below).

- Generating a globally unique message ID is inexpensive.

- It is possible to store a large amount of 'small' data (such as UUIDs, urls, and date/timestamps) for LT. "Large" in this case means "as many items as the throughput of a host implies that you could create during LT". In other words, storing this small data will never be a resource problem.

- Any transaction or data handled by this system will become useless well before LT has elapsed.

- Reliable Host

- A reliable host has the following properties:

- The host has a durable data store of finite size, which can store data in such a way that it is guaranteed to be recoverable even in the face of a certain number of hardware failures.

- The host will not be down forever. Either a clone will be brought up on different hardware, or the machine itself will reappear within a day or so.

- The host can perform ACID operations on the data it contains.

Implementation

Requiring an opaque body and URI pretty much requires either negotiating a URL beforehand or adding new headers. Since the former is rejected (see "reliable delivery after setup" under Related Technologies), we will focus on adding request and response headers.

This is a suggested implementation:

- chttp enabled servers will behave idempotently with a unique message id on the request

- the server can request acknowledgment from the client if the request consumes server resources

Request

A client will start a reliable message by making an HTTP request to a url on a reliable http server. The url may be known in advance or returned as part of an earlier application protocol exchange. The request must contain two headers on top of the headers required by HTTP/1.1: X-Message-ID and Date.

X-Message-ID

A globally unique id. It must match the regular expression ^[A-Za-z0-9-_:]{30,100}$. This header is required for all Certified HTTP interactions.

The best practice for generating this id is to combine a client host identifier with a cryptographically secure uuid and a sequence number.
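One way to follow that practice, sketched under a few assumptions: `new_message_id` is a hypothetical helper, the host identifier is sanitized because characters such as `.` fall outside the allowed set, `uuid.uuid4()` is used as the random component, and the in-memory counter would need to be persisted in a real client. The pattern is written with the hyphen last in the character class, which we take to be the intent of the expression above and which avoids any ambiguity about a `9-_` range.

```python
import itertools
import re
import uuid

# Same character set and length bounds as the rule above; fullmatch() plays
# the role of the ^...$ anchors.
MESSAGE_ID_RE = re.compile(r"[A-Za-z0-9_:-]{30,100}")

_sequence = itertools.count(1)  # assumption: a real client persists this


def new_message_id(host_id: str) -> str:
    # Replace characters the pattern does not allow and cap the length.
    safe_host = re.sub(r"[^A-Za-z0-9_-]", "-", host_id)[:40]
    message_id = "%s:%s:%d" % (safe_host, uuid.uuid4(), next(_sequence))
    if MESSAGE_ID_RE.fullmatch(message_id) is None:
        raise ValueError("generated id violates the X-Message-ID rule")
    return message_id
```

For example, `new_message_id("client01.example.com")` yields an id of the form `client01-example-com:<uuid>:<n>`.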

Sending a message with the same message id but a different body has an undefined result.

If a message is sent with the same message id but with a different header that implies a different response body (e.g. the first request specifies "Accept: text/plain" and the second request specifies "Accept: text/html"), the response can be one of:

- The original response.

- A 4xx indicating that the server has detected the incompatibility

It is preferable to return the original response rather than the 4xx, but some implementations may not be able to achieve that.

Date

The Date header, as specified in RFC2616. Certified HTTP requires a date header on all requests, which differs somewhat from RFC2616.
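A small sketch of building the two required headers with the standard library. `chttp_request_headers` is a hypothetical helper; `email.utils.formatdate` with `usegmt=True` produces the RFC 1123 date format that HTTP uses.

```python
from email.utils import formatdate


def chttp_request_headers(message_id, sent_at=None):
    """Headers Certified HTTP adds to a request.

    sent_at is a POSIX timestamp; None means "now". The client would also
    persist this time alongside the outgoing request.
    """
    return {
        "X-Message-ID": message_id,
        "Date": formatdate(sent_at, usegmt=True),
    }
```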

Response

Generally, when the client gets the 2XX back from the server, the message has been delivered. If the server has a response body, then the client will need to acknowledge receipt of the entire body. If the entire body is not read, the client can safely resubmit the exact same request. The server will include a message url in the headers if the client specified a message id on request and the body requires persistence by the server.

X-Message-URL

This will be a unique url on the server for the client message id which the client will DELETE upon receipt of the entire response to the request. This will only exist if there is a non-null response body or the server is consuming significant resources to maintain that url. When the client performs the delete, the server can flush all persisted resources other than the fact that the message was sent at some point.
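On the server side, the effect of the DELETE might look like the sketch below. Everything here is an assumption made for illustration: the `ResponseStore` class, the `/messages/<id>` URL layout, the 204/404 return values, and the use of a plain dict where a real server would use its durable store. The point is that the body is flushed while the record that the message was seen survives.

```python
class ResponseStore:
    """Keeps response bodies until the client acknowledges them."""

    def __init__(self):
        # message id -> stored body, or None once acknowledged
        self._responses = {}

    def save(self, message_id, body):
        self._responses[message_id] = body
        return "/messages/" + message_id  # value for the X-Message-URL header

    def delete(self, message_id):
        """Handle DELETE on the message url; returns an HTTP status code."""
        if message_id not in self._responses:
            return 404
        self._responses[message_id] = None  # flush the body, keep the record
        return 204

    def was_sent(self, message_id):
        return message_id in self._responses

    def body(self, message_id):
        return self._responses.get(message_id)
```

Repeating the DELETE is harmless in this sketch, so a client that is unsure whether its acknowledgment arrived can simply send it again.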

Status Codes

The semantics of Certified HTTP are to retry until the message goes through. HTTP status codes provide one way of determining whether the message made it or not; we've grouped these status codes into 'success' or 'retry'. In other cases they can signify that the client is attempting to send an invalid message, an error in the application logic; these are labeled as 'fail'. There is a fourth class, where the status code may be ambiguous; e.g. 404, which is often emitted by temporarily-misconfigured webservers, but may also indicate that an incorrect url was chosen.

Success

These status codes, when received, give the client permission to do whatever cleanup it needs to do, and to return to caller, safe in the assumption that the message was delivered.

- 200 OK

- 201 Created

- 203 Non-Authoritative Information

- 204 No Content

- 205 Reset Content

- 206 Partial Content

- 304 Not Modified

Retry

These status codes indicate a temporary failure or misconfiguration on the server side, and therefore the client should retry until it gets something different. All the redirected/proxied messages carry the same message-id.

- 202 Accepted (potentially delaying longer than normal)

- 300 Multiple Choices (follow redirect)

- 302 Found (follow redirect)

- 305 Use Proxy (use proxy)

- 307 Temporary Redirect (follow redirect only if GET)

- 408 Request Timeout (retry with a new message-id and date)

- 413 Request Entity Too Large (if Retry-After header present)

- 502 Bad Gateway

- 503 Service Unavailable

- 504 Gateway Timeout

Fail

If your application logic screws up and picks an improper content-type or messes up the url, then the Certified HTTP subsystem shouldn't be burdened with retrying forever for a message that will never be delivered. If it receives these status codes, it will raise an exception to the caller and nuke the outgoing request.

- 400 Bad Request

- 401 Unauthorized

- 402 Payment Required

- 403 Forbidden

- 410 Gone

- 411 Length Required

- 413 Request Entity Too Large (if no Retry-After header present)

- 414 Request-URI Too Long

- 415 Unsupported Media Type

- 416 Requested Range Not Satisfiable

- 417 Expectation Failed

- 501 Not Implemented

- 505 HTTP Version Not Supported

Ambiguous

It's a little ambiguous what to do when the client gets one of these status codes, so we think that the application should decide. There are a few ways to do that:

- The client raises an exception with a retry() method that can be caught by the application, and retried if the application determines that the exception represented a temporary failure.

- The application passes a list to the Certified HTTP subsystem that sorts these status codes into 'retry' or 'fail'.

- The subsystem uses a heuristic such as retrying for a short period of time then converting to a Fail if still not successful.

Here's the list of status codes:

- 303 See Other (retry will use GET even if original used POST)

- 307 Temporary Redirect (only if POST)

- 404 Not Found

- 406 Not Acceptable

- 407 Proxy Authentication Required

- 409 Conflict

- 412 Precondition Failed

- 500 Internal Server Error
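The four groups can be written down as a lookup, which is roughly what a client subsystem would consult after each response. The sets below are copied from the lists in this section; the `classify` function itself is a hypothetical sketch, and two of its choices are assumptions: a 307 for any method other than GET is treated as ambiguous, and so is any status code this page does not list.

```python
SUCCESS = {200, 201, 203, 204, 205, 206, 304}
RETRY = {202, 300, 302, 305, 408, 502, 503, 504}
FAIL = {400, 401, 402, 403, 410, 411, 414, 415, 416, 417, 501, 505}
AMBIGUOUS = {303, 404, 406, 407, 409, 412, 500}


def classify(status, method="GET", headers=None):
    """Return 'success', 'retry', 'fail', or 'ambiguous' for a response."""
    headers = headers or {}
    if status == 307:
        # Listed under Retry for GET and under Ambiguous for POST.
        return "retry" if method.upper() == "GET" else "ambiguous"
    if status == 413:
        # Retry only when the server says when to come back.
        return "retry" if "Retry-After" in headers else "fail"
    for name, codes in (("success", SUCCESS), ("retry", RETRY),
                        ("fail", FAIL), ("ambiguous", AMBIGUOUS)):
        if status in codes:
            return name
    return "ambiguous"  # assumption: unlisted codes are left to the application
```

An application that wants to sort the ambiguous codes itself could post-process the `'ambiguous'` result with its own retry/fail table.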

Related Technologies

I believe that all competitors to this fall into one of three categories: specialized message queues, reliable logic tunneled through HTTP, and delivery over HTTP with setup charges.

traditional message queue technology

This includes products like MQ, MSMQ, and ActiveMQ.

These technologies are useful, but provide a number of hurdles:

- No common standards.

- Integrates a new technology with unknown performance characteristics.

- Requires significant operational overhead.

Because of this, we have opted to use HTTP as a foundation of the technology.

reliable application logic in body

This includes technologies like httpr and ws-reliable.

These tend to be thoroughly engineered protocol specifications which regrettably repeat the mistakes of the nearly defunct XMLRPC and the soon to join it SOAP -- namely, treating services as a function call. This is a reasonable approach, and is probably the most obvious to the engineers working on the problem. The most obvious path, which is followed in both of the examples, is to package a traditional message queue body into an HTTP body sent via POST. Treating web services as function calls severely limits the expressive nature of HTTP and should be avoided.

Consumers of web services prefer REST APIs rather than function calls over HTTP. I believe this is because REST is inherently more comprehensible to consumers since all of the data is data the consumer requested. In one telling data point, Amazon has both SOAP and REST interfaces to their web services, and 85% of their usage is of the REST interface[1]. I believe ceding power to the consumer is the only path to make wide adoption possible. In doing so, we must drop the entire concept of burying data the consumer wants inside application data.

reliable delivery after setup

There appear to be a few proposals of this nature around the web and a shining example can be found in httplr.

These are http reliability mechanisms which require a round trip to begin the reliable transfer and then follow through with some permutation of acknowledging the transfer. This setup can be cheap since the setup does not have the same reliability constraints as long as all clients correctly handle 404. Also, some of this overhead can be optimized away by batching the setup requests.

However, a protocol which requires setup for all messaging will always be more expensive than other options.