Latest revision as of 10:19, 30 December 2015

How to make creating LSL scripts easier

This article is about tools you can use to make creating LSL scripts easier. It tries to explain how to combine and how to use them for this very purpose.

One of these tools is a preprocessor called cpp. It comes with GCC. Among the tools mentioned in this article, cpp is the tool which can make the biggest difference to how you write your sources and to how they look.

The idea of using cpp comes from the source of the Open Stargate Network stargates.

I want to thank the developers of these stargates. Not only are their stargates awesome; the idea of using cpp for LSL scripts is awesome, too. It has made it so much easier to create LSL scripts that I have made many I otherwise would never have made.

This article is accompanied by a repository. It is recommended that you pull (download) the repository. The repository contains some example sources used with this article, a few perl scripts and a Makefile. They are organised in a directory structure which they are designed to work with. You will have your own build system for LSL scripts when you have pulled this repository.

The repository does not contain basic standard tools like perl, cpp, make or an editor. This article does not attempt to tell you how to install these. You know better how to install software on your computer than I do, and how you install software also depends on which operating system you are using.

I´m using Linux, currently Gentoo.

Documentation is plentiful. You may find different software that does the same or similar things and may work better for you or not.

Editing this Article

This article is written in my favourite editor. When I update the article on the wiki, I edit the whole article, delete everything and paste the new version in. That´s simply the easiest and most efficient way for me.

Unfortunately, this means that your modifications may be lost when I update the article and don´t see them.

Please use the "discussion" page of this article to post contributions or to suggest changes so they can be added to the article.

Repository

It´s probably more fun to be able to try out things yourself while reading this article. If you want to do so, pull the repository. You can run 'git clone git://dawn.adminart.net/lsl-repo.git' to get it.

Once cloned, you have a directory called "lsl-repo". Inside this directory, you have another one called "projects".

You can put the "projects" directory anywhere you like, and you can give it a different name if you want to.

Inside the "projects" directory, you have more directories like this:

.

|-- bin

|-- clean-lsl.sed -> make/clean-lsl.sed

|-- example-1

|-- include

|-- lib

|-- make

|-- memtest

|-- rfedip-reference-implementation

|-- template-directories

`-- your-script

When you rename or move "bin", "include", "lib", "make" or "clean-lsl.sed", your build system will not work anymore (unless you edit some files and make adjustments). Don´t rename or move them.

Do not use names for directories or files that contain special characters or whitespace. Special characters may work, whitespace probably doesn´t. Maybe it works fine; I haven´t tested.

Suppose you want to try out "example-1". Enter the directory "example-1" and run 'make':

[~/src/lsl-repo/projects/example-1] make

generating dependencies: dep/example-1.di

generating dependencies: dep/example-1.d

preprocessing src/example-1.lsl

39 1202 dbg/example-1.i.clean

[~/src/lsl-repo/projects/example-1]

You will find the LSL script as "projects/example-1/bin/example-1.o". A debugging version is "projects/example-1/dbg/example-1.i". When you modify the source ("projects/example-1/src/example-1.lsl") just run make again after saving it, and new versions of the script will be created that replace the old ones.
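The naming scheme described above can be sketched in a few lines. This is only an illustration in Python, not part of the build system; the function name `expected_outputs` is made up for this example, and the only things taken from the article are the "src", "bin" and "dbg" directories and the ".o" and ".i" suffixes.

```python
from pathlib import PurePosixPath


def expected_outputs(source: str) -> dict:
    """Map a source file like <project>/src/<name>.lsl to the two
    files the article says 'make' creates for it.

    Hypothetical helper for illustration; the real work is done by
    the Makefile in the repository.
    """
    src = PurePosixPath(source)
    project = src.parent.parent      # <project>/src -> <project>
    name = src.stem                  # file name without ".lsl"
    return {
        "script": str(project / "bin" / (name + ".o")),  # goes into the object
        "debug": str(project / "dbg" / (name + ".i")),   # readable version
    }


if __name__ == "__main__":
    print(expected_outputs("projects/example-1/src/example-1.lsl"))
```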

Some files are generated in the "dep" directory. They are needed by the build system to figure out when a script needs to be rebuilt and whether entries need to be added to a look-up table or not. You can ignore those. A file is generated in the "make" directory. The file is needed to automatically replace scripts with the script editor built into your sl client, which will be described later.

Now you can log into sl with your sl client. Make a box, create a "New Script" in the box and open the "New Script". It shows up in the built-in editor. Delete the script in the built-in editor and copy-and-paste the contents of "projects/example-1/bin/example-1.o" into the built-in editor. Then click on the "Save" button of the built-in editor. (There is a way to do this kind of script-replacement automatically. It will be explained later.)

The "template-directories" is what you copy when you want to write your own source. Copy it with all the files and directories that are in it. Suppose you make a copy of the "template-directories" and name the copy "my-test". You would then put your own source into "my-test/src", like "my-test/src/my-test.lsl".

Of course, you can have several source files in "my-test/src". For all of them, scripts will be created. The "my-test" directory is the project directory for your project "my-test". When you are working on an object that requires several scripts, you´ll probably want to keep them all together in the project directory you made for this object.

If you get an error message when you run 'make', you are probably missing some of the tools the build system uses. Install the missing tools and try again.

Tools you need for the build system

Required tools:

- basename: coreutils

- cpp: GCC

- echo: coreutils

- editor: your favourite editor, Emacs is recommended

- make: Make

- perl: Perl

- sed: sed

Optional tools:

- astyle: astyle

- etags: Emacs

- pdflatex: TeX Live

- rm: coreutils

- wc: coreutils

You can replace "make/Makefile" with "make/Makefile-no-optional" and try without the optional tools. You can make "echo" optional, but automatically replacing scripts won´t work unless you can find a replacement for "@echo "// =`basename $@`" > $@" in the Makefile. Since perl is required, you could replace "echo" (and "basename" and "sed") with perl scripts. You can probably make further modifications so you won´t need perl, but what do you do without it?

When you´re messing with Windoze, you may want to check out Cygwin. When you´re stuck with Apple, maybe this repository helps.

However, under those conditions, you might be better off with a minimal Debian installation in a VM and installing needed packages from there. Last time I tried, Debian still let you install a minimal system and didn´t force you to use a GUI version of the installer. Fedora´s installer sucks, and with archlinux, you need to know what you´re doing.

In case you want to upgrade from either Macos or Windoze, Fedora is a good choice.

Editor

To create scripts, you do need an editor to write your sources. All sl clients I´ve seen have one built in, and it´s the worst editor I´ve ever seen. Fortunately, you don´t need to use it.

The built-in script editor is useful for replacing the scripts you have been working on in your favourite editor and to look at the error messages that may appear when your script doesn´t compile. Don´t use it for anything else.

Not using the built-in script editor has several advantages like:

- You can use a decent editor!

- You have all your sources safely on your own storage system.

- Your work does not get lost or become troublesome to recover when your sl client crashes, when you suddenly get logged out, when "the server experiences difficulties" again, when the object the script is in is being returned to you or otherwise gets "out of range".

- You don´t need to be logged in to work on your sources.

- You can preprocess and postprocess and do whatever else you like with your sources before uploading a script: You can use a build system.

- You can share your sources and work together with others because you can use tools like git and websites which will host your repositories for free. Even when you don´t share your sources, git is a very useful tool to keep track of the changes you're making.

- Your window manager handles the windows of your editor so that you are not limited to the window of your sl client which doesn´t do a great job when it comes to managing windows. You are not limited to a single monitor, and how fast you can move the cursor in the editor does not depend on the FPS-rate of your sl client.

- You can use fonts easier to read for you, simply change the background and foreground colours and switch to the buffer in which you read your emails or write a wiki article like this one ...

- Though it doesn´t crash, a decent editor has an autosave function and allows you to restore files in the unlikely case that this is needed.

- And yes, you can use a decent editor, even your favourite one :)

You can use any editor you like. An editor is essential and one of your most important tools. When your editor sucks, you cannot be productive. Use the best editor you can find.

Emacs

Emacs is my favourite editor. Give it a try, and don´t expect to learn everything about it within a day. It´s enough when you can move the cursor around, type something and load and save files. Over time, you will learn more just by looking up how to do something and trying it. You will find your way of doing what you need and adjust emacs to what you want --- or you don´t and use it as it is. Lots of documentation is available.

By default, the cursor keys move the cursor. Ctrl+x Ctrl+f loads a file into a new buffer and Ctrl+x Ctrl+s saves it. Ctrl+x b lets you switch to another buffer. These are usually written as C-x C-f, C-x C-s and C-x b.

Emacs is worthwhile to learn. It´s the only editor you need to learn because you don´t need any others. It has been around for a long time. If you had learned it twenty-five years ago, that would have saved you the time wasted with other editors which nowadays may not even exist anymore.

It doesn´t hurt to give it a serious try.

Syntax Highlighting

Emacs comes with syntax highlighting for many programming languages. It doesn´t come with syntax highlighting for LSL, but LSL syntax highlighting modes for emacs are available.

You can use Emacs_LSL_Mode.

A modified version of lsl-mode is in the git repository that accompanies this article as "emacs/lsl-mode.el". This version has been modified for use with a file I named "lslstddef.h" ("projects/include/lslstddef.h" in the repository) to give you better syntax highlighting with it.

Tags

Emacs supports so-called tags. Using tags allows you to find the declaration of functions, defines, macros and variables you use in your sources without searching. It doesn´t matter in which file they are.

The build system creates a so-called tags-file for you. Emacs can read it automatically. Esc+. (M-.) lets you enter a tag you want to go to and takes you there.

Git

See this page: A number of version control systems are supported, and git is one of them. Additional packages are available for this --- I haven´t tried any of them yet.

Customizing Emacs

The git repository that accompanies this article has a directory "emacs". It is recommended that you copy this directory to a suitable location like your home directory.

Two files are in this directory, "emacs" and "lsl-mode.el". "lsl-mode.el" provides a syntax highlighting mode for emacs. When you start emacs, it loads a file, usually "~/.emacs", and evaluates its contents. You could say it´s a configuration file.

That´s what the file "emacs" in the "emacs" directory is. You can move this file to "~/.emacs" or take a look at it and add what you need from it to the "~/.emacs" file you may already have when you are already using emacs.

"~/.emacs" contains instructions that make emacs load a file called "~/emacs/lsl-mode.el". You compile this file from within emacs with M-x byte-compile-file. Compiling it creates "~/emacs/lsl-mode.elc". Compiling "~/emacs/lsl-mode.el" is recommended, and once you have compiled it, you need to edit "~/.emacs" and change "(load "~/emacs/lsl-mode.el")" to "(load "~/emacs/lsl-mode.elc")".

Without using lsl-mode.el (or lsl-mode.elc), syntax highlighting for your sources when you create LSL scripts won´t be as nice, so you really want to do this.

Line Numbers

Line numbers have become an anachronism. They were used to create what was called "spaghetti code" with statements like "if(A) then goto 100;". Seriously. There was no other way. It helped to get you to think straight as a programmer. Events are horrible when you think straight, and I don´t like them. They are worse than Makefiles. Seriously. But there is no other way.

The built-in script editor displays line numbers. Emacs can do that, too. There´s a mode for it, called linum-mode. Try M-x linum-mode. After all, line numbers can be very useful for reference.

When you need to add line numbers to the contents of a buffer, look here.

Other Editors

In case you find that you don´t get along with emacs, you may want to check out this page about editors. There is also a page about alternate editors and build systems/IDEs.

Try vi, or vim, instead. You don´t need to try any other editors. Your time is better spent on learning emacs or vi.

Replacing Scripts

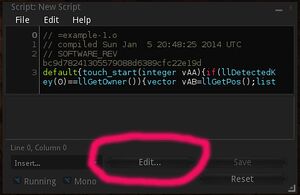

The editor built into sl clients comes with buttons, for example to save and to reset the script which is being edited. There is also a button labelled "Edit". As the label suggests, this button is for editing the script. The built-in editor itself is not for editing the script, though it´s not impossible to kinda use it for that.

Manually Replacing Scripts

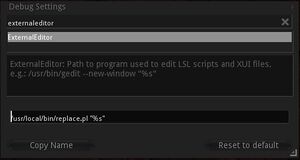

Open a script in your sl client and click on the edit button of the built-in editor. You might get an error message telling you that the debug setting "ExternalEditor" is not set. Don´t panic! Set this debug setting so that your favourite editor is used, and click on the edit button again.

Your favourite editor should now start and let you edit the script. This works because your sl client saves the script which you see in the built-in editor to a file on your storage system. Then your sl client starts whatever you specified in the debug setting as "external editor". To tell your favourite editor which file the script has been saved to, the place holder %s is used with the debug setting "ExternalEditor". The place holder is replaced with the name of the file your sl client has saved.
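How the place holder works can be modelled like this. The sketch is in Python and only illustrates the substitution; how your sl client really splits the setting into arguments is an assumption here, and `build_editor_command` is a name made up for this example.

```python
import shlex


def build_editor_command(setting: str, script_path: str) -> list:
    """Turn an "ExternalEditor"-style setting into an argument list.

    Assumption: the setting is split like a shell command line and
    every %s is replaced with the name of the saved file. The real
    sl client may handle quoting differently.
    """
    return [arg.replace("%s", script_path) for arg in shlex.split(setting)]


if __name__ == "__main__":
    # Example setting; emacsclient with -c is what replace.pl uses by default.
    print(build_editor_command('emacsclient -c "%s"', "/tmp/sl script.lsl"))
```

Quoting the place holder keeps a file name with whitespace in it together as a single argument.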

Once the script has been saved to a file, your sl client monitors this file for changes. When the file changes --- which it does when you edit and then save it with your favourite editor --- your sl client replaces the script in the built-in editor with the contents of the file.

This is nice because you could write a new script while you are offline. When you are ready to test the script, you could log in with your sl client, build a box, create a new script in the box, open the script, click on the "Edit" button, delete the contents of the new script in your favourite editor, insert the script you have written and save it.

Your sl client would notice that the file changed and put it into the built-in editor. It would let the sl server compile the script and show you the error messages, if any. When your script compiles and works right away, you´re done --- if not, you can use the built-in editor to check the error messages and your favourite editor to change the source as needed. Then you need to replace the script again.

At this point, things suddenly aren´t nice anymore: Replacing the script with your favourite editor all the time is a stupid process (unless you program your editor to do it for you). Even when you have only one script, having to replace the script over and over again while you are adding to it and debugging it sucks. It sucks even more when your object has 11 scripts working together and a little change makes it necessary to replace all of them.

You could mess around with the built-in editor and try to edit your script further with it, saving the replacing. That also sucks: The built-in editor is unusable, and you end up with at least two versions of the script. Since you want the script safely stored on your storage system and not lose your work when your sl client crashes or the like, you end up replacing the script on your storage system with the version you have in the built-in editor every now and then. When your object has 11 scripts that work together, it eventually becomes a little difficult to keep an overview of what´s going on --- not to mention that you need a huge monitor with a really high resolution (or a magnifying glass with a non-huge monitor with a really high resolution) to fit 11 built-in editor windows in a usable way on your screen to work on your scripts since there is no C-x b with the built-in editor to switch to a different buffer ...

Fortunately, there is a much better and very simple solution: A program which is started by your sl client instead of your favourite editor can replace the script for you.

Automatically Replacing Scripts

A program that replaces the script needs to know which script must be replaced with which file. To let the program know this, a useful comment is put into the script, and a look-up table is used. The comment tells the program the name of the script. The look-up table holds the information which script is to be replaced with which file. The build system puts the comment into the script and creates entries in the look-up table. You just click the "Edit" button to replace the script.

You can easily write a program that replaces files yourself. I wrote one when I got tired of all the replacing. It´s not really a program, but a perl script. It´s written in perl because perl is particularly well suited for the purpose.

You´ll find this script in the git repository that accompanies this article as "projects/bin/replace.pl". It works like this:

- look at the name of the file which is supplied as a command line argument; when it´s not named like "*.lsl", start your favourite editor to edit the file

- otherwise, look at the first line of the file

- when the line starts with "// =", treat the rest of the line from there as a key; otherwise start your favourite editor to edit both the file and the look-up table

- look up the key in the look-up table

- when the key is found in the table, replace the file saved by the sl client with the file the name of which is specified by the value associated with the key

- when the key is not found in the table, start your favourite editor to edit both the file and the look-up table
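The steps above can be sketched as a single decision function. This is a Python illustration of the logic only, not the perl script from the repository; the function name `decide`, the tuple-based return values and the in-memory table (a list of key/file pairs) are assumptions made for this example.

```python
KEY_PREFIX = "// ="


def decide(saved_file: str, first_line: str, table: list) -> tuple:
    """Decide what to do with the file the sl client has saved.

    table is a list of (key, replacement_file) pairs standing in for
    the look-up table; its real on-disk format is not modelled here.
    Returns ("edit", ...) when an editor should be started, or
    ("replace", replacement_file) when the script can be replaced.
    """
    if not saved_file.endswith(".lsl"):
        return ("edit", saved_file)                  # not a script: just edit it
    if not first_line.startswith(KEY_PREFIX):
        return ("edit", saved_file, "lookup-table")  # no key in the first line
    key = first_line[len(KEY_PREFIX):].strip()
    for table_key, replacement in table:
        if table_key == key:                         # the first match is used
            return ("replace", replacement)
    return ("edit", saved_file, "lookup-table")      # key not in the table


if __name__ == "__main__":
    table = [("example-1.o", "projects/example-1/bin/example-1.o")]
    print(decide("/tmp/sl_script.lsl", "// =example-1.o", table))
```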

To make this work, you need to:

- modify the perl script to start your favourite editor (in the unlikely case that your favourite editor isn´t emacs)

- change the debug setting "ExternalEditor" so that your sl client starts the perl script instead of your favourite editor

- make sure that your LSL script starts with a line like "// =example-script.o" --- the build system does this for you

- make sure that the look-up table exists --- the build system does this for you

To modify the perl script to use your favourite editor, edit it and change "use constant EDITOR => "emacsclient";" --- and perhaps "use constant EDITORPARAM => "-c";". There are comments in the script explaining this.

When you have your sl client start the perl script, it is difficult or impossible to see error messages you may get when running the perl script. If there is an error, it will probably seem as if nothing happens when you click on the "Edit" button.

One way to test if it works is to make sure that the look-up table does not exist and then to click on the "Edit" button of the built-in script editor in your sl client. If the look-up table exists, you find the table as "projects/make/replaceassignments.txt". When your favourite editor starts to let you edit both the file your sl client saved and the (empty) look-up table, it is very likely that it´s working.

The build system fills the look-up table for you. It adds entries for new scripts as needed when you run 'make'. It does not delete entries from the table. To make such entries, the perl script "projects/bin/addtotable.pl" in the git repository that accompanies this article is used.

The first entry in the table that matches is used. If you plan to have multiple scripts with the same name, you may need to adjust addtotable.pl, replace.pl and the Makefile.

The build system creates "compressed" versions of the scripts and versions that are not compressed. The "compressed" versions are named *.o and the not-compressed versions are named *.i.

The "compressed" version can be pretty unreadable, which can make debugging difficult. The not-compressed version is formatted with astyle (see below) to make it easier to read. It can be used for debugging. (The "compressed" version is what you want to put as a script into your object.)

To see the debugging version of the script in the built-in editor, change the first line of the script in the built-in editor from something like "// =example-script.o" to "// =example-script.i" and click on the "Edit" button. That puts the version of the script which is not compressed into the built-in editor. Switch the "i" back to an "o", click the "Edit" button and the "compressed" version is put back.
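Switching between the two versions only changes the suffix of the key in the first line. A small Python sketch of that edit, with a made-up function name `switch_variant`, could look like this; you would normally just change the letter by hand.

```python
KEY_PREFIX = "// ="


def switch_variant(first_line: str, suffix: str) -> str:
    """Rewrite the key in the first line to end in ".<suffix>".

    "o" selects the "compressed" script, "i" the debugging version.
    Hypothetical helper, for illustration only.
    """
    if not first_line.startswith(KEY_PREFIX):
        raise ValueError("first line does not carry a key")
    key = first_line[len(KEY_PREFIX):].strip()
    stem, dot, _old = key.rpartition(".")
    if not dot:
        raise ValueError("key has no suffix to switch")
    return KEY_PREFIX + stem + "." + suffix


if __name__ == "__main__":
    print(switch_variant("// =example-script.o", "i"))
```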

A Preprocessor: cpp

The Wikipedia article about preprocessors gives an interesting explanation of what a preprocessor is and provides an overview. For the purpose of making it easier to create LSL scripts, a preprocessor as is used with C (and C++) compilers is a very useful tool.

The particular preprocessor I´m using is called "cpp" and comes with GCC.

What a preprocessor does

A preprocessor enables you:

- to include files into others

- to define so-called "defines" and so-called "macros"

- to create different scripts from the same sources through the use of conditionals

That doesn´t sound like much, yet these are rather powerful features. Some of the advantages that come with these features are:

- You can create libraries and include them into your sources as needed: Your code becomes easily re-usable.

- Your sources become much easier to modify and to maintain because instead of having to change parts of the code in many places, you can change a definition at one single place --- or code in a library or a header file --- and the change will apply everywhere.

- Scripts may become less prone to bugs and errors because code is easy to re-use and easier to modify and to maintain. That can save you a lot of time.

- You may create scripts that use less memory because instead of using variables as constants, you can use so-called defines. This may allow you to do something in one script rather than in two and save you all the overhead and complexity of script-intercommunication.

- Macros can be used instead of functions, which may save memory and can help to break down otherwise unwieldy logic into small, simple pieces which are automatically put together by the preprocessor.

- Using macros can make the code much more readable, especially with LSL since LSL is often unwieldy in that it uses lengthy function names (like llSetLinkPrimitiveParamsFast(), ugh ...) and in that the language is very primitive in that it doesn´t even support arrays, let alone user-definable data structures or pointers or references. It is a scripting language and not a programming language.

- Using macros can make it much easier to create a script because you need to define a macro only once, which means you never again need to figure out how to do what the macro does and just use the macro. The same goes for libraries.

- Frequently used macros can be gathered in a file like "lslstddef.h" which can be included into all your scripts. When you fix a bug in your "lslstddef.h" (or however you call it), the bug is fixed in all your scripts that include it with no need to look through many scripts to fix the very same bug in each of them at many places in their code. The same goes for bugs fixed in a library.

- Macros can be used for frequently written code snippets --- like to iterate over the elements of a list --- and generate several lines of code automatically from a single line in your source. That can save you a lot of typing.

- Conditionals can be used to put code which you have in your source into the resulting LSL script depending on a single setting or on several settings, like debugging messages that can be turned on or off by changing a single digit.

- Using conditionals makes it simple to create libraries and to use only those functions from libraries which are needed by a particular script.

- Macros and defines in your sources only make it into a script when they are used.

There are probably lots of advantages that don´t come to mind at the moment. You can be creative and find uses and advantages I would never think of.

How cpp works

Some extensive documentation about cpp can be found here.

Imagine you want to create a script that figures out which agent on the same parcel is the furthest away from the object the script is in and moves the object towards this agent when the owner of the object touches it.

In the following, the source for the script will be developed piece by piece. You can find the complete source in the git repository that accompanies this article in "projects/example-1/src/example-1.lsl".

Obviously, the script will need a touch event, a way to find out which agent is the furthest away and a way of moving to the agent.

In this case, keyframed motion (KFM) is a good way to get the object moving: It doesn´t require the object to be physical and thus lets it move through obstacles like walls. KFM requires the object to use the so-called "prim equivalency system", which means that it must either be mesh, or the physics type of one of its prims must be either "convex hull" or "none". This can either be set in the editing window when editing the object, or by the script. The physics type of the root prim cannot be "none", and since it´s hard to say whether "none" or "convex hull" is better for a particular object, the script will simply use "convex hull". Since the physics type of the object needs to be set only once, the script should use a state_entry event to set it. It can be set with llSetLinkPrimitiveParamsFast().

Finding out which agent is the furthest away from the object requires finding out which agents there are. A sensor is unsuited because the agent could be out of sensor range, and the sensor would report no more than the closest sixteen agents. Using llGetAgentList() works much better for this. It is also necessary to find out what the distances between the agents and the object are, for which llGetObjectDetails() can be used.
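The search itself is a plain "keep the largest distance seen so far" loop. The following Python sketch shows only that logic; positions are plain tuples and the dictionary of agents stands in for what llGetAgentList() and llGetObjectDetails() would deliver in the script. The function name and the data layout are assumptions made for this example.

```python
import math


def furthest_agent(here: tuple, agent_positions: dict):
    """Return (agent_key, distance) for the agent furthest from 'here'.

    agent_positions maps an agent key to an (x, y, z) position.
    Returns None when there are no agents at all.
    """
    best = None
    for key, pos in agent_positions.items():
        distance = math.dist(here, pos)   # straight-line distance
        if best is None or distance > best[1]:
            best = (key, distance)
    return best


if __name__ == "__main__":
    agents = {"agent-a": (3.0, 4.0, 0.0), "agent-b": (1.0, 0.0, 0.0)}
    print(furthest_agent((0.0, 0.0, 0.0), agents))
```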

The object should move to a position at "some distance" from the agent. It seems nicer to use a random distance rather than a fixed one. This can easily be done by creating a "random vector", i.e. a vector the components of which are randomized. What llFrand() returns is not really a random number, but it´s sufficient for this purpose.
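A "random vector" can be pictured like this. The Python sketch assumes each component is drawn independently from zero up to (but not including) a maximum, the way llFrand() behaves for a positive argument; the function names and the choice to add the offset to the agent´s position are assumptions, and the example script may do it differently.

```python
import random


def random_vector(max_component: float, rng=random) -> tuple:
    """Three independent components, each in [0, max_component)."""
    return tuple(rng.random() * max_component for _ in range(3))


def target_near(agent_pos: tuple, max_offset: float, rng=random) -> tuple:
    """A position at "some distance" from the agent: agent position
    plus a random offset."""
    offset = random_vector(max_offset, rng)
    return tuple(a + o for a, o in zip(agent_pos, offset))


if __name__ == "__main__":
    print(target_near((128.0, 128.0, 25.0), 2.0))
```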

As with all sources, I want to use some of the defines that are gathered in my "lslstddef.h". One of them is the macro "RemotePos()". It is a shortcut for using llGetObjectDetails() to find out the position of an object or prim or agent. Another one is "SLPPF()", a shortcut for llSetLinkPrimitiveParamsFast() because llSetLinkPrimitiveParamsFast() is awfully long.

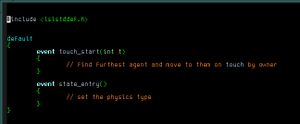

This makes for a source (example-1_A) that looks like this:

#include <lslstddef.h>

default

{

event touch_start(int t)

{

// find furthest agent and move to them on touch by owner

}

event state_entry()

{

// set the physics type

}

}

The '#include' in the first line is a so-called preprocessor directive. It tells the preprocessor to do something. There are other directives, like "#define", "#undef", "#if", "#endif" and some others.

"#include" tells cpp to find a file "lslstddef.h" within some directories it searches through by default and to make it so as if the contents of this file were part of the source. Suppose you have the source saved as "example-1.lsl": The preprocessor includes "lslstddef.h" into "example-1.lsl". It´s output will not contain the first line because the first line is a preprocessor directive.

The source ("example-1.lsl") is never modified by the preprocessor. Including "lslstddef.h" makes everything in "lslstddef.h" known to the preprocessor. The preprocessor produces an output which is a copy of the source with modifications applied to it as the directives, like the ones in "lslstddef.h", demand.

"lslstddef.h" has a lot of directives for the preprocessor. Most of them define something with "#define". When something is defined with "#define", it is a so-called define. Macros can also be defined with "#define". Macros are called macros because they are defines that take parameters.

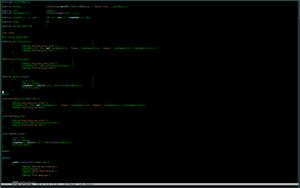

You may have noticed that in example-1_A, it doesn´t read "touch_start()" but "event touch_start()". That´s because I write my sources like that: Putting "event" before events makes them easier to find and the source looks nicer and is easier to read for me. In your sources, you can do it the same way or use something else than "event" or just write "touch_start()" instead. You can see what it looks like on my screen in the example picture --- I currently use green on black in emacs. It sticks out, and "event" makes a good search string that saves me searching for particular events.

The LSL compiler wouldn´t compile the source, not only because it doesn´t know what to do with "#include" directives, but also because it doesn´t compile statements like "event touch_start()". Therefore, "lslstddef.h" defines that "event" is nothing:

#define event

By including "lslstddef.h", cpp knows this and replaces all occurrences of "event" with nothing, making "event" disappear from the result. This doesn´t mean that "event" is not defined. It´s a define. You can use the directive "#ifdef" with such defines:

#ifdef event

#define test 1

#endif

This would create a define named "test" only if "event" is defined. Much like cpp replaces "event" with nothing, it would now replace "test" with "1" because "test" is defined to be "1". With conditionals like "#ifdef", "#if" or "#ifndef", you can have code from your source put into the script depending on what is defined. For example, you can disable a block of code simply by putting "#if 0" at the start of the block and "#endif" at the end of the block. That´s easier than commenting out the whole region.
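Since cpp does not care what language it preprocesses, these conditionals can be tried with any C compiler before using them in an LSL source. The following is a minimal sketch in plain C, not LSL. The names "demo_flag", "demo_value" and "disabled_block_ran" are made up for this illustration and do not come from "lslstddef.h".

```c
/* An empty define, like "#define event" in lslstddef.h. */
#define event

/* "event" is defined (to nothing), so the define below is created. */
#ifdef event
#define demo_flag 1
#endif

/* "event" disappears here, leaving an ordinary function definition.
   Returns what demo_flag is replaced with, or 0 if it was never defined. */
event int demo_value(void)
{
#ifdef demo_flag
    return demo_flag;
#else
    return 0;
#endif
}

/* Returns 1 only if the code between "#if 0" and "#endif" survived. */
int disabled_block_ran(void)
{
    int ran = 0;
#if 0
    ran = 1; /* removed by the preprocessor, never reaches the compiler */
#endif
    return ran;
}
```

Changing "#if 0" to "#if 1" brings the disabled block back without touching the block itself.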

The library files in "projects/lib" in the git repository that accompanies this article make use of conditionals. The whole library file is included into the source, and defines are used to decide which functions from the libraries will be in the resulting script. You can see in "projects/rfedip-reference-implementation/src/rfedipdev-enchain.lsl" how it´s done.

The example script needs to set the physics type and to check if it was the owner of the object who touched it. It needs to know the position of the object the script is in and what agents are around. Add all this to the source, which is now example-1_B:

#include <lslstddef.h>

default

{

event touch_start(int t)

{

unless(llDetectedKey(0) == llGetOwner())

{

return;

}

vector here = llGetPos();

list agents = llGetAgentList(AGENT_LIST_PARCEL, []);

}

event state_entry()

{

SLPPF(LINK_THIS, [PRIM_PHYSICS_SHAPE_TYPE, PRIM_PHYSICS_SHAPE_CONVEX]);

}

}

With

#define event

#define SLPPF llSetLinkPrimitiveParamsFast

in "lslstddef.h", the preprocessor replaces "event" with nothing and "SLPPF" with "llSetLinkPrimitiveParamsFast".

Lists in LSL can be a pita to work with. You end up using functions to pick out list elements like llList2Key(). They combine with functions that return lists and lead to unwieldy expressions like

llList2Vector(llGetObjectDetails(llList2Key(agents, 0), [OBJECT_POS]), 0);

which combine with other functions like llVecDist() into even longer expressions like

float d = llVecDist(llList2Vector(llGetObjectDetails(llList2Key(agents, 0), [OBJECT_POS] ), 0),

llList2Vector(llGetObjectDetails(llList2Key(agents, 1), [OBJECT_POS] ), 0));

if you were to compute the distance between two agents.

Lists are sometimes strided, and then you also need to remember which item of a stride is to be interpreted as what. You can use defines and macros to avoid this. If you were to compute the distance between two agents, you could use defines like:

#include <lslstddef.h>

#define kAgentKey(x) llList2Key(agents, x)

#define fAgentsDist(a, b) llVecDist(RemotePos(kAgentKey(a)), RemotePos(kAgentKey(b)))

Instead of the lengthy expression above, your source would then be:

float d = fAgentsDist(0, 1);

How much easier to write and read and maintain is that? How much less prone to errors is it?

The list used in example-1_B is simple, not strided, and a local variable. Using a define and macros to make it usable is overdoing it, but I want to show you how it can be done.

You can put preprocessor directives anywhere into your source. They don´t need to be kept in separate files. Traditionally, they had to be at the beginning of a line. This isn´t required anymore --- but do not indent them! They are not part of your code. They modify your code. You could see them as "metacode". They do not belong indented.

With example-1_B, four things can be defined to make the list of agents easier to use:

- a define for the stride of the list: "#define iSTRIDE_agents 1"

- a macro that gives the UUID of an agent on the list: "#define kAgentKey(x) llList2Key(agents, x)"

- a macro that gives the position of an agent on the list: "#define vAgentPos(x) RemotePos(kAgentKey(x))"

- a macro that gives the distance of an agent from the object: "#define fAgentDistance(x) llVecDist(here, vAgentPos(x))"

Those will be used for a loop that iterates over the list. This leads to example-1_C:

#include <lslstddef.h>

default

{

event touch_start(int t)

{

unless(llDetectedKey(0) == llGetOwner())

{

return;

}

vector here = llGetPos();

list agents = llGetAgentList(AGENT_LIST_PARCEL, []);

#define iSTRIDE_agents 1

#define kAgentKey(x) llList2Key(agents, x)

#define vAgentPos(x) RemotePos(kAgentKey(x))

#define fAgentDistance(x) llVecDist(here, vAgentPos(x))

int agent = Len(agents);

while(agent)

{

agent -= iSTRIDE_agents;

}

}

event state_entry()

{

SLPPF(LINK_THIS, [PRIM_PHYSICS_SHAPE_TYPE, PRIM_PHYSICS_SHAPE_CONVEX]);

}

}

Since the list is a local variable, the defines are placed after its declaration. You could place them somewhere else, maybe at the top of the source. Placing them inside the scope of the list makes clear that they are related to this particular list.

The define "iSTRIDE_agents" is the stride of the list, 1 in this case. Using "--agent;" in the loop instead of "agent -= iSTRIDE_agents;" would be better, but it´s done for the purpose of demonstration. When you have a strided list, it is a good idea to always use a define for it: It doesn´t hurt anything and makes it easy to modify your source because there is only one place you need to change. With strided lists, use defines for the indices of stride-items so you don´t need to go through the macro definitions to make sure the indices are ok when you change the composition of the list. You can look at "projects/rfedip-reference-implementation/src/rfedipdev-enchain.lsl" in the git repository that accompanies this article for a source that uses a strided list.
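The advice about defines for the stride and for the indices of stride-items can be sketched in plain C with a flat array standing in for an LSL list. Everything here is hypothetical: the layout (an id followed by a distance), the "iIDX_..." names and the function "furthest_agent_id" are invented for illustration and are not taken from the repository.

```c
/* Hypothetical strided "list": each stride holds an id and a distance. */
#define iSTRIDE_agents 2
#define iIDX_agents_id 0
#define iIDX_agents_distance 1

/* Accessors: x is the index of the first item of a stride. */
#define iAgentId(list, x) ((list)[(x) + iIDX_agents_id])
#define iAgentDistance(list, x) ((list)[(x) + iIDX_agents_distance])

/* Walks the list backwards one stride at a time, like the loop in
   example-1_C, and returns the id stored with the largest distance,
   or -1 for an empty list. Assumes len is a multiple of the stride
   and that distances are not negative. */
int furthest_agent_id(const int *list, int len)
{
    int best_id = -1;
    int best_distance = -1;
    int agent = len;

    while (agent) {
        agent -= iSTRIDE_agents;
        if (best_distance < iAgentDistance(list, agent)) {
            best_distance = iAgentDistance(list, agent);
            best_id = iAgentId(list, agent);
        }
    }
    return best_id;
}
```

If a third item were added to each stride, only "iSTRIDE_agents" and one new index define would need to change. The loop and the accessors stay as they are.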

"kAgentKey" is a macro. It´s a define that takes parameters. You might think the parameter is an integer which is an index to the list in which the UUIDs of the agents are stored. It is not: The parameter is used an index. The preprocessor doesn´t know anything about lists or integers.

There are no types involved with macro parameters as are with parameters of functions: When you declare a function in LSL that takes parameters, you need to declare what type the parameters are of. Macro parameters don´t have a type. The preprocessor replaces stuff literally. You could use a vector or some random string as a parameter with "kAgentKey". Cpp would do the replacement just fine and not give you error messages. It replaces text like a search-and-replace operation you perform with your favourite editor does. This can sometimes be limiting:

#include <lslstddef.h>

#define component(f) ((string)(f))

#define components(v) llSay(0, component(v.x) + component(v.y) + component(v.z))

default

{

event touch_start(int t)

{

vector one = ZERO_VECTOR;

vector two = <0.5, 0.5, 2.2>;

components(one + two);

}

}

The above source will turn into a script that does not compile because cpp replaces literally:

default

{

touch_start(integer t)

{

vector one = ZERO_VECTOR;

vector two = <0.5, 0.5, 2.2>;

llSay(0, ((string)(one + two.x)) + ((string)(one + two.y)) + ((string)(one + two.z)));

}

}

Introduce another vector and it works great:

#include <lslstddef.h>

#define component(f) ((string)(f))

#define components(v) llSay(0, component(v.x) + component(v.y) + component(v.z))

default

{

event touch_start(int t)

{

vector one = ZERO_VECTOR;

vector two = <0.5, 0.5, 2.2>;

vector composed = one + two;

components(composed);

}

}

You can find this example in the git repository that accompanies this article in "projects/components".

Macros are not functions.
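One consequence of literal replacement that is easy to overlook: when a macro uses its parameter more than once, whatever you pass in is evaluated more than once. The following plain C sketch counts the calls. The names "SQUARE_MACRO", "counted" and the two "calls_via_..." helpers are invented for this illustration.

```c
/* Text replacement: the argument is pasted in twice. */
#define SQUARE_MACRO(x) ((x) * (x))

static int calls = 0;

/* Counts how often it is called, then returns n unchanged. */
int counted(int n)
{
    calls++;
    return n;
}

/* A real function evaluates its argument once, before the call. */
int square_function(int x)
{
    return x * x;
}

/* Returns how many times counted() ran for one use of the macro. */
int calls_via_macro(void)
{
    calls = 0;
    (void)SQUARE_MACRO(counted(4)); /* ((counted(4)) * (counted(4))) */
    return calls;
}

/* Returns how many times counted() ran for one use of the function. */
int calls_via_function(void)
{
    calls = 0;
    (void)square_function(counted(4));
    return calls;
}
```

The same applies when the argument is an expensive expression rather than a function with a counter: it ends up in the script as many times as the macro body mentions the parameter.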

Unfortunately, you cannot define defines or macros with defines: "#define NEWLIST(name, stride) #define list name; #define iSTRIDE_##name stride" will cause cpp to stop preprocessing and to print an error message. Maybe I´ll write a perl script to pre-preprocess my sources ... that might be easier than learning m4.

"vAgentPos" and "fAgentDistance" are also macros and work the same way as "kAgentKey". The code to figure out which agent is the one furthest away from the object is simple, and I´ll skip over it. It gets interesting when the movement is implemented, so here is example-1_D, the complete source:

#include <lslstddef.h>

#define fUNDETERMINED -1.0

#define fIsUndetermined(_f) (fUNDETERMINED == _f)

#define fMetersPerSecond 3.0

#define fSecondsForMeters(d) FMax((d), 0.03)

#define fRANGE(_f) (_f)

#define vMINRANGE(_f) (<_f, _f, _f>)

#define fRandom(_fr) (llFrand(fRANGE(_fr)) - llFrand(fRANGE(_fr)))

#define vRandomDistance(_fr, _fm) (<fRandom(_fr), fRandom(_fr), fRandom(_fr)> + vMINRANGE(_fm))

#define fDistRange 3.5

#define fMinDist 0.5

default

{

event touch_start(int t)

{

unless(llDetectedKey(0) == llGetOwner())

{

return;

}

vector here = llGetPos();

list agents = llGetAgentList(AGENT_LIST_PARCEL, []);

#define iSTRIDE_agents 1

#define kAgentKey(x) llList2Key(agents, x)

#define vAgentPos(x) RemotePos(kAgentKey(x))

#define fAgentDistance(x) llVecDist(here, vAgentPos(x))

float distance = fUNDETERMINED;

int goto;

int agent = Len(agents);

while(agent)

{

agent -= iSTRIDE_agents;

float this_agent_distance = fAgentDistance(agent);

if(distance < this_agent_distance)

{

distance = this_agent_distance;

goto = agent;

}

}

unless(fIsUndetermined(distance))

{

distance /= fMetersPerSecond;

vector offset = vRandomDistance(fDistRange, fMinDist);

offset += PosOffset(here, vAgentPos(goto));

llSetKeyframedMotion([offset,

fSecondsForMeters(distance)],

[KFM_DATA, KFM_TRANSLATION]);

}

#undef kAgentKey

#undef vAgentPos

#undef fAgentDistance

}

event state_entry()

{

SLPPF(LINK_THIS, [PRIM_PHYSICS_SHAPE_TYPE, PRIM_PHYSICS_SHAPE_CONVEX]);

}

}

There isn´t much new to it. "unless" is defined in "lslstddef.h" and speaks for itself. Just make sure you know what you´re doing when you use "unless(A && B)": Did you really want "A && B", or did you want "A || B"? Think about it --- or perhaps keep it simple and don´t use conditions composed of multiple expressions with "unless".
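The question about "unless(A && B)" can be checked in plain C. This sketch assumes that "unless" is simply a negated "if"; the actual definition in "lslstddef.h" may differ, and the function names are made up.

```c
/* Assumed definition; the real one in lslstddef.h may look different. */
#define unless(cond) if (!(cond))

/* The body runs when NOT (a AND b), i.e. when at least one is false. */
int unless_and(int a, int b)
{
    unless (a && b) {
        return 1;
    }
    return 0;
}

/* The same condition written out: (NOT a) OR (NOT b). */
int if_not_a_or_not_b(int a, int b)
{
    if (!a || !b) {
        return 1;
    }
    return 0;
}
```

Both functions agree for every combination of a and b, which shows that "unless(A && B)" already fires when only one of the two is false.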

"fUNDETERMINED" and "fIsUndetermined" speak for themselves, too: There are no negative distances between positions, and what "llVecDist()" returns is never negative. There may be no agents on the parcel, in which case the list of agents would be empty. It´s length would be 0 and the code in the while loop would not be executed. The variable "distance" would still have the value it was initialized with when it was declared. What the furthest agent is would be undetermined, and that´s exactly what the code tells you.

The movement of the object should look nice. It looks nice when the object always moves at the same speed. "llSetKeyframedMotion()" doesn´t use speed. You have to specify the duration of the movement. Since the distance between the object and agents it moves to varies, the duration of the movement is adjusted according to the distance. The minimum duration "llSetKeyframedMotion()" works with is 1/45 second. "fSecondsForMeters" makes sure that the duration is at least 1/45 second through the "FMax" macro which is defined in "lslstddef.h": "FMax" evaluates to the greater value of the two parameters it is supplied with.
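The duration calculation can be tried in plain C, where a comparison also evaluates to 1 or 0. This is only a sketch: the real macros live in "lslstddef.h", the names "FMAX_SKETCH" and "seconds_for" are made up, and the "llFabs()" wrapper used in the LSL version is left out.

```c
/* Ternary version, usable for integers and floats alike. */
#define MAX(a, b) (((a) > (b)) ? (a) : (b))

/* Version without a ternary operator: each comparison yields 1 or 0,
   so one operand is multiplied by 1 and the other by 0. */
#define FMAX_SKETCH(x, y) ((((x) >= (y)) * (x)) + (((x) < (y)) * (y)))

/* Hypothetical helper: seconds needed for `meters` at `speed` meters per
   second, but never less than `min_seconds`. */
double seconds_for(double meters, double speed, double min_seconds)
{
    return FMAX_SKETCH(meters / speed, min_seconds);
}
```

Note that the division "meters / speed" appears twice in what the macro expands to, once per comparison operand and once more in a product. Dividing first and passing the result avoids that.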

"vRandomDistance" is used to help with that the object shouldn´t hit the agent it moves to and ends up at some random distance from them, with a minimum offset. "PosOffset" from "lslstddef.h" works with two vectors and gives the offset between them as a vector. It´s one of my favourite macros because I don´t need to ask myself anymore what "offset" is supposed to mean: "here - there" or "there - here". The offset is simply "PosOffset(here, there)" when it´s an offset from here to there and "PosOffset(there, here)" when it´s offset from there to here. Like many other things, "llSetKeyframedMotion()" uses offsets ...

The important thing here is this: Why divide the distance and introduce another vector for the offset when you could simply do it in one line like this:

llSetKeyframedMotion([PosOffset(here, vAgentPos(goto) + vMINRANGE(0.5)),

FMax(distance / fMetersPerSecond, 1.0/45.0)],

[KFM_DATA, KFM_TRANSLATION]);

That´s one thing all these macros are for, aren´t they? Yes they are --- and you need to be aware of what they turn into. Cpp is designed to preprocess code for a C or C++ compiler, and those are probably a lot smarter than an LSL compiler. The C compiler optimizes "1.0/45.0" by replacing it with the result of the division. C has a ternary operator which makes life a lot easier not only for writing macros like "FMax": "#define MAX(a,b) (((a) > (b)) ? (a) : (b))" works for both integers and floats --- compared to "#define FMax(x, y) (((llFabs((x) >= (y))) * (x)) + ((llFabs((x) < (y))) * (y)))" and another one for integers.

The line from above turns into:

llSetKeyframedMotion([( (llList2Vector(llGetObjectDetails(llList2Key(agents, goto), [OBJECT_POS] ),

0) + (<0.5, 0.5, 0.5>)) - (here) ),

( ( (llFabs( (distance / 3.0) >= (1.0 / 45.0) ) ) * (distance / 3.0) ) + ( (llFabs( (

distance / 3.0) < (1.0 / 45.0) ) ) * (1.0 / 45.0) ) )],

[KFM_DATA, KFM_TRANSLATION]);

Leaving aside that the code would be totally different when written in C, a C compiler would optimize it. I don´t know what the LSL compiler does.

Always be aware of what your macros turn into. The code from example-1_D turns into:

distance /= 3.0;

vector offset = ( < (llFrand((3.5)) - llFrand((3.5))), (llFrand((3.5)) - llFrand((3.5))),

(llFrand((3.5)) - llFrand((3.5))) > + (<0.5, 0.5, 0.5>));

offset += ( (llList2Vector(llGetObjectDetails(llList2Key(agents, goto), [OBJECT_POS] ),

0)) - (here) );

llSetKeyframedMotion([offset, ( ( (llFabs( ((distance)) >= (0.03) ) ) * ((distance)) ) + ( (

llFabs( ((distance)) < (0.03) ) ) * (0.03) ) )], [KFM_DATA, KFM_TRANSLATION]);

It´s still awful to read. What did you expect? The source looks nice and clear (not in the wiki but in emacs) because macros and defines are used, which is one of the reasons to use them. More importantly, this code doesn´t employ numerous needless divisions because I checked the outcome and modified my source.

Always check the script for what your macros turn into. It´s easy to check, just load the debugging version into your favourite editor. You can even use the built-in editor: change the "o" for an "i" and click on the "Edit" button as described earlier.

One thing is new in example-1_D: The defines that were used to make the list with the UUIDs of the agents usable are undefined with "#undef". It´s not necessary to do this, it´s tidy. As mentioned before, the list has local scope. That´s the reason I undefined the defines that relate to it. It ends their "scope" as well. You can create new defines with the same names outside this scope if you like. They are undefined and out of the way. Perhaps at some time, you include something into your source that happens to use defines with the same name. You would get an error message and have to go back to the perhaps old source and figure out where you can undefine them. It doesn´t hurt to undefine them right away and saves you time later.
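How "#undef" ends the "scope" of a define can be sketched in plain C. The name "OFFSET" and the three functions are invented for this illustration.

```c
/* The helper define only exists between #define and #undef. */
int first_user(void)
{
#define OFFSET 1
    int result = 10 + OFFSET;
#undef OFFSET
    return result;
}

/* The name is free again, so it can be reused with another meaning
   without cpp complaining about a redefinition. */
int second_user(void)
{
#define OFFSET 100
    int result = 10 + OFFSET;
#undef OFFSET
    return result;
}

/* Returns 1 if OFFSET is still defined at this point, else 0. */
int offset_leaked(void)
{
#ifdef OFFSET
    return 1;
#else
    return 0;
#endif
}
```

Without the first "#undef", the second "#define OFFSET 100" would redefine a macro that already has a different value, which cpp reports.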

Script memory and performance with cpp

You may have seen or written scripts that use variables as constants. Look at this example:

#include <lslstddef.h>

#define WITH_DEFINE_FLOAT 0

#define WITH_DEFINE_INT 0

#if WITH_DEFINE_FLOAT

#define ftest 1.0

#else

float ftest = 1.0;

#endif

#if WITH_DEFINE_INT

#define itest 1

#else

int itest = 1;

#endif

default

{

event touch_start(int t)

{

apf("---");

apf("the float is", ftest, "and the int is", itest, " with ", llGetFreeMemory(), " bytes free");

apf("the float is", ftest, "and the int is", itest, " with ", llGetFreeMemory(), " bytes free");

apf("the float is", ftest, "and the int is", itest, " with ", llGetFreeMemory(), " bytes free");

apf("the float is", ftest, "and the int is", itest, " with ", llGetFreeMemory(), " bytes free");

apf("the float is", ftest, "and the int is", itest, " with ", llGetFreeMemory(), " bytes free");

apf("the float is", ftest, "and the int is", itest, " with ", llGetFreeMemory(), " bytes free");

apf("the float is", ftest, "and the int is", itest, " with ", llGetFreeMemory(), " bytes free");

apf("the float is", ftest, "and the int is", itest, " with ", llGetFreeMemory(), " bytes free");

apf("the float is", ftest, "and the int is", itest, " with ", llGetFreeMemory(), " bytes free");

apf("the float is", ftest, "and the int is", itest, " with ", llGetFreeMemory(), " bytes free");

apf("---");

}

}

You can find this example in the git repository that accompanies this article as "projects/memtest/src/memtest.lsl".

It shows that the script needs 8 bytes more memory when you use either a float or an integer variable --- or both! --- instead of defines. That means when you declare a variable, declaring another one doesn´t make a difference, provided that the variables are of the types float and integer.

Either the test or the results must be flawed. One thing I wanted to know is whether it makes a difference if you have several lines with a number (like 1 or 1.0) vs. the same several lines with a variable instead of the number. This test doesn´t show that, perhaps due to optimizations the LSL compiler performs.

I use defines for constants --- not because it apparently may save 8 bytes, but because it makes sense. That you can switch between defines and variables for constants makes testing with "real" scripts easy.

Test with your actual script what difference it makes.

It seems obvious that you would waste memory when you use a macro that the preprocessor turns into a lengthy expression which occurs in the script multiple times. When it matters, test it; you may be surprised.

You can also try to test for performance. Provided you find a suitable place to set up your test objects and let the tests run for at least a week, you may get valid results. Such tests could be interesting because you may want to define a function instead of a macro --- or a function that uses a macro --- to save script memory when the macro turns into a lengthy expression. In theory, the macro would be faster because using a function requires the script interpreter to jump around in the code and to deal with putting parameters on the stack and with removing them while the macro does not. But in theory, declared variables use script memory. Perhaps the LSL compiler is capable of inlining functions?

When programming in C or perl and you have a statement like

if((A < 6) && (B < 20))

[...]

the part '(B < 20)' will not be evaluated when '(A < 6)' evaluates to false. This is particularly relevant when you, for example, call a function or use a macro:

if((A < 6) && (some_function(B) < 20))

[...]

LSL is buggy in that it will call the function or evaluate the expression the macro turned into even when '(A < 6)' evaluates to false. It´s impossible for the whole condition to evaluate to true when '(A < 6)' is false, and evaluating a condition should stop once its outcome is known. Calling a function takes time, and the function might even be fed with illegal parameters because B could have a value the function cannot handle, depending on what the value of A is:

integer some_function(integer B)

{

llSay(0, "10 divided by " + (string)B + " is: " + (string)(10 / B));

return 10 / B;

}

default

{

touch_start(integer total_number)

{

integer B = 20;

integer A = 7;

if(A < 8) B = 0;

if((A < 6) && (some_function(B) < 20))

{

llSay(0, "stupid");

}

}

}

This example will yield a math error because some_function() is called even when it should not be called. Such nonsense should be fixed. To work around it so that some_function() isn´t called, you need to add another "if" statement, which eats memory.
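For comparison, here is how the same condition behaves in C, together with the nested "if" workaround. This is a plain C sketch, not LSL. The counter "divisions" and the functions "short_circuit", "nested_if" and "division_count" are made up for illustration.

```c
static int divisions = 0;

/* Counts its calls; would divide by zero if ever called with b == 0. */
int some_function(int b)
{
    divisions++;
    return 10 / b;
}

/* C stops at the first false operand of "&&", so some_function() is
   skipped whenever (a < 6) is false. */
int short_circuit(int a, int b)
{
    return (a < 6) && (some_function(b) < 20);
}

/* The workaround for a language that evaluates both sides: nest the
   tests so the second one is only reached when the first one held. */
int nested_if(int a, int b)
{
    if (a < 6) {
        if (some_function(b) < 20) {
            return 1;
        }
    }
    return 0;
}

/* Returns how often some_function() has been called so far. */
int division_count(void)
{
    return divisions;
}
```

In C both variants leave "some_function()" alone when the first test fails. In LSL only the nested form does.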

Depending on what the function or the macro does, your "if" statements can affect performance. This is independent of using a preprocessor, but since you may be tempted to use macros in your sources when you use a preprocessor, you may be tempted to use them in "if" statements and diminish performance more than you otherwise would.

Avoid creating "if" statements with conditions composed in such a way that expressions are evaluated which should not be evaluated.

Imagine how much processing power could be saved if this bug was fixed.

Inlining Functions

Inlining functions is possible with cpp. Last night I looked at some code I´m working on and inlined a function. With a line in the state_entry event telling me what llGetFreeMemory() returns, it said 30368 before the function was inlined and 31000 after the function was inlined: "Huh? Over 600 bytes saved?!"

632 bytes is almost 1% of the total amount of memory a script gets and about 2% of what this script has free. The function doesn´t return anything and doesn´t take parameters. It was a part of the code I had put into its own function to avoid convoluted code, and there is only one place the function is called from: Those are ideal conditions to inline the function.

As suggested before, do your own detailed testing with "real" sources, i. e. not with something written for the purpose of testing. Garbage collection appears to happen when functions are returned from, and timing issues may be involved. I wonder if there is garbage collection (triggered) every time a scope is exited. This means that through inlining a function, your script may need more memory during runtime than it otherwise would --- or not.

Since potentially saving 1% of memory just by inlining one function is a lot, I decided that this article should have a section about it. It´s not only about saving memory: You probably have noticed that functions in LSL scripts eat memory big time. You may, out of necessity, have developed a bad style that avoids them as much as possible.

Please behold this --- rather artificial and intentionally not optimised --- example:

#include <lslstddef.h>

#define Memsay(...) llOwnerSay(fprintl(llGetFreeMemory(), "bytes free", __VA_ARGS__))

#define LIST numbers

#define iGetNumber(_n) llList2Integer(LIST, (_n))

#define foreach(_l, _n, _do) int _n = Len(_l); LoopDown(_n, _do);

#define iMany 10

#define INLINE_FUNCTIONS 1

list LIST;

#if INLINE_FUNCTIONS

#define multiply_two(n) \

{ \

Memsay("multiply_two start"); \

foreach(LIST, two, opf(iGetNumber(n), "times", iGetNumber(two), "makes", iGetNumber(n) * iGetNumber(two))); \

Memsay("multiply_two end"); \

}

#define multiply_one() \

{ \

Memsay("multiply_one start"); \

foreach(LIST, one, multiply_two(one)); \

Memsay("multiply_one end"); \

}

#define handle_touch() \

{ \

LIST = []; \

int n = iMany; \

LoopDown(n, Enlist(LIST, (int)llFrand(2000.0))); \

multiply_one(); \

}

#else

void multiply_two(const int n)

{

Memsay("multiply_two start");

foreach(LIST, two, opf(iGetNumber(n), "times", iGetNumber(two), "makes", iGetNumber(n) * iGetNumber(two)));

Memsay("multiply_two end");

}

void multiply_one()

{

Memsay("multiply_one start");

foreach(LIST, one, multiply_two(one));

Memsay("multiply_one end");

}

void handle_touch()

{

LIST = [];

int n = iMany;

LoopDown(n, Enlist(LIST, (int)llFrand(2000.0)));

multiply_one();

}

#endif

default

{

event touch_start(const int t)

{

Memsay("before multiplying");

handle_touch();

Memsay("after multiplying");

LIST = [];

Memsay("list emptied");

}

event state_entry()

{

Memsay("state entry");

}

}

When you make this with the functions not inlined, the output is like:

[07:39:09] Object: ( 50 kB ) ~> 55472 bytes free state entry

[07:39:15] Object: ( 50 kB ) ~> 55472 bytes free before multiplying

[07:39:15] Object: ( 49 kB ) ~> 55156 bytes free multiply_one start

[07:39:16] Object: ( 49 kB ) ~> 54976 bytes free multiply_two start

[...]

[07:39:20] Object: ( 49 kB ) ~> 54802 bytes free multiply_two end

[07:39:20] Object: ( 49 kB ) ~> 54802 bytes free after multiplying

[07:39:20] Object: ( 49 kB ) ~> 54802 bytes free multiply_one end

[07:39:20] Object: ( 50 kB ) ~> 54802 bytes free list emptied

With the functions inlined, the numbers look a bit different:

[07:39:41] Object: ( 51 kB ) ~> 57008 bytes free state entry

[07:39:51] Object: ( 51 kB ) ~> 57008 bytes free before multiplying

[07:39:51] Object: ( 51 kB ) ~> 56696 bytes free multiply_one start

[07:39:51] Object: ( 51 kB ) ~> 56696 bytes free multiply_two start

[...]

[07:39:56] Object: ( 51 kB ) ~> 56510 bytes free multiply_two end

[07:39:56] Object: ( 51 kB ) ~> 56510 bytes free multiply_one end

[07:39:56] Object: ( 51 kB ) ~> 56510 bytes free after multiplying

[07:39:56] Object: ( 51 kB ) ~> 56510 bytes free list emptied

This example has only three functions, and inlining them saves between 1536 (by state entry) and 1708 (after the script has run) bytes. I don´t understand how these numbers come together when I look at this page --- unless declaring a function does "automatically take up a block of memory (512 bytes)".

As said before: Test with your "real" sources.

When inlining functions, you need to make sure that the names of variables do not overlap. Otherwise, you may generate scripts in which your code unexpectedly works with different variables when it is suddenly inlined.

The LSL compiler will not give you error messages about already declared variables when you keep the enclosing brackets ("{}") from the function definition with the define: The opening bracket creates a new scope; the closing bracket closes the scope. (Creating a new scope doesn´t appear to use memory by itself. You could make use of that.)

This example keeps the brackets with the defines. This is to show that you can convert functions into macros with minimal effort and to keep the code identical for the test. The brackets from the functions are not needed with the defines. You should remove them from the defines when you inline functions. Removing them makes it less likely that overlapping variables go unnoticed.
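How the kept brackets can hide overlapping variable names can be sketched in plain C. The macro "add_three_scoped" and both functions are invented for this illustration; they are not part of "projects/inline".

```c
/* "Inlined" body that keeps its brackets: n lives in its own scope. */
#define add_three_scoped(total) \
{ \
    int n = 3; \
    (total) += n; \
}

/* Works as intended: the outer n is a different variable. */
int with_scope(void)
{
    int n = 10;
    int total = 0;
    add_three_scoped(total);
    return total + n;
}

/* Goes wrong silently: the argument has the same name as the variable
   inside the macro, so the inner n is changed instead of the outer one. */
int overlap(void)
{
    int n = 10;
    add_three_scoped(n); /* becomes { int n = 3; (n) += n; } */
    return n;
}
```

Without the brackets in the define, the second "int n" would be a redeclaration in the same scope and the compiler would reject it, which is the kind of error message that makes the overlap noticeable.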

You can find this example in the git repository that accompanies this article in "projects/inline".

Use Parentheses

When you look at this simple macro

#define times3(x) x * 3

it will multiply its parameter by 3. At least that´s what you think.

When your source is

#define times3(x) x * 3

[...]

int a = 5;

int b = times3(a);

llSay(0, (string)b);

[...]

it will print 15. Most of the time, your source will be more like this:

#define times3(x) x * 3

[...]

int a = 10;

int b = 5;

llSay(0, (string)(times3(a - b)));

[...]

You would expect it to print 15, but it will print -5:

10 - 5 * 3 == 10 - 15 == -5

You need to use parentheses:

#define times3(x) (x) * 3

(10 - 5) * 3 == 5 * 3 == 15

You need even more parentheses, because:

#define plus3times3(x) (x) * 3 + 3

[...]

int a = 10;

int b = 5;

int c = 20;

int d = 15;

llSay(0, (string)(plus3times3(a - b) * plus3times3(c - d)));

(10 - 5) * 3 + 3 * (20 - 15) * 3 + 3 == 15 + 3 * 5 * 3 + 3 == 15 + 45 + 3 == 63

Imagine you defined 'plus3times3' without any parentheses at all. You´d waste hours trying to figure out why your script yields incredibly weird results.

To get it right:

#define plus3times3(x) ((x) * 3 + 3)

[...]

int a = 10;

int b = 5;

int c = 20;

int d = 15;

llSay(0, (string)(plus3times3(a - b) * plus3times3(c - d)));

((10 - 5) * 3 + 3) * ((20 - 15) * 3 + 3) == 324

There is quite a difference between 63 and 324. Use parentheses. I also always use parentheses with conditions because it makes them unambiguous:

if(A || B && C || D > 500)

[...]

is ambiguous. What I meant is:

if((A || B) && (C || (D > 500)))

[...]

That is not ambiguous. Since "&&" usually takes precedence over "||", it´s different from the version without parentheses. It probably doesn´t matter with LSL because of the bug mentioned above, but I´m not even trying to figure it out. It does matter in C and perl at least because it has an effect on which of the expressions are evaluated and which are not. Simply write what you mean, with parentheses, and don´t let LSL get you to develop a bad style. When you have the above "if" statements in a macro, use parentheses around "A", "B", "C" and "D", for reasons shown above.

Save yourself the hassle and use parentheses. My LSL scripts tend to have lots of parentheses, a lot of them not strictly needed because they come from macro definitions. They don´t hurt anything. Not having them can hurt badly.
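The arithmetic from this section can be verified with a C compiler, since the replacement rules are the same. In this sketch the "_bad" and "_good" suffixes and the four wrapper functions are made up so that both variants of each macro can exist side by side.

```c
#define times3_bad(x) x * 3
#define times3_good(x) ((x) * 3)

#define plus3times3_bad(x) (x) * 3 + 3
#define plus3times3_good(x) ((x) * 3 + 3)

/* Expands to 10 - 5 * 3. */
int demo_times3_bad(void)
{
    return times3_bad(10 - 5);
}

/* Expands to ((10 - 5) * 3). */
int demo_times3_good(void)
{
    return times3_good(10 - 5);
}

/* Expands to (10 - 5) * 3 + 3 * (20 - 15) * 3 + 3. */
int demo_plus3times3_bad(void)
{
    return plus3times3_bad(10 - 5) * plus3times3_bad(20 - 15);
}

/* Expands to ((10 - 5) * 3 + 3) * ((20 - 15) * 3 + 3). */
int demo_plus3times3_good(void)
{
    return plus3times3_good(10 - 5) * plus3times3_good(20 - 15);
}
```

The four functions return -5, 15, 63 and 324, matching the calculations shown in this section.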

How to use cpp

Finally, there is the question of how to use cpp. Cpp has a lot of command line parameters, and one particularly useful when using cpp for LSL is '-P'. The manpage of cpp says:

- '-P': Inhibit generation of linemarkers in the output from the preprocessor. This might be useful when running the preprocessor on something that is not C code, and will be sent to a program which might be confused by the linemarkers.

The LSL compiler is one of the programs that would be confused by line markers. You can see what the line markers look like when you preprocess one of your scripts with

cpp your_script.lsl

By default, cpp writes its output to stdout, i. e. it appears on your screen. Since it isn´t necessarily useful to have your LSL scripts appear on your screen like that, you may want to redirect the output into a file:

cpp -P your_script.lsl > your_script.i

This creates, without the line markers, a file that contains your preprocessed source. This file is named "your_script.i".

It´s that simple.

You can now write a script and use defines and macros and preprocess it. You can log in with your sl client, make a box, create a new script in the box, open the script, click on the "Edit" button and replace the default script that shows up in your favourite editor with the one you wrote and preprocessed.

It´s not entirely convenient yet because you still need to replace the script manually. You may also want to include files into your script, like "lslstddef.h". You don´t want to keep another copy of "lslstddef.h" with all your scripts and include it with '#include "lslstddef.h"'. You only need one copy of "lslstddef.h", and it´s a good idea to keep it in a separate directory, perhaps with other headers you frequently use. You don´t want to specify in which directory you keep your "lslstddef.h" or other headers in all your sources: The directory might change.

Cpp has another parameter for this: '-I'. '-I' tells cpp where to look for files you include with something like '#include <lslstddef.h>'.

The difference between '#include "lslstddef.h"' and "#include <lslstddef.h>" is that '#include "lslstddef.h"' makes cpp look for "lslstddef.h" in the directory of the current source first, while "#include <lslstddef.h>" makes it look only in its list of include directories. You can add directories to that list with '-I'.

If you haven´t pulled (i. e. downloaded) the git repository that accompanies this article, please do so now.

When you look into the "projects" directory from the repository, you´ll find a few directories in it:

.

|-- bin

|-- clean-lsl.sed -> make/clean-lsl.sed

|-- components

|-- example-1

|-- include

|-- lib

|-- make

|-- memtest

|-- rfedip-reference-implementation

|-- template-directories

`-- your-script

The "bin" directory contains tools; "include" is for files like "lslstddef.h" which you may want to include into many of your sources. The "lib" directory is much like the "include" directory. The difference is that "include" is for header files which provide defines and macros, while "lib" is for files of which only parts end up in your sources, depending on the defines you set up. Those parts can be functions or defines or macros, and they are grouped into files by purpose.

For example, "lib/getlinknumbers.lsl" provides functions related to figuring out the link numbers of prims. "getlinknumbers.lsl" is a library of such functions, macros and defines. Since it´s a library, it´s in the "lib" directory: "lib" as in "library".

The "template-directories" directory is a template which you can copy when you start writing a new script. It contains directories for particular kinds of files, like the "src" directory where you put the source you´re writing. It also has a "lib" directory which can hold files you want to include into one or several of the sources in the "src" directory, but which are too specific to the particular project to put them into the general "projects/lib" or "projects/include" directory.

Now when preprocessing your source with cpp, you may want to include "lslstddef.h". Since you´re starting a new project, you first copy the template directories:

[~/src/lsl-repo/projects] cp -avx template-directories/ your-script

‘template-directories/’ -> ‘your-script’

‘template-directories/bin’ -> ‘your-script/bin’

‘template-directories/dbg’ -> ‘your-script/dbg’

‘template-directories/doc’ -> ‘your-script/doc’

‘template-directories/lib’ -> ‘your-script/lib’

‘template-directories/src’ -> ‘your-script/src’

‘template-directories/dep’ -> ‘your-script/dep’

‘template-directories/Makefile’ -> ‘your-script/Makefile’

[~/src/lsl-repo/projects]

Make sure that all files and directories from the "template-directories" are copied. When something is missing, the build system may not work.
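One way to check that nothing is missing is to compare the listings of both directory trees. This is only a sketch: it builds a made-up stand-in for "template-directories" in a scratch directory so that it can run anywhere, and it uses plain 'cp -a' instead of 'cp -avx' to keep the output quiet:

```shell
#!/bin/sh
# Stand-in for "projects/": a scratch directory with a made-up template.
projects=$(mktemp -d)
cd "$projects" || exit 1
mkdir -p template-directories/src template-directories/lib template-directories/dbg
touch template-directories/Makefile

# Copy the template into a new project directory.
cp -a template-directories/ your-script

# List both trees relative to their own root and compare the listings.
(cd template-directories && find . | sort) > template.list
(cd your-script && find . | sort) > copy.list

if diff template.list copy.list > /dev/null; then
    result="complete"
else
    result="incomplete"
fi
echo "copy is $result"
```

In the real repository, you would run only the two 'find' commands and the 'diff' from within "projects".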

Now you can use your favourite editor, write your script and save it as "projects/your-script/src/your-script.lsl":

#include <lslstddef.h>

default

{

event touch_start(int t)

{

afootell("hello cpp");

}

}

As described above, you run 'cpp -P your-script.lsl > your-script.i'. What you get looks like this:

[~/src/lsl-repo/projects/your-script/src] cpp -P your-script.lsl > your-script.i

your-script.lsl:1:23: fatal error: lslstddef.h: No such file or directory

#include <lslstddef.h>

^

compilation terminated.

[~/src/lsl-repo/projects/your-script/src]

This tells you that cpp didn´t find "lslstddef.h". You need to tell cpp where to find it:

[~/src/lsl-repo/projects/your-script/src] cpp -I../../include -P your-script.lsl > your-script.i

[~/src/lsl-repo/projects/your-script/src]

Using '-I', you tell cpp to look for files that are to be included in the directory '../../include'. Since you do this from within the directory 'projects/your-script/src', cpp will look into 'projects/include' --- where it can find "lslstddef.h".

You can now look at the outcome:

[~/src/lsl-repo/projects/your-script/src] cat your-script.i

default

{

touch_start(integer t)

{

llOwnerSay("(" + (string)( (61440 - llGetUsedMemory() ) >> 10) + "kB) ~> " + "hello cpp");

}

}

[~/src/lsl-repo/projects/your-script/src]

So much effort to finally create such a little LSL script? No --- the effort here lies in giving you a reference which is supposed to help you to better understand the build system.

The effort to create an LSL script is no more than copying the "template-directories", saving your source in the "src" directory of the copy and running 'make'. You have a build system that does everything else for you when you run 'make'.

Make

As the GNU Make documentation nicely puts it, Make "is a tool which controls the generation of executables and other non-source files of a program from the program's source files."

Make uses a so-called Makefile. It´s called Makefile because it tells Make what it needs to do to generate files from files, and under which conditions which files need to be created.

Suppose you have written a source "example.lsl". It includes "lslstddef.h" and uses a function defined in "getlinknumbers.lsl". Without Make, you´d have to run cpp to preprocess your source and use sed to clean it up. Then you´d have to make an entry in the look-up table so you can have the script replaced automatically. If you want to see the debugging version of the script, you´d use astyle to format it nicely. Since you want to use the compressed version of the script, you´d use i2o.pl to create the compressed version. Finally, you modify the source to fix a bug you found when testing the script. Except for making an entry in the look-up table, you´d have to do all the steps again.

Suppose you modify "lslstddef.h" or "getlinknumbers.lsl". You´d have to remember that you did this and that you need to process your source again. You might have several sources because the object you´re working on has several scripts. Some of them do not include "getlinknumbers.lsl". Which ones do you need to process again because they include a file you have modified, and which ones not?

Make figures out from the Makefile how to process your sources. It also figures out which source files do need to be processed again and which ones don´t when files the sources include have been modified. Instead of running a bunch of commands, you simply run 'make'.
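The following sketch shows this behaviour in miniature, assuming GNU Make is installed. It uses a tiny stand-in Makefile, not the one from the repository, and the file names "a.lsl", "b.lsl" and "common.h" are made up. A plain 'cp' stands in for the real preprocessing commands, and the dependency of "a.i" on "common.h" is written by hand here:

```shell
#!/bin/sh
# Sketch only: a tiny stand-in Makefile, not the one from projects/make/.
work=$(mktemp -d)
cd "$work" || exit 1

printf '// shared definitions\n' > common.h
printf '#include "common.h"\ndefault { }\n' > a.lsl
printf 'default { }\n' > b.lsl

# a.i depends on common.h in addition to a.lsl; b.i depends only on b.lsl.
# printf writes the tab that Make requires at the start of a recipe line.
printf 'all: a.i b.i\n\na.i: common.h\n\n%%.i: %%.lsl\n\t@echo "processing $<"; cp $< $@\n' > Makefile

first=$(make)        # nothing exists yet, so both files are processed
second=$(make)       # nothing has changed, so nothing is processed

sleep 1              # make sure the next timestamp is visibly newer
touch common.h       # simulate editing the shared header
third=$(make)        # only a.i is out of date now

echo "first run:  $first"
echo "second run: $second"
echo "third run:  $third"
```

Only the source that includes the modified header is processed again in the third run; "b.lsl" is left alone.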

You do not need to understand Make or Makefiles to make creating LSL scripts easier. You can find a suitable Makefile in the git repository that accompanies this article. The Makefile is "projects/make/Makefile".

This Makefile is symlinked into the template-directories. There is only one Makefile for all your projects. It works for all of them. This has the advantage --- or disadvantage --- that when you change the Makefile, the changes apply to all your projects.

Should you need to adjust the Makefile because a particular project has special requirements, remove the symlink to the Makefile from the project´s directory. Copy make/Makefile into the project´s directory and then modify the copy.
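The steps above can be sketched as follows. The sketch builds a made-up stand-in for the "projects" directory in a scratch location so that it can run anywhere; the project name "special-project" and the Makefile contents are placeholders:

```shell
#!/bin/sh
# Scratch stand-in for "projects/"; the Makefile content is a placeholder.
projects=$(mktemp -d)
cd "$projects" || exit 1
mkdir make special-project
printf '# shared Makefile\n' > make/Makefile
ln -s ../make/Makefile special-project/Makefile

# The two steps, run inside the project directory:
cd special-project || exit 1
rm Makefile                    # removes only the symlink, not make/Makefile
cp ../make/Makefile Makefile   # a private copy that can be modified freely

# Changing the copy leaves the shared Makefile untouched.
printf '# project-specific change\n' >> Makefile
```

In the real repository, only the 'rm' and 'cp' lines are needed, run from within the directory of the project in question.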

How to use Make

Enter the directory of the project you are working on and run 'make'. That´s all. The section "Repository" has some details.

You can run make from within emacs: M-x compile. Emacs will show you the output in a buffer. If there are error messages, you can move the cursor to the error message and press enter and emacs shows you the line in the file that triggered the error message. To compile again, you can use 'recompile': M-x recompile. You can make a key-binding for that with something like '(global-set-key [C-f1] 'recompile)' in your ~/.emacs and recompile your source conveniently from within your favourite editor --- which by now is, of course, emacs --- by simply pressing Ctrl+F1.

Make "your-script.lsl"

Assume you want to try out the "your-script.lsl" source from above: Enter the directory for the project ("projects/your-script/") and run 'make' --- or load the source into emacs and run make from within emacs. I´ll do it with emacs:

C-x C-f ~/src/lsl-repo/projects/your-script/src/your-script.lsl

M-x compile

Emacs now asks you for the command to use and suggests 'make -k'. This won´t work because Make needs to be run from within one directory above, i. e. "~/src/lsl-repo/projects/your-script/" rather than "~/src/lsl-repo/projects/your-script/src". So instead of 'make -k', you need to use 'cd ..; make -k'. Just edit what emacs suggests, then press Enter.

Emacs will check if it has any files that haven´t been saved yet because you might have forgotten to save your source. If there are files to save, it will ask you whether to save them or not. Then emacs will run make and open a new buffer to show you the output of 'make':

-*- mode: compilation; default-directory: "~/src/lsl-repo/projects/your-script/" -*-

Compilation started at Sat Jan 11 15:21:51

cd ..; make -k

generating dependencies: dep/your-script.di

generating dependencies: dep/your-script.d

preprocessing src/your-script.lsl

8 153 dbg/your-script.i.clean

Compilation finished at Sat Jan 11 15:21:52

When you need to process your script again, you can also do this within emacs: M-x recompile. I have bound the function to C-f1, so I´d just press Ctrl+F1. For an example of how to do such a keybinding, please see "emacs/emacs" in the git repository that accompanies this article.

To try out the script you just created in sl, you can switch to your sl client and make a box. Make a new script in the box and open the new script. In the built-in script editor, type into the first line:

// =your-script.o

Now click on the "Edit" button of the built-in script editor. Provided that you successfully prepared the automatic replacement of your scripts as described above, the compressed version of your script appears in the built-in script editor. It will be compiled by the sl server and run --- or you will see an error message in the built-in script editor.

astyle

Astyle is a nice tool to format your code so that it´s easier to read. Since you write your sources so that they are easy to read anyway, you may not want to use it to reformat your sources --- unless some lines are so long that they don´t fit very well on wiki pages maybe.

However, the output of cpp isn´t too readable. Therefore, the build system uses astyle to reformat it when it generates debugging versions of the scripts (in dbg/*.i). This is entirely optional.

sed

Sed is used by the build system for a number of things. One of these things is what you might call postprocessing: Preprocessing your sources can leave artifacts in the output of cpp due to the way macros (see "lslstddef.h") are used.

Sed is used to remove these artifacts, i. e. lines like "};" and ";". A closing brace doesn´t need to be followed by a semicolon, and lines that are empty except for a semicolon are superfluous. Postprocessing with sed replaces "};" with "}" and removes empty lines.
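To illustrate the idea, here is a small sketch of such a cleanup. The sed expressions are written for this example only and are not copied from "clean-lsl.sed", so the rules in the repository may well differ; the input is made up as well:

```shell
#!/bin/sh
# Sketch only: these expressions illustrate the idea and are NOT copied
# from projects/make/clean-lsl.sed.
input='default
{
    touch_start(integer t)
    {
        llOwnerSay("hello");
        ;
    };

};'

# 1st expression: turn a line consisting of "};" into "}", keeping the indent.
# 2nd expression: delete lines that contain nothing but a semicolon.
# 3rd expression: delete empty lines.
cleaned=$(printf '%s\n' "$input" | sed \
    -e 's/^\([[:space:]]*\)};[[:space:]]*$/\1}/' \
    -e '/^[[:space:]]*;[[:space:]]*$/d' \
    -e '/^[[:space:]]*$/d')

printf '%s\n' "$cleaned"
```

The sketch only touches lines that consist of nothing but the artifact, so a semicolon that ends a real statement is left alone.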

The rules used by sed for this are in a so-called script which you can find as "projects/make/clean-lsl.sed" in the git repository that accompanies this article.

i2o.pl

The perl script i2o.pl comes from the source of the Open Stargate Network stargates.

It "compresses" your scripts by removing whitespace and by shortening the names of variables. Since variables are renamed, the compression cannot be undone entirely: astyle can restore the whitespace, but the original names are lost. Perhaps "shrinking" is a better word for this than "compressing".

The point of shrinking your scripts is saving memory. You cannot save (i. e. upload) a script (or contents of a notecard) that takes more than 64kB: The script (or the notecard) gets truncated, probably by the sl server. Cpp already removes comments and blank lines from the source, and i2o.pl shrinks the script further.
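If you want to know whether a script is getting close to that limit before you upload it, you can check the file size. The sketch below assumes that "64kB" means 65536 bytes, which this article does not spell out; the helper name "fits_limit" and the two test files are made up:

```shell
#!/bin/sh
# Assumption: "64kB" is taken to mean 65536 bytes; adjust if that is wrong.
limit=65536

# Hypothetical helper: succeeds when the file is small enough to upload.
fits_limit() {
    size=$(( $(wc -c < "$1") ))
    [ "$size" -le "$limit" ]
}

# Demonstration with two made-up files in a scratch directory.
work=$(mktemp -d)
printf 'default { }\n' > "$work/small.o"
head -c 70000 /dev/zero | tr '\0' 'x' > "$work/large.o"

for f in "$work/small.o" "$work/large.o"; do
    if fits_limit "$f"; then
        echo "$(basename "$f"): fits"
    else
        echo "$(basename "$f"): too large, would be truncated"
    fi
done
```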

Side effects of shrinking are that sometimes a script appears to need less script memory to run, and shrunken scripts appear to run a little faster. I haven´t really tested either, and neither seems to make sense because the scripts are compiled to byte-code anyway. Test it if you like; it would be interesting to see the results. I always use the shrunken version unless I need to look at the debugging version, and it works great.

git

Git isn´t really involved here, other than that the build system and examples described and used in this article --- and the article itself --- are available as a git repository for your and my convenience. It´s convenient for you because you can easily download it.

It´s convenient for me because git makes it easy to keep the repository up to date. If several people contributed to what is in the repository, it would be convenient for them as well because git makes it easy to put together the work done by many people on the same thing.

It can be convenient --- or useful --- for you because it makes it easy to keep track of changes. When you create a git repository from or for the sources of a project you are working on, you can apply (commit) every change to the repository, accompanied by an entry in a log which helps you to figure out what you have done.
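As a small sketch of what that looks like in practice, assuming git is installed: the throwaway repository, the file name "example.lsl", the identity and the commit messages below are all made up for this demonstration.

```shell
#!/bin/sh
# Sketch with a throwaway repository in a scratch directory.
work=$(mktemp -d)
cd "$work" || exit 1
git init -q

# Pass an identity on the command line so the sketch does not depend on
# any global git configuration.
commit() {
    git -c user.name="Example" -c user.email="example@example.invalid" \
        commit -q -m "$1"
}

printf 'default { }\n' > example.lsl
git add example.lsl
commit "Add empty example script"

printf 'default { state_entry() { } }\n' > example.lsl
git add example.lsl
commit "Add state_entry handler"

# The log shows one entry per committed change, newest first.
log=$(git log --format=%s)
echo "$log"
```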