Brainstorming

Dzonatas Sol


C) sim4802.eu.agni.lindenlab.com

URL "A" points directly to the simulator, as it does now. URL "B" points to a proxy in the United States. URL "C" points to a proxy in Europe. The simulator or the proxy may redirect the viewer to the best URL. This redirection ability allows a simple form of on-demand traffic reshaping. All three URLs, A, B and C, access the same simulator (sim4802).
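The redirection step can be sketched as follows. This is a minimal illustration under stated assumptions: the `Endpoint` type, the area tags, the load figures and the "least-loaded endpoint in the viewer's own area" rule are all hypothetical, not a policy the proposal specifies.

```python
from dataclasses import dataclass
from typing import List

@dataclass(frozen=True)
class Endpoint:
    label: str    # "A", "B" or "C", as in the list above
    area: str     # hypothetical area tag: "direct", "us" or "eu"
    load: float   # hypothetical current load, 0.0 (idle) to 1.0 (saturated)

def choose_endpoint(viewer_area: str, endpoints: List[Endpoint]) -> Endpoint:
    """Return the endpoint a viewer should be redirected to.

    Assumed policy: prefer endpoints in the viewer's own area, otherwise
    consider all of them, then pick the least-loaded candidate. Every
    endpoint fronts the same simulator, so any choice reaches the same
    region state.
    """
    local = [e for e in endpoints if e.area == viewer_area]
    candidates = local or endpoints
    return min(candidates, key=lambda e: e.load)

endpoints = [
    Endpoint("A", "direct", 0.9),
    Endpoint("B", "us", 0.4),
    Endpoint("C", "eu", 0.2),
]
print(choose_endpoint("eu", endpoints).label)    # C
print(choose_endpoint("us", endpoints).label)    # B
print(choose_endpoint("asia", endpoints).label)  # C: no local proxy, least loaded overall
```

Because every endpoint fronts the same simulator, swapping in a different rule (for example measured latency instead of load) only changes which URL the viewer is sent to, not what it sees.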

Future Integration

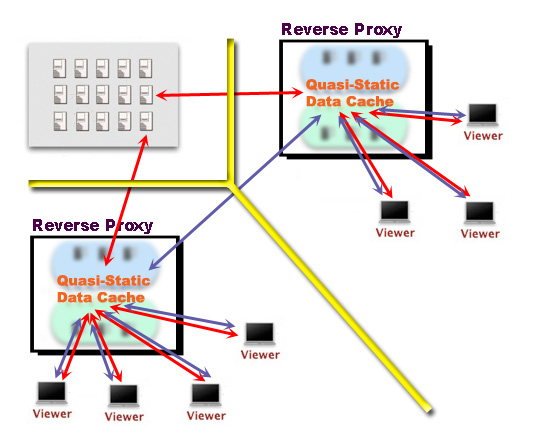

The conceptual design is based on well-known paravirtualized machines for further integration. In this design, we paravirtualize the grid and not just part of the simulation. The immediate implementation allows us to step gradually towards this future design. We create the common paravirtual API structure and the virtual machine that computes the simulation. We could use an existing, well-known paravirtual machine or create one specifically to run the simulation; a well-known paravirtual machine has the advantage of general stability. The hardware layer becomes completely abstracted and is no longer part of the architectural grid design. The state of any simulation is transportable across the paravirtual grid. At this point, the reverse proxy has evolved into a virtual machine.

Revision as of 15:17, 12 October 2007


This page is for brainstorming about the upcoming architecture. Add your thoughts here in no particular format: use cases, requirements, or scenarios. Entries shouldn't be too long, but long enough to get your idea across. Implementation details are welcome too, but in the lower section.

Usage examples and requirements

General architecture

- always keep the scary numbers of Project_Motivation in mind, i.e. design for scalability.

- allow running a small grid on my laptop.

- allow big-iron sites (e.g. LL) to act as a proxy cache for a small but authoritative private grid.

- allow plug-ins as a general principle for extensibility by attachment; this should apply at all levels.

- Talk to other online virtual worlds to discuss interconnectivity, at least to some degree. For instance, with one online ID and perhaps registration with each virtual world, residents could move seamlessly from Second Life to World of Warcraft or any other virtual world, in the same way web surfers go from one website to another. In short, let there eventually be one huge interconnected 3D environment, with each world as distinctive as a website on the net.

- Integrate within Second Life a platform that allows residents to create their own virtual world, which can then be connected via a personal server to the grid. If this is not entirely possible, then provide a download so a world can be created offline, later uploaded to a personal server and then added to the grid. The development of such software could be a challenge to present to the many resident coders. People with technical knowledge should not be the only ones able to add their own sim to the grid; the tools should be developed so that everyone can do so.

- Enable short-term sandboxes launched from within a viewer. Think of a traditional LAN party for a multiplayer game: this would suit short social events and the like. For 24/7 sims, allow hosting by 3rd parties, which (like web hosts) charge for their server space and administration.

- Make Second Life more democratic. Rather than having rules and regulations imposed on residents, allow democratically elected members to represent residents' wishes and aspirations, and even let such individuals govern regions. Essentially, Linden Lab should let go of its junta-like hold on Second Life and allow residents to govern themselves. In its present state, Second Life does not represent a free or democratic entity, and this lack of freedom is becoming ever more tangible to residents.

Interoperability

- allow different formats for objects or assets in general. You might need different viewers for that (e.g. WoW, SL and EVE are quite different in their structure), but you should at least be able to share an identity. You might need different agents, though: in games, for example, you want to store game information in the profile, and the profile is attached to an agent. (See Functional refactoring of viewer, below, to help with this.)

- for interoperability, objects at the protocol level must be self-describing to allow transformation to the local format.

- allow different types of regions and region formats. Again as an example, EVE and WoW have different understandings of how to partition the world and how to implement it.

- look at things like Multiverse or Metaplace to see how these can be interconnected.

- Use some common IM format to IM between agents. Jabber comes to mind, but the format should probably also be pluggable. Maybe integrating existing open-source multi-protocol clients is an option.
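The requirement that objects be self-describing at the protocol level can be illustrated with a small sketch, assuming each object carries a format name next to its data and each world registers transformers into its local format. The format names (`sl-prim/1`, `mesh/1`), the `register`/`to_local` helpers and the toy box-to-mesh conversion are hypothetical.

```python
from typing import Any, Callable, Dict, Tuple

Obj = Dict[str, Any]
Transformer = Callable[[Obj], Obj]

# Registry of known conversions, keyed by (source format, target format).
# Format names such as "sl-prim/1" and "mesh/1" are hypothetical.
_TRANSFORMERS: Dict[Tuple[str, str], Transformer] = {}

def register(source: str, target: str) -> Callable[[Transformer], Transformer]:
    """Decorator that registers a transformer from one format to another."""
    def wrap(fn: Transformer) -> Transformer:
        _TRANSFORMERS[(source, target)] = fn
        return fn
    return wrap

def to_local(obj: Obj, local_format: str) -> Obj:
    """Convert a self-describing object into the local format."""
    source = obj["format"]          # the object says what it is
    if source == local_format:
        return obj
    transform = _TRANSFORMERS.get((source, local_format))
    if transform is None:
        raise ValueError(f"no transformer from {source!r} to {local_format!r}")
    return {"format": local_format, "data": transform(obj["data"])}

@register("sl-prim/1", "mesh/1")
def box_prim_to_mesh(data: Obj) -> Obj:
    # Toy conversion: a box prim becomes the 8 corner vertices of a mesh.
    hx, hy, hz = (s / 2 for s in data["size"])
    corners = [(sx * hx, sy * hy, sz * hz)
               for sx in (-1, 1) for sy in (-1, 1) for sz in (-1, 1)]
    return {"vertices": corners}

prim = {"format": "sl-prim/1", "data": {"shape": "box", "size": (2.0, 2.0, 2.0)}}
mesh = to_local(prim, "mesh/1")
print(mesh["format"], len(mesh["data"]["vertices"]))  # mesh/1 8
```

New formats can then be supported by registering another transformer, without changing the objects already in circulation.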

Commerce

Ensure that the entities used in commerce are supported:

- currencies or other forms of payment accepted (object trading, external payment systems)

- exchange rates and the like

- timestamped transaction records (this was exchanged for that)

- unforgeable documents for contracts
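Timestamped transaction records can be made tamper-evident by chaining them, as in the sketch below. It uses an HMAC with a shared key purely as a stand-in: that only lets holders of the key detect changes, so contracts that are unforgeable between parties who share no secret would need public-key signatures instead. The record fields and the `make_record`/`verify_chain` names are assumptions for illustration.

```python
import hashlib
import hmac
import json
import time
from typing import List, Optional

def make_record(key: bytes, prev_mac: str, gave: str, got: str,
                ts: Optional[float] = None) -> dict:
    """Create a timestamped "this was exchanged for that" record.

    Each record includes the MAC of the previous one, so removing or
    reordering records breaks the chain.
    """
    body = {"ts": time.time() if ts is None else ts,
            "gave": gave, "got": got, "prev": prev_mac}
    payload = json.dumps(body, sort_keys=True).encode()
    body["mac"] = hmac.new(key, payload, hashlib.sha256).hexdigest()
    return body

def verify_chain(key: bytes, records: List[dict]) -> bool:
    """Check every MAC and every back-link in a list of records."""
    prev = ""
    for rec in records:
        body = {k: v for k, v in rec.items() if k != "mac"}
        payload = json.dumps(body, sort_keys=True).encode()
        expected = hmac.new(key, payload, hashlib.sha256).hexdigest()
        if body["prev"] != prev or not hmac.compare_digest(expected, rec["mac"]):
            return False
        prev = rec["mac"]
    return True

key = b"shared-secret-for-illustration-only"
r1 = make_record(key, "", "100 L$", "1 wooden chair", ts=1192200000.0)
r2 = make_record(key, r1["mac"], "1 wooden chair", "50 L$", ts=1192200060.0)
print(verify_chain(key, [r1, r2]))   # True
r1["got"] = "2 wooden chairs"        # tamper with the first record
print(verify_chain(key, [r1, r2]))   # False
```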

Objects and Assets

- allow objects to be rezzable only in certain regions (adds to the agent's restrictions)

- AND/OR limit object rezzing to specific groups of objects (give region owner ability to control what content is allowed in their regions)

- allow assets to be transferred between agent domains

- allow assets to be accessed from multiple agent domains

- allow truly distributed asset storage

- Think beyond the limitations of prims and sculpties by allowing items made in 3D modelling software to be imported directly onto the grid. Other online virtual 3D environments allow this.

- allow licenses, such as Creative Commons (CC) licenses, to be attached to objects. This could be a license field in the protocol.

- allow proxies for assets. People running their own servers might want to reduce disk access on the sim machines by adding a second machine running a proxy. Proxies greatly improve response times on heavily accessed web servers, so they should work for high-traffic sims, too.

- allow geometry standards to be used for objects. SL doesn't have a tool like SketchUp, and so needs to be more open to other existing geometry creation tools (sculpties are insufficient).

- It is likely that *no* geometry standards will ever be sufficient. Therefore, don't waste time on defining geometry standards. Instead, focus on designing a way of making the suite of available geometry standards extensible simply through a posteriori addition, without requiring a priori definition.
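The asset proxy idea in this section is essentially a read-through cache in front of the slower asset store. A minimal sketch, assuming an in-memory least-recently-used (LRU) policy and a hypothetical `fetch` callback standing in for the disk or network read on the sim machine:

```python
from collections import OrderedDict
from typing import Callable, List

class AssetProxy:
    """Read-through LRU cache placed in front of a slower asset store."""

    def __init__(self, fetch: Callable[[str], bytes], capacity: int = 1024) -> None:
        self._fetch = fetch                  # the slow read this proxy shields
        self._capacity = capacity
        self._cache: "OrderedDict[str, bytes]" = OrderedDict()
        self.hits = 0
        self.misses = 0

    def get(self, asset_id: str) -> bytes:
        if asset_id in self._cache:
            self._cache.move_to_end(asset_id)   # mark as most recently used
            self.hits += 1
            return self._cache[asset_id]
        self.misses += 1
        data = self._fetch(asset_id)
        self._cache[asset_id] = data
        if len(self._cache) > self._capacity:
            self._cache.popitem(last=False)     # evict the least recently used
        return data

backend_reads: List[str] = []

def slow_store(asset_id: str) -> bytes:
    backend_reads.append(asset_id)
    return f"texture:{asset_id}".encode()

proxy = AssetProxy(slow_store, capacity=2)
for aid in ["t1", "t2", "t1", "t3", "t1", "t2"]:
    proxy.get(aid)
print(proxy.hits, proxy.misses, backend_reads)  # 2 4 ['t1', 't2', 't3', 't2']
```

With a capacity of only two entries, six requests still cost just four backend reads; a realistic capacity on a dedicated proxy machine would absorb far more of the repeated texture traffic.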

Permissions

- What does a creator need to define? It should be possible for the creator to define, e.g., that an object is only valid within a certain grid. The question here might be what makes up a grid; probably it means a certain agent domain. What does that mean to the user, though? If the user bought that object, I'd think that he/she should really own it and be able to copy it to other agent domains and other grids. The problem might be what happens if these have weaker security policies and it gets copied there. (Of course, in general there is no way to prevent that anyway; I am talking here about different policies for different grids.) Should there be a new permission that says "copy to other grids"? When buying such an object, you can then decide whether it's worth it or not.

- We already discussed DRM in general, and the opinion was that it should not be hardwired into the protocol. Of course, it should be possible to plug it in if people think it makes sense.

- What about being able to link copies to their original object? Right now it is annoying that an object cannot be mod, copy and trans, where "trans" would mean that all the copies are deleted once you give the object to another person. Because of that, one permission is usually missing: either you cannot copy it or you cannot transfer it. So here is how it might work:

* Agent buys an object which is full perm, but is not allowed to sell multiple copies of it, only the one bought.

* Agent rezzes multiple copies and possibly modifies them.

* Agent gives the object to another agent (or sells it). When he does this, all the copies are deleted.

It could be implemented as links to the original object, or the object itself could be treated as the group of all its rezzed copies. This ensures the user can do whatever he wants with it, but cannot make money by selling multiple copies he did not buy.

- Make derivations possible, as IMVU does: you buy an object and modify it, and you can then resell it, but the original creator still gets a fraction of each sold copy.

- For all the mentioned permissions, the protocol should provide a means of specifying these capabilities. If you want to sell derivations, the other agent domain also needs to support it and needs to be trusted. Possible new permissions could be 'sell copies' and 'franchise', with an additional minimum percentage defined by the creator (modifiable upwards by the next owner, but not downwards). If an object is copy and transfer, the seller's copies are deleted on transfer; if it is set to 'sell copies', the seller's copies are not deleted. A minimum price might be interesting, too.

- Access controls beyond the walls of a single managed protection domain like the Linden grid are actually doomed to failure, for numerous reasons detailed by security and cryptography heavyweights who have explored DRM. Nevertheless, extending existing SL object permissions to "trusted" external sites is still perfectly feasible ... but it would be a mistake to believe that such access restrictions would be anything but temporary. This fact will have to be made clear to asset creators to avoid legal problems and discontent later on, as a social measure. As a limited technical measure, a Local_Distribution_Only permissions bit will probably be required as well.
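The linked-copy, 'sell copies', 'franchise' and Local_Distribution_Only ideas from this section can be sketched together as a small permissions model. Everything here is hypothetical: the `Perm` flags beyond MOD/COPY/TRANS, the `OwnedObject` class and the rule that a transfer without SELL_COPIES removes the seller's whole group are one possible reading of the proposals, not an existing SL API. As noted above, such checks only hold inside domains that honour them.

```python
from dataclasses import dataclass, field
from enum import Flag, auto
from typing import List, Optional

class Perm(Flag):
    MOD = auto()
    COPY = auto()
    TRANS = auto()
    SELL_COPIES = auto()              # proposed: seller keeps copies on transfer
    LOCAL_DISTRIBUTION_ONLY = auto()  # proposed: may not leave the local grid

@dataclass
class OwnedObject:
    """One owner's holding: an object plus every copy rezzed from it."""
    owner: Optional[str]
    perms: Perm
    creator_share: float = 0.0        # proposed 'franchise' minimum, 0.0 to 1.0
    copies: List[str] = field(default_factory=list)

    def rez_copy(self, where: str) -> None:
        if Perm.COPY not in self.perms:
            raise PermissionError("object is no-copy")
        self.copies.append(where)

    def raise_share(self, share: float) -> None:
        # The next owner may raise the creator's cut, never lower it.
        if share < self.creator_share:
            raise ValueError("creator share can only be raised")
        self.creator_share = share

    def transfer(self, new_owner: str, same_grid: bool = True) -> "OwnedObject":
        if Perm.TRANS not in self.perms:
            raise PermissionError("object is no-transfer")
        if not same_grid and Perm.LOCAL_DISTRIBUTION_ONLY in self.perms:
            raise PermissionError("object may not leave this grid")
        if Perm.SELL_COPIES not in self.perms:
            # Linked copies: the seller's whole group goes away with the sale.
            self.copies.clear()
            self.owner = None
        return OwnedObject(new_owner, self.perms, self.creator_share)

chair = OwnedObject("alice", Perm.MOD | Perm.COPY | Perm.TRANS, creator_share=0.10)
chair.rez_copy("living room")
chair.rez_copy("porch")
bobs_chair = chair.transfer("bob")
print(chair.owner, chair.copies, bobs_chair.owner)  # None [] bob
```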

Identity

- identity should be pluggable

- [Please build a list of desired identity verification systems]

- OpenID

- various grades of verification should be possible

- RL Identity Verification:

- "Is the user exactly who he/she claims he/she is?"

- Very strong verification. Permanently links ID of account to real-world ID of user.

- Age Verification:

- "Is the user old enough to be on this system?"

- Weak verification. Minimum amount needed to maintain compliance with child online access laws.

- Unique Verification:

- "Is this user unique, or is it an Alt?"

- Weak verification. Minimum needed to enforce bans due to TOS violations.

- Very difficult to enforce due to ease of changing commonly-used identifiers: IP address, MAC address, hardware serial numbers/profiles, etc.

- Virtual Identity Verification:

- In a multi-site, multi-grid, multi-world and multi-national distributed architecture, RL verification is not feasible.

- RL identity must be safeguarded for residents living under conditions where human rights are not protected.

- Relevant question: "Is this person XXXX the same XXXX I was talking to yesterday on a different world?"


- Verification must not require sensitive data to pass through insecure systems, nor require storage of sensitive data (the link between virtual identity and RL identity is sensitive data, too).

- It should be possible to attach an unlimited number of agents from different domains to one identity.
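Pluggable identity verification with several grades can be sketched as a registry of verifier plug-ins, each confirming one property of an identity. The `Identity` class, the property names (`age`, `rl`), the example agent domains and the claim format are assumptions for illustration; note that the identity stores no RL data, only the inputs that plug-ins check.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, Set

@dataclass
class Identity:
    """One identity with any number of agents from different agent domains."""
    handle: str
    agents: Set[str] = field(default_factory=set)      # e.g. "ann@grid-a.example"
    claims: Dict[str, str] = field(default_factory=dict)

Verifier = Callable[[Identity], bool]
VERIFIERS: Dict[str, Verifier] = {}   # property name -> installed plug-in

def verifier(prop: str) -> Callable[[Verifier], Verifier]:
    """Decorator that installs a verification plug-in for one property."""
    def wrap(fn: Verifier) -> Verifier:
        VERIFIERS[prop] = fn
        return fn
    return wrap

@verifier("age")
def age_from_claim(ident: Identity) -> bool:
    # Placeholder: a real plug-in would validate a claim signed by an age-check provider.
    return ident.claims.get("adult") == "yes"

def verified(ident: Identity) -> Set[str]:
    """Properties that the currently installed plug-ins confirm."""
    return {prop for prop, check in VERIFIERS.items() if check(ident)}

def may_enter(ident: Identity, required: Set[str]) -> bool:
    """A region admits the identity only if every required property is verified."""
    return required <= verified(ident)

ann = Identity("ann", {"ann@grid-a.example", "ann@grid-b.example"}, {"adult": "yes"})
print(may_enter(ann, {"age"}))        # True
print(may_enter(ann, {"age", "rl"}))  # False: no RL-identity plug-in is installed
```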

Privacy and control

- Motivation

- The single-grid SL implemented privacy and control in a manner not untypical for centralized, managed systems, effectively denying privacy as a concept and exercising control (light-handed, but nevertheless control) over world inhabitants. The policy also led to implicit responsibility for the actions of inhabitants, on the basis of "if you police it, then you are responsible for any infractions".

- In a worldwide, distributed, and massively scaled universe of interconnected 3rd party worlds and grids, this current approach is no longer tenable, for a number of reasons:

- RL identity verification is neither generally feasible nor in many instances desirable (see Identity, above).

- Attempts to enforce strong guarantees suffer from the same fundamental weakness as DRM, and lead to a similar arms race.

- The laws of California are not generally of interest in the rest of the world.

- The operators of individual grids will not in general have control rights elsewhere, let alone legal jurisdiction.

- In some countries more than others, citizens place extremely high value on individual privacy, often protected by law.

- Privacy is relevant to personal security as well, thwarting stalkers and even being helpful for domestic security.

- Social mores vary immensely across the world, so imposing those of one society on another is misplaced and generally not desirable.

- Open source denies the possibility of adding covert wiretaps and similar measures, so distributed monitoring is infeasible.

- Policing and assuming responsibility for the actions of others is itself a recipe for discontent and ultimately failure.

- The above suggests that attempting to implement the privacy and control policies of the original SL in the new architecture would not be an appropriate goal. Instead, privacy and control might better be focussed in a different direction, as below.

- Requirements

- The following requirements could be well suited to a universe of multiple distributed domains and high cultural diversity:

- Implement strong(er) privacy guarantees

- Although "evil" attached worlds and grids will unavoidably be able to subvert privacy measures within their own domains, by default there should be a presumption of privacy to cater for those societies where privacy is valued. This includes:

- Built-in volumetric barriers, for example Argent Stonecutter's remarkably simple [Parcel Basements].

- End-to-end encryption for non-vicinity communication channels.

- Encryption of client-server traffic to defeat in-transit monitoring.

- Disclaim control / gain immunity of common carrier

- "Common carrier" immunity has historically been recognized only for PTTs, and has been slow in moving into the realm of ISPs and higher-level service providers. Nevertheless, common sense suggests that you cannot control what you cannot see, and you cannot reasonably be held accountable for what you do not control. Barriers to visibility added for the sake of privacy can therefore also deliver a useful defence and tangible business benefits, particularly in domains where personal freedoms are expected.

- Limit the amount of logging, and keep permanent records for financial transactions alone.

- Replace "guardian responsibility" by "personal responsibility":

- Increase the power of parcel and region owners to detect the sources of abuse and remove it.

- Increase the power of individuals to make unwanted effects/objects disappear from client visibility.

- Add arbitrary owner-defined land tags to provide flexible classification, and land entry barriers.

- Add personal interest tagging, to trigger land barriers and avoid unwanted surprises.

- While the above are technical measures relevant to architecture, many coercive forces are arrayed against privacy and towards stronger control over virtual citizens, so be prepared for hard times and pressure.
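Owner-defined land tags and personal interest tags could combine into an entry decision like the one sketched below. The tag names, the three outcomes and the precedence (the visitor's own avoid list first, then the owner's barrier) are assumptions, not part of any existing protocol.

```python
from typing import Set

def entry_decision(land_tags: Set[str], barrier_tags: Set[str],
                   avoid: Set[str], opted_in: Set[str]) -> str:
    """Decide what happens when an avatar reaches a parcel boundary.

    land_tags:    tags the owner uses to classify the parcel
    barrier_tags: owner tags that require explicit opt-in before entry
    avoid:        visitor's personal tags for content they never want to meet
    opted_in:     visitor's personal tags for content they accept
    """
    if land_tags & avoid:
        return "blocked"    # the visitor's own preferences win
    if (barrier_tags & land_tags) - opted_in:
        return "confirm"    # ask before crossing the owner's barrier
    return "enter"

club = {"club", "loud-music"}
barrier = {"loud-music"}
print(entry_decision(club, barrier, avoid={"horror"}, opted_in=set()))      # confirm
print(entry_decision(club, barrier, avoid=set(), opted_in={"loud-music"}))  # enter
print(entry_decision(club, barrier, avoid={"loud-music"}, opted_in=set()))  # blocked
```

Both inputs stay under the control of the people concerned: owners classify their own land and visitors filter by their own interests, which fits the shift from guardian to personal responsibility.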

Viewer

- allow all sorts of viewers, from 3D to cellphone to web sites

- Create a viewer with the absolute minimum functionality possible (i.e. core client functions) and then make it optionally extensible based on client capability.

- Put core protocol functionality (login/logout, movement, etc.) into a library

- Make the server send the client only the data it has asked for (the client sends an "I want prims" message and gets prims, etc.)

- Should be trivial to integrate with other systems if this is done correctly, e.g. IRC gateways for IMs. Note: SPAM prevention needs to be considered from the very beginning. "We can think about that later" won't work here!

- Allow the client to reassign controls and common functions to a Human Interface Device (gamepad, joystick, customized keyboard, a Bluetooth-connected Wii Remote, etc.)


- Client-side Script runtime

- In keeping with the idea of the core protocol functionality being a mere library, the rest of the viewer could be made very modular, using a scripting language as the glue, or scripts could be enabled to execute library calls directly, depending on configuration.

- scriptable chat and movement for avatars (bots)

- advanced graphical hud

- This needs clarification - it seems a good idea to allow 2D sprites rather than only 3D objects built on prims to be mapped onto the HUD, it also seems a good idea to empower the scripts with better capabilities for drawing on such a 2D plane

- Functional refactoring of viewer

- The current SL viewer implements functions from a large number of domains, many of them concerned with maintaining avatar presence (login, avatar state and behaviour, communications/protocol and event handling, etc), and many others concerned with the actual viewing (managing and rendering a graphic 2D UI and 3D world representation). It is large, monolithic and growing, and quite inflexible in this state, because new clients can be supported only by full code replication, and only one can run at a time per presence because of this. Large-scale refactoring is required here if we desire something better.

- To meet the stated requirement of allowing all sorts of viewers and running client-side scripting in a flexible manner, at least the presence-related functions need to be extracted from the current graphics code. This would then permit the following scenarios, for example:

- [Use cases]

- An intermittent mobile viewer could periodically manipulate or monitor an avatar presence currently logged in from home.

- Within the home, secondary screens running on home theatre equipment would offer a very appealing view of the world.

- A scripted presence could run without any 3D viewer at all, for example a shop transactions handler.

- An audio-only mobile viewer could attend an SL live music concert and still pay the musician's tip jar.

- An event-only viewer could send events to the presence corresponding to RL events, eg. from musical instruments.

- The machinima community could run multiple cameras observing a single presence for great flexibility.

- Under crowd conditions (eg. most live music events), a low-detail viewer could be switched in without relogging.

- SL-based games and sports would be able to run their own bespoke viewer and UI, without reworking presence code.

- A client could be used for remotely changing/editing 'information'. Think of a 'blog editor' for SL, for text displayed by hovertext, prim displays and future SVG or HTML displays.

- To achieve this, the "viewer" needs to be redesigned as a view attached to a presence (more precisely, to the client-side presence handler, because the real presence is more properly considered to be server-side), in an N:1 relationship. Client-side scripting would then also attach to the presence handler in a similar manner, for UI control, as a proxy for human input, and also for distributed computation at the request of servers.

- Or, as another way of looking at it, the presence handler (currently a part of the monolithic viewer) needs to become an independent multiplexer process, with graphics viewers and other applications and scripts attaching to it as needed, or not at all if no view is required. The development of many other diverse and quite minimalist viewers then becomes significantly easier.
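The N:1 relationship between views and the client-side presence handler can be sketched as a small multiplexer. It also shows the earlier idea of the server sending only requested data: each attached view declares the event kinds it wants, and the union of those interests is what the handler would ask the server for. Event shapes and kind names are hypothetical.

```python
from collections import defaultdict
from typing import Callable, DefaultDict, Dict, List, Set

Event = Dict[str, object]          # assumed shape, e.g. {"kind": "chat", "text": "hi"}
View = Callable[[Event], None]

class PresenceHandler:
    """Client-side presence handler that any number of views attach to (N:1)."""

    def __init__(self) -> None:
        self._views: DefaultDict[str, List[View]] = defaultdict(list)

    def attach(self, kinds: Set[str], view: View) -> None:
        """Attach a view that only wants the given kinds of events."""
        for kind in kinds:
            self._views[kind].append(view)

    def wanted_kinds(self) -> Set[str]:
        """Union of all interests: what the handler would request from the server."""
        return {kind for kind, views in self._views.items() if views}

    def dispatch(self, event: Event) -> int:
        """Deliver a server event to every interested view; return how many got it."""
        views = self._views.get(event["kind"], [])
        for view in views:
            view(event)
        return len(views)

full_viewer: List[Event] = []
audio_only: List[Event] = []
presence = PresenceHandler()
presence.attach({"prims", "chat", "audio"}, full_viewer.append)  # 3D viewer at home
presence.attach({"audio"}, audio_only.append)                     # mobile audio viewer
presence.dispatch({"kind": "audio", "stream": "live concert"})
presence.dispatch({"kind": "prims", "ids": [1, 2]})
print(sorted(presence.wanted_kinds()), len(full_viewer), len(audio_only))
# ['audio', 'chat', 'prims'] 2 1
```

Scripts, an event-only instrument bridge or extra machinima cameras would attach the same way, and the presence keeps running with zero views attached.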

Agents

- The envisaged massive scaling requires new forms of item organization, search, and access to cope with vast expansion. The current inventory mechanism is already stretched right now, so this issue needs early design attention.

- it should be possible to manage the inventories of all your agents under one identity, e.g. to move items from one inventory to another

- an organisation providing agents should be able to define which regions an agent is allowed to connect to, and what he/she can do with the objects in their inventory

- an agent domain should be able to have certain inventory pools which might be shared among agents (like the library in SL today)

- it should be possible to import and export friends lists or groups of friends

- it should be possible to maintain a friends list among various agent domains (related to import/export maybe but as protocol function)

- it should be possible to copy inventory from one agent domain to another if the object structure is compatible.

- an agent needs to provide various methods of verification

- an agent domain needs to define presence information among an agent's friends list. This should even be possible among various agent domains.

- Each agent needs to be able to define certain permissions on what other agents or groups of agents are allowed to see about him (online status, identity information, etc.)

Possible restrictions between regions and agents

- An agent domain should be able to define which regions an agent is allowed to connect to

- An agent domain should be able to define which objects are allowed to be rezzed on which region (e.g. company objects only on company regions etc. This might be related to object pools as described above).

- A region might allow only certain agent domains to connect

- A region might allow anybody to connect, but limit the rights of an agent depending on where it comes from and, e.g., which verification mechanism the agent provides.

- Standard specification of rights/permissions in a serialisable form that can be edited by the viewer and uploaded to an authoritative per-domain server?
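A serialisable rights specification of the kind asked about above could look like the sketch below, using JSON as an assumed wire format. The `RegionPolicy` fields, the rights names and the example domains are hypothetical; they illustrate a region that admits anyone but grants more rights to agents from trusted agent domains.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import List

@dataclass
class RegionPolicy:
    """Hypothetical serialisable rights description for one region."""
    region: str
    trusted_domains: List[str] = field(default_factory=list)
    open_to_all: bool = False          # False: only trusted agent domains may connect
    guest_rights: List[str] = field(default_factory=lambda: ["visit"])
    trusted_rights: List[str] = field(default_factory=lambda: ["visit", "build", "script"])

    def rights_for(self, agent_domain: str) -> List[str]:
        """Rights granted to an agent, depending on the domain it comes from."""
        if agent_domain in self.trusted_domains:
            return list(self.trusted_rights)
        return list(self.guest_rights) if self.open_to_all else []

    def to_json(self) -> str:
        return json.dumps(asdict(self), sort_keys=True)

    @classmethod
    def from_json(cls, text: str) -> "RegionPolicy":
        return cls(**json.loads(text))

policy = RegionPolicy("Company HQ", trusted_domains=["agents.company.example"], open_to_all=True)
wire = policy.to_json()                  # what a viewer would upload after editing
restored = RegionPolicy.from_json(wire)  # what the per-domain server would load
print(restored == policy)                               # True
print(restored.rights_for("agents.company.example"))    # ['visit', 'build', 'script']
print(restored.rights_for("agents.elsewhere.example"))  # ['visit']
```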

Regions

- allow regions of arbitrary size and form

- allow portals and landmass-style connections between regions or a mix of both

- a region should be able to decide which agents to let in depending on the grade of verification (age, RL identity, financial, ...)

- User defined topology. Like bookmarks, but in 2D. Crossing a custom boundary would result in a "walking teleport"

- Multiple instances of a region within a topology. Everyone can have a front row seat for the show.

- Allow as many avatars as will fit in the virtual space of a region. Don't limit the population of a region based on architectural limitations.

- Inactive land acreage and passive prims cost only the price of disk storage. Scripts cost the price of CPU power. Avatars cost the price of bandwidth. In the LL grid, these issues are all coupled together, but this will change in a distributed world.

- Beyond the confines of the Linden grid, land, prim, script and avatar limits should not have a-priori restrictions that are relevant only to the static Linden grid. In the distributed world, local resourcing will determine which limits and costs are appropriate. One fallout from this will be that inactive land acreage will cost next to nothing, since the price of storage is in freefall.
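Multiple instances of a region can be sketched as an allocator that fills each copy up to a per-instance capacity and starts a new copy when all are full, so the total population is not capped. The `InstancedRegion` class and the capacity value are assumptions for illustration.

```python
from typing import List

class InstancedRegion:
    """Spread avatars over copies of one region, each below a capacity."""

    def __init__(self, name: str, capacity: int) -> None:
        self.name = name
        self.capacity = capacity            # hypothetical per-instance limit
        self.instances: List[List[str]] = []

    def join(self, avatar: str) -> int:
        """Place an avatar in the first instance with room; return its index."""
        for index, members in enumerate(self.instances):
            if len(members) < self.capacity:
                members.append(avatar)
                return index
        self.instances.append([avatar])     # every instance is full: start another
        return len(self.instances) - 1

show = InstancedRegion("Concert Hall", capacity=2)
print([show.join(a) for a in ["ann", "bob", "cy", "dee", "eve"]])  # [0, 0, 1, 1, 2]
```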

Virtualization of regions

- Motivation

- The decision to map virtual regions to physical servers statically in the first version of Second Life has hardwired a number of implementation choices that impact on performance, resourcing and scalability. Roughly, and very simplified:

- Passive land acreage (and cost) became limited by (and linked to) local disk storage.

- Available prim counts became limited by local CPU power, and hence are non-scalable.

- Concurrent scripting load became limited by local CPU power, and hence is non-scalable.

- Server-side scalability for events became limited by local CPU power and bandwidth, and hence is virtually blocked.

- Hardware utilization and overall scalability plummeted, as unused local resources cannot assist elsewhere.

- The need for region handover at sim boundaries created numerous complexities and opportunities for problems to arise.

- Asset serving and many other services could not scale automatically with population growth nor with grid expansion.

- Local resourcing means that improvements made to a large grid cannot benefit its residents when visiting an attached grid.

- The new focus on decentralization and scalability offers an opportunity to overcome all of these issues, and provides the motivation to do so. However, to achieve this does require relinquishing attachment to the conceptually simple but inherently flawed approach that is static mapping of virtual resource usage to physical resource provision.

- Evolution

- Fortunately, creating a dynamic infrastructure is not inconsistent with the current implementation. The following observations highlight this:

- A dynamic server farm still requires some static physical topology, and a 2D grid is not horribly suboptimal at current levels of scaling.

- Additional networking components are easy to attach to an existing network if reduced latency is desired for dynamic services.

- Existing grid servers running local sim services could trivially offer their unused resources for non-local use.

- Very busy sims would degrade an additional dynamic infrastructure gracefully, simply by not contributing to it.

- This suggests that an evolutionary transition to a dynamic infrastructure is possible, alongside normal operation of the static grid. It is hard to predict how issues like economics might fare alongside infrastructure that is evolving towards less-constrained dynamic resourcing, but then nothing at all can be predicted about the future of a system that is being scaled to the scary numbers offered in Project_Motivation.

- Design principles

- In some ways, a dynamic resourcing architecture is simpler than a static one, because resource boundary issues do not need to be considered as in the static design. More importantly, however, a bigger simplification occurs a little down the road, because static systems simply do not scale along all dimensions, and therefore evolution rapidly hits a brick wall. This means that the simpler static system does not really address the right problem at all once all-axis scalability is added to the requirements. In contrast, dynamic systems are inherently expandable, even along unforeseen dimensions, because new tasks are handled as easily as old ones.

- The underlying principles for a dynamic design are relatively simple, and not hard to implement: (*)

- Make all work requests stateless (a region for example becomes merely a request parameter).

- Throw all work requests into a virtual funnel (aka. multi-priority task buffer and serializer).

- Place all servers in a worker pool, ready to take tasks out of the funnel as they appear.

- Hold all persistent world state in a distributed object store which is cached on all servers.

- Workers run a task, update world state if required, and send events which may create more work.

- Although it is a departure from the static design, there is nothing really radical in such a scheme, and many standard software components can be used to implement it, including the very efficient and easily managed web-type services.

- (*) Note that these are only principles. In an actual system design, this kind of scheme is repeated at many levels, in breadth and depth.

- Benefits

- A number of important improvements are gained immediately from such a dynamic infrastructure:

- Regions scale arbitrarily for events, up to the limit of resource exhaustion of the entire grid.

- The limits to desired scalability in any dimension are determined by policy, not by local limitations.

- Server death does not bring down a region, and the overall grid suffers only graceful degradation.

- Adding/upgrading grid server resources benefits the entire grid rather than favouring one region.

- Regions have no physical edges, so complex functionality is avoided and no sim handover problems can occur.

- Regions can have any size or shape of land whatsoever, and land cost (not price) drops to that of disk storage.

- Available prim counts are no longer tied to land acreage, allowing both highly dense and highly sparse regions.

- Events can be held in previously unused areas of the world (eg. regattas in mid-ocean) without prior resourcing.

- Small remote worlds and subgrids can be visited by large-grid residents, because local caching still operates.
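The design principles listed in this section (stateless requests, a prioritised funnel, a worker pool, shared world state, and tasks that create more work) can be sketched in a single process. This is a toy under loud assumptions: threads stand in for servers, a `queue.PriorityQueue` stands in for the funnel, a locked dict stands in for the distributed object store, and the `rez`/`start_script` handlers and region names are hypothetical.

```python
import itertools
import queue
import threading
from typing import Callable, Dict, List, Tuple

FollowUp = Tuple[int, str, dict]            # (priority, handler name, params)
Handler = Callable[[dict], List[FollowUp]]

class Grid:
    """Toy dynamic grid: one funnel, a pool of interchangeable workers."""

    def __init__(self, n_workers: int) -> None:
        self.funnel: "queue.PriorityQueue[Tuple[int, int, str, dict]]" = queue.PriorityQueue()
        self.store: Dict[str, int] = {}      # stands in for the distributed object store
        self.lock = threading.Lock()         # guards self.store
        self.handlers: Dict[str, Handler] = {}
        self._seq = itertools.count()        # tie-breaker: dequeue order is FIFO per priority
        self._seq_lock = threading.Lock()
        self._workers = [threading.Thread(target=self._work, daemon=True)
                         for _ in range(n_workers)]

    def submit(self, priority: int, handler: str, params: dict) -> None:
        """Throw a stateless work request into the funnel (0 = most urgent)."""
        with self._seq_lock:
            seq = next(self._seq)
        self.funnel.put((priority, seq, handler, params))

    def _work(self) -> None:
        while True:
            _, _, handler, params = self.funnel.get()
            try:
                # Run the task; it may update world state and return more work.
                for follow_up in self.handlers[handler](params):
                    self.submit(*follow_up)
            finally:
                self.funnel.task_done()

    def run(self) -> None:
        """Start the workers and wait until the funnel is drained."""
        for worker in self._workers:
            worker.start()
        self.funnel.join()

grid = Grid(n_workers=4)

def rez(params: dict) -> List[FollowUp]:
    key = f"{params['region']}/prims"        # the region is just a parameter
    with grid.lock:
        grid.store[key] = grid.store.get(key, 0) + params["count"]
    # Each new prim triggers a lower-priority script start.
    return [(1, "start_script", {"region": params["region"]})] * params["count"]

def start_script(params: dict) -> List[FollowUp]:
    key = f"{params['region']}/scripts"
    with grid.lock:
        grid.store[key] = grid.store.get(key, 0) + 1
    return []

grid.handlers.update(rez=rez, start_script=start_script)
grid.submit(0, "rez", {"region": "Plaza", "count": 3})
grid.submit(0, "rez", {"region": "Mid-ocean", "count": 2})
grid.run()
print(sorted(grid.store.items()))
# [('Mid-ocean/prims', 2), ('Mid-ocean/scripts', 2), ('Plaza/prims', 3), ('Plaza/scripts', 3)]
```

Nothing in the worker refers to a particular region, so adding workers adds capacity for every region at once, which is the property the benefits above rely on.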

IM

Scalability of Instant Messaging (IM) is required along all the dimensions given in Project_Motivation, with added requirements:

- Messaging must be extended to the distributed worldspace of attached regions and identities

- Messaging fanout capability needs to grow automatically with world population to avoid endemic lag

- Alongside increases to IM extent, we also need recipient-end facilities for IM denial and filtering

- Local vicinity messaging requires additional controls to tame crowd spam at very large events

The magnitude of this task, as shown by the inability of the current IM system to handle even current population levels, suggests that it may be best not to reinvent the wheel of IM, but merely to interface to existing open-source messaging systems.
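Recipient-end IM denial and filtering can be illustrated with a block list plus a per-sender token bucket, which also tames bursts of crowd spam. This is a minimal sketch; the `RecipientFilter` class and its rate and burst parameters are assumptions, and the injectable clock exists only to make the example deterministic.

```python
import time
from typing import Callable, Dict, Set, Tuple

class RecipientFilter:
    """Recipient-side IM filter: a block list plus a per-sender token bucket."""

    def __init__(self, rate: float, burst: int,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.rate = rate        # tokens (messages) regained per second, per sender
        self.burst = burst      # most messages a sender may deliver back-to-back
        self.clock = clock
        self.blocked: Set[str] = set()
        self._buckets: Dict[str, Tuple[float, float]] = {}  # sender -> (tokens, last time)

    def accept(self, sender: str) -> bool:
        """Return True if a message from `sender` should be shown."""
        if sender in self.blocked:
            return False
        now = self.clock()
        tokens, last = self._buckets.get(sender, (float(self.burst), now))
        tokens = min(float(self.burst), tokens + (now - last) * self.rate)
        if tokens < 1:
            self._buckets[sender] = (tokens, now)
            return False
        self._buckets[sender] = (tokens - 1, now)
        return True

fake_now = [0.0]
f = RecipientFilter(rate=1.0, burst=3, clock=lambda: fake_now[0])
f.blocked.add("spammer")
burst = [f.accept("crowd-member") for _ in range(5)]
fake_now[0] = 2.0                                      # two seconds later
later = (f.accept("crowd-member"), f.accept("spammer"))
print(burst, later)  # [True, True, True, False, False] (True, False)
```

Because the filter runs at the recipient, it keeps working unchanged whichever open-source messaging system carries the messages.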

Implementation thoughts

Decentralized assets

- How does a locally-run, disconnected grid provide texture/animation uploads or anything of use without the centralized system to accept the upload fee, read and update the user's inventory, or receive the new asset information?

- A local database backend stores all the local content. Any content on the remote asset servers with appropriate permissions (perhaps a "can download" flag?) can be downloaded for use on a local stand-alone grid

- Any new assets, or locally modified assets can be re-uploaded

- "Can download" must imply "Can modify" for practical reasons (DRM will not work!)
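The local stand-alone grid workflow described above can be sketched as a store that pulls remote assets only when the proposed "can download" flag is set, and records new or modified assets for later re-upload. The `Asset` and `LocalAssetStore` names and the in-memory dictionaries standing in for the remote asset servers and the local database are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Set

@dataclass
class Asset:
    data: bytes
    can_download: bool = False   # the proposed "can download" flag

class LocalAssetStore:
    """Asset storage for a stand-alone grid, synchronised with a remote grid."""

    def __init__(self, remote: Dict[str, Asset]) -> None:
        self.remote = remote                  # stands in for the remote asset servers
        self.local: Dict[str, bytes] = {}     # stands in for the local database backend
        self.dirty: Set[str] = set()          # new or locally modified asset ids

    def fetch(self, asset_id: str) -> Optional[bytes]:
        """Return an asset, downloading it only if the remote copy allows it."""
        if asset_id in self.local:
            return self.local[asset_id]
        asset = self.remote.get(asset_id)
        if asset is None or not asset.can_download:
            return None
        self.local[asset_id] = asset.data
        return asset.data

    def put(self, asset_id: str, data: bytes) -> None:
        """Store a new or modified asset; downloaded assets are modifiable locally."""
        self.local[asset_id] = data
        self.dirty.add(asset_id)

    def pending_uploads(self) -> List[str]:
        """Asset ids to re-upload when the grid is connected again."""
        return sorted(self.dirty)

remote = {"tree": Asset(b"tree-v1", can_download=True), "logo": Asset(b"logo-v1")}
store = LocalAssetStore(remote)
print(store.fetch("tree"), store.fetch("logo"))  # b'tree-v1' None
store.put("tree", b"tree-v2")                    # modify a downloaded asset
store.put("bench", b"bench-v1")                  # create a new one offline
print(store.pending_uploads())                   # ['bench', 'tree']
```

The open question about upload fees and inventory updates is not addressed here; the sketch only covers what the stand-alone grid itself can track.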

Regions

- If we have different region domains, will each of them have its own map, or could certain region domains somehow be connected together while each domain's space can still grow? Maybe a simplified map could show connected region domains, like a tag cloud: the current region and domain largest in the middle, with the connected domains around it, showing only the crossing region(s).

- Can we see into neighboring regions using a low LOD mesh dynamically created to represent the land and objects in a region?

- Virtualize the regions. If no one is in or near a region, don't waste hardware on it. If a region gets too busy, dynamically split it onto two servers, each taking half of the area.

- Allow arbitrary assignment of geography to processing resources - including dynamic migration.

- As someone who's developed 3D virtual world simulations before (and always wanted to create something like SL), the first thing that hit me when I entered SL for the first time was that sims were visible to residents (and scripts).

- A sim is an implementation detail and residents should never need to know they exist. Making that particular initial implementation choice (to divide processing power into a regular grid and distribute it statically over separate servers) has now locked it in for the future - as removing the concept of regions would break a lot of scripted content.

- However, it is still possible to lay a foundation that can deal with arbitrary assignment of geographic simulation to processing units (including dynamically) transparently and then add a 'backward-compatibility' layer over it which simulates the familiar square regions for legacy content (virtualizes them as suggested above).

- Such a system could also be implemented to allow non-flat and non-contiguous geography (e.g. planets). Current continents could be mapped into small surface patches in a 'legacy' area.

- It would also allow the possibility of distributing the various aspects of simulating a geographic area differently, such as physics, collision, scripts, occlusion etc. (for example, if there are few physical objects over a large area, one processing unit could compute the physics for the whole area, while a larger number of processing units execute the area's scripts if required)

- See Virtualization of regions for a scheme that addresses this.

Currency

- How will virtual currency be handled in a distributed grid architecture?

- Will LL still support L$ in future, or will it be phased out? (Perhaps a virtual currency should have no special place in the grid at all, just as there is no special currency on the web?)

- L$ exists as "a limited license right" within Second Life and therefore only makes sense within the official grid(s) owned by LL.

- Privately-owned grids could be responsible for issuing their own licenses, to throttle their clients' use of those private resources.

- Allowing private grids to issue their own limited license rights for use of their hardware makes it possible to disconnect them from many if not all centralized systems.

- Such disconnections may allow us to implement a fully localized grid for use on private intranets or on a standalone system.

- By their nature, private licenses would be mutually incompatible with the official "L$" licenses.

- However, it may be possible to set up automated gateways to convert between a 3rd-party currency and L$ or any other arbitrary currency - something like a cross between PayPal and the current popups for when one's L$ balance is too low

- Alternative servers (or local grids) with accounts linked to LL servers might be able to purchase L$ to be issued to their members for use on LL servers.

- Allow for secure transactions other than in L$ via PayPal, credit cards, etc.

- It is possible to maintain a single payment mechanism across multiple grids in a decentralized way using a social-network credit, or Hawala. Actual payment systems using this method exist (for example Ripple) and allow transparent convertibility between different currencies, some of them also allow transfers from and to PayPal and other online payment systems.

- Replace currency with cryptographically signed documents with the electronic equivalent of an IOU?
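The social-network credit idea can be illustrated with a toy model. This sketch assumes each participant extends a fixed amount of credit to people they trust. It routes a payment along a single chain of trust lines, each able to carry the full amount. It is a simplified illustration of the general "rippling" idea, not Ripple's actual protocol, and all names are hypothetical.

```python
from collections import deque
from typing import Dict, List, Optional

Credit = Dict[str, Dict[str, float]]   # credit[a][b]: how much a can still pay onward via b


def find_path(credit: Credit, payer: str, payee: str, amount: float) -> Optional[List[str]]:
    """Breadth-first search over trust lines that can each carry the whole amount."""
    prev: Dict[str, Optional[str]] = {payer: None}
    queue = deque([payer])
    while queue:
        node = queue.popleft()
        if node == payee:
            path = []
            while node is not None:
                path.append(node)
                node = prev[node]
            return path[::-1]
        for nxt, capacity in credit.get(node, {}).items():
            if capacity >= amount and nxt not in prev:
                prev[nxt] = node
                queue.append(nxt)
    return None


def pay(credit: Credit, payer: str, payee: str, amount: float) -> Optional[List[str]]:
    """Settle along one path: each hop uses up credit and creates credit in the reverse direction."""
    path = find_path(credit, payer, payee, amount)
    if path is None:
        return None
    for a, b in zip(path, path[1:]):
        credit[a][b] -= amount
        credit.setdefault(b, {})
        credit[b][a] = credit[b].get(a, 0) + amount
    return path


lines = {"alice": {"bob": 50}, "bob": {"carol": 30}, "carol": {}}
print(pay(lines, "alice", "carol", 20))   # ['alice', 'bob', 'carol']
print(pay(lines, "alice", "carol", 20))   # None: bob -> carol has only 10 left
```

No central ledger is involved. Each hop only adjusts a balance between two parties who already trust each other, so the hops could in principle sit on different grids.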

Assets

- It is possible to implement asset storage in a completely distributed way

- Assets need not be stored in fixed locations (such as in the 'home' grid of the creator, for example)

- A completely Peer-to-Peer (P2P) storage protocol is possible

- It's worth pointing out that P2P is the only topology that scales with the number of participants in the world. Other topologies have useful properties and get us part of the way (eg. private distributed authoritative world servers fronted by non-authoritative commercial big-iron caches), but ultimately P2P has no real challenger.

- To be successful it would have to retain many of the properties of the current 'fixed' storage schemes

- Available: Since the individual physical storage providers in a P2P network can't be trusted, a great deal of redundancy would be required to make it unlikely an asset would ever become unavailable

- Secure: Assets would need to be encrypted using strong PKI where appropriate (for example, so you can be sure nobody but the primary key holder (or capability holder) can view your script source or edit your object). For the same reason, no asset should be stored in whole at any single physical location (hence requiring the collusion of a large number of parties to even assemble the encrypted form of an asset in order to mount a cryptographic attack, unless the 'directory' of storage addresses for the asset has also been compromised). Obviously this wouldn't apply to the 'public' form of an asset (e.g. script bytecode, pre-optimized object mesh etc.).

- Persistent: Some mechanism to control the lifetime of assets may be needed

- Given the pace of storage technology progress, it may be possible to just keep every asset ever created (ride the wave of progress)

- If limited asset lifetimes are needed, some kind of distributed garbage-collection algorithm could be employed

- What would happen to assets that remain accessible but never 'accessed' for long periods of time? (We don't want the 'virtual data archaeologists' who research the 22nd century in one thousand years from now to keep running into cases of assets that are no longer available just because a long time has passed without 'access'!)

- Will it be possible to delete assets/accounts someday?

- This may be a hard problem to solve properly now, but just designing an architecture with it in mind and then implementing something similar to the fixed scheme utilized now would leave the possibility open of implementing the more general case in the future.

- Let's avoid a repeat of the mistake made in the 'design' of the WWW, where public pages are often stored on servers owned or leased by their creators and routinely lost to the future (save for the efforts of the Wayback Machine etc.).

- Assets should support being signed (and notarized)

- Perhaps they should even allow arbitrary meta-data to be attached to them by their creators (or anyone with perms) and accessed via scripts

- Assets should be raw data.

- Asset type, permissions, creator, watermark, etc would be meta-data.

- Any kind of meta-data could be added and used or ignored as needed.

- How will a region be able to accept an alien object from another system's inventory, or an inventory to accept an object from a foreign region?

- Would an upload fee make sense for transferring objects to different grids? Who will pay it? The creator? The first one who bought it and travels to the other grid?

- If so, how would that fee be determined for a completed object?

- Would some grids (or domains if you'd prefer) be able to reject items from other domains on a permission basis?
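The availability requirement and the "not stored in whole at any single location" requirement can be sketched with content addressing plus chunked, replicated placement. This minimal sketch assumes the asset bytes are already encrypted. It uses SHA-256 content addresses and rendezvous (highest-random-weight) hashing to choose which nodes hold each chunk. The chunk size, replica count and node names are illustrative assumptions. Type, creator, permissions and other meta-data would sit in a separate record keyed by the same asset id, leaving the asset itself as raw data.

```python
import hashlib
from typing import Dict, List, Set


def asset_id(raw: bytes) -> str:
    """Content address: an asset is named by the SHA-256 of its (encrypted) bytes."""
    return hashlib.sha256(raw).hexdigest()


def place(chunk_key: str, nodes: List[str], replicas: int) -> List[str]:
    """Rendezvous hashing: each node gets a per-chunk score; the top `replicas` nodes win."""
    def score(node: str) -> bytes:
        return hashlib.sha256(f"{chunk_key}|{node}".encode()).digest()
    return sorted(nodes, key=score, reverse=True)[:replicas]


def store(raw: bytes, nodes: List[str], chunk_size: int = 4, replicas: int = 3):
    """Split into chunks and copy each chunk to `replicas` distinct nodes."""
    aid = asset_id(raw)
    storage: Dict[str, Dict[str, bytes]] = {n: {} for n in nodes}
    chunks = [raw[i:i + chunk_size] for i in range(0, len(raw), chunk_size)]
    for i, chunk in enumerate(chunks):
        key = f"{aid}/{i}"
        for node in place(key, nodes, replicas):
            storage[node][key] = chunk
    return aid, len(chunks), storage


def fetch(aid: str, n_chunks: int, storage: Dict[str, Dict[str, bytes]], alive: Set[str]) -> bytes:
    """Reassemble from any surviving replica, then check the content address."""
    parts = []
    for i in range(n_chunks):
        key = f"{aid}/{i}"
        copies = [held[key] for node, held in storage.items() if node in alive and key in held]
        if not copies:
            raise LookupError(f"chunk {i} of {aid} is unavailable")
        parts.append(copies[0])
    raw = b"".join(parts)
    if asset_id(raw) != aid:
        raise ValueError("reassembled asset does not match its content address")
    return raw


nodes = [f"node{i}" for i in range(8)]
aid, n, storage = store(b"encrypted-asset-bytes", nodes)
alive = set(nodes) - {"node0", "node1"}   # two nodes drop out
print(fetch(aid, n, storage, alive))       # b'encrypted-asset-bytes'
```

With three replicas on distinct nodes, any two nodes can vanish without losing a chunk. On its own this placement does not prevent one node from ending up with every chunk of a small asset. A real scheme would need an explicit constraint for that.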

Protocols and interaction patterns

I've added this bucket to discuss the related topics of protocols and interaction patterns.

We have several building blocks proposed by Linden Lab, in the form of certified HTTP, REST, and capabilities.

We do not have much described from Linden in two other major areas. The first is how to choreograph calls into sets of calls which achieve an end (interaction patterns). The second is how to manage situations where we wish to set up an ongoing stream of interaction between multiple components. This last case is especially cogent when we discuss how region servers interact to provide the illusion of seamless land.

Zha Ewry - 9/25/07

Capabilities

The capabilities of a domain, region or client do not have to mesh perfectly, but we should make sure that a mismatch doesn't cause the system to choke.

3D Web

Simply put, the 3D Web means forcing the multidimensional flat data of the internet into a single continuous three-dimensional space. In the past it has largely failed because of a lack of hardware and of attainable social pressure. SL has the potential to fill the 3D Web niche if it can be integrated with traditional web applications at the region and domain level.

Limited Capability Clients

When a client such as a cellphone or any limited capability client (LCC) connects, many of the more advanced features could be turned off for them but if there were a way to automate some of them, then the LCC could still participate.

There are a number of features that would enable supporting LCCs.

- Scriptable capability deficiency handler

- This could allow the world to automate features not supported by the client so the client could still participate.

- A cellphone user goes to a show to listen to the audio stream, when they connect to the audio stream the region automatically seats them in a vacant chair.

- Scriptable Auto-navigation waypoint system (choices would be present as menus, etc)

- This could allow a user who can't see the world to navigate it by menu.

- Embedded web pages on prims could be made the primary focus, so they can be broken out and interacted with.

There is no requirement stated here that they be scripted in LSL. I don't think LSL would necessarily be appropriate -- Strife Onizuka 17:07, 27 September 2007 (PDT)

- LCCs now have their own use case section, Use_Cases#Limited Capability Clients.
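A scriptable capability deficiency handler could be as simple as a table, kept by the region, that maps each capability a client lacks to a region-side fallback. The sketch below assumes clients advertise a set of capability names on arrival. The capability names, the `Region` class and the chair-seating fallback (taken from the cellphone example above) are hypothetical. As noted above, nothing requires such handlers to be written in LSL.

```python
from typing import Callable, Dict, List, Set

Fallback = Callable[[str], str]   # takes an agent name, returns a description of what was done


class Region:
    """Hypothetical region that can stand in for capabilities a client lacks."""

    def __init__(self) -> None:
        self.fallbacks: Dict[str, Fallback] = {}

    def on_missing(self, capability: str) -> Callable[[Fallback], Fallback]:
        """Decorator registering a region-side handler for a missing client capability."""
        def register(fn: Fallback) -> Fallback:
            self.fallbacks[capability] = fn
            return fn
        return register

    def admit(self, agent: str, client_caps: Set[str], required: List[str]) -> List[str]:
        """Run a fallback for each required capability the client does not advertise."""
        actions = []
        for cap in required:
            if cap in client_caps:
                continue
            handler = self.fallbacks.get(cap)
            actions.append(handler(agent) if handler else f"{cap}: unsupported, feature disabled")
        return actions


venue = Region()


@venue.on_missing("avatar_movement")
def seat_in_vacant_chair(agent: str) -> str:
    # The cellphone example: a listener who cannot steer an avatar is seated automatically.
    return f"seated {agent} in a vacant chair"


print(venue.admit("phone_user", {"audio_stream"}, ["audio_stream", "avatar_movement", "3d_render"]))
# ['seated phone_user in a vacant chair', '3d_render: unsupported, feature disabled']
```

A full viewer that advertises every required capability gets an empty action list, so the same region serves both kinds of client.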

Capabilities: The path to 3D Web

With a framework in place to negotiate and script deficiencies in supported features, it becomes possible to support an LCC that is in fact a web browser. More importantly, the transition from the Web to a full 3D Web can then proceed one feature at a time.

A website could be described as something like an art gallery. Information is organized into rooms and typically lines the walls. The passages between the rooms allow traversal from one page to another. This analogy is nowhere near perfect.

With a dynamic capabilities system, the client could drop down to dumb mode and browse the art gallery like a webpage or it could explore it as a 3D space with all the features turned on.

- One interesting question for a migration, or incremental move, from static 3D content to a fully immersive environment is when, and how, you can bring the space from static traditional content to a space where multiple avatars can interact. When we look at this space, it is interesting to notice that we're really doing several things at once in a space like SecondLife: there is the presentation of 3D content, there is the melding of multiple presences with that content, and there is the presentation of the shared space to the viewers interacting in it. Note that a 3D web, that is to say our current web with 3D content, would be a very static place; there isn't much notion of shared presence in the 2D web. -- Zha

- If the Capabilities Framework is designed properly, it can allow for static 3D spaces with no user interaction. They won't be much fun, but that's another issue altogether. IMHO the 3D Web by itself is doomed, but when you tie it into the SL grid it has a chance of not being a total flop. LL is in an interesting position: they could be the next Network Solutions, but for the 3D Web. -- Strife Onizuka 23:49, 27 September 2007 (PDT)

ANALYSIS: Region Subdivision as a scaling method

- A recent AWGroupies discussion examined the issue of sim and region scalability in the direction of increasing the processing power available to regions. One mechanism for achieving scalability that was proposed was region subdivision of statically-bounded region spaces. This section first provides a rough picture of conditions under such scaling, and then examines the problems that exist with that approach which impose inherent limits to its scalability.

Crowd conditions under projected scaling -- a few numbers

- The events-scalability target for the normal use case implies an event scaling factor of 200 (20,000/100). This is the factor by which the number of people who wish to attend a maxed-out-sim event today will increase under Zero's total-population projections. (The massively higher viewer numbers possible for the SecondlifeTube use cases are not examined numerically for now, except in discussing event headroom.) Event scalability projections were calculated in the discussion on Project Motivation - Scaling for events.

- For this event scaling factor requirement of 200, the region subdivision method subdivides today's 65,536 m^2 of land per region into subregions of (65,536/200) = 327.68 m^2 under a policy of equal-size subdivision, which is a square of 18.1m per side and 25.6m diagonal. Each subdivision then holds 100 people, each with a personal space of 3.28 m^2 (a square of 1.81m per side). Thus, this represents a lighter packing density than normal crowd packing in real life (RL), which approaches 1 person per m^2, and hence represents a less onerous scaling than would a true crowd simulation. Visually then, this packing density would provide a normal and untroubling experience for any person used to popular standup venues in RL.

- Assuming simple rectangular NS/EW subdivision, the maximum rate of sim handover would be experienced every 18.1m of linear travel when aligned with a NS/EW grid, and the minimum rate when travelling diagonally every 25.6m. Determining the rate of handovers is hard without performing a statistical study of typical avatar movement patterns, so this is not attempted here. However, under rectangular spacing, a square subregion containing 100 people has 10 of them along each side bordering on the adjacent subregion, which provides a lower bound on handover for near-zero travel and therefore bears examination.

- At each such boundary, any outbound movement by anyone from within their subregion causes handover (this is reduced by hysteresis, see below), and what's more it occurs in both directions across a boundary concurrently (by symmetry with the adjacent subregion), within any cross-section of an event crowd.

- Therefore the maximum rate of handovers at a single boundary without any contribution from deeper within a subregion is somewhere between zero (nobody moving) and 2*10 = 20 (10 on each side of a boundary moving outbound). Since there are 4 adjacent subregions per subregion, this gives us a maximum of 20*4 = 80 possible handovers at the boundaries of any given subregion, under conditions of zero hysteresis, nobody coming out from deeper within their subregion, nobody getting closer than 1.8m to anyone else, and any movement whatsoever that is greater than zero distance (since hysteresis == 0). The average would be half of this or 40 handovers, since each of those 10 might be moving deeper into their own subregion rather than outbound.

- Note that this is not a handover rate as such (since movement rates are unknown), but a bounding coefficient governing maximum handover rates for minimum travel. It doesn't establish an actual target for handover rate handling, but just gives us some idea of the magnitudes involved --- in other words, it shows that discussing thousands of handovers per second per subregion is not relevant, but a few tens of handovers might be. It offers an initial suggestion of possible viability.
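The figures in this subsection can be reproduced with a few lines of arithmetic. This sketch assumes today's 256 m x 256 m region (65,536 m^2) and 100 avatars per subregion. It also assumes the avatars are laid out on a square grid, so 10 sit along each edge, and uses the 4-neighbour boundary count from above.

```python
import math

REGION_AREA = 256 * 256            # m^2 in one of today's regions (65,536)
SCALING_FACTOR = 20_000 / 100      # event scaling factor from the projections above
AVS_PER_SUBREGION = 100

sub_area = REGION_AREA / SCALING_FACTOR
side = math.sqrt(sub_area)
diagonal = side * math.sqrt(2)
personal_area = sub_area / AVS_PER_SUBREGION

per_edge = math.isqrt(AVS_PER_SUBREGION)     # avatars along one edge of a 10 x 10 layout
per_boundary_max = 2 * per_edge              # both sides of one boundary moving outbound
per_subregion_max = 4 * per_boundary_max     # four adjacent subregions
per_subregion_avg = per_subregion_max // 2   # half of the edge avatars move outbound on average

print(f"area {sub_area:.2f} m^2, side {side:.1f} m, diagonal {diagonal:.1f} m")
# area 327.68 m^2, side 18.1 m, diagonal 25.6 m
print(f"personal space {personal_area:.2f} m^2, {math.sqrt(personal_area):.2f} m square")
# personal space 3.28 m^2, 1.81 m square
print(per_boundary_max, per_subregion_max, per_subregion_avg)
# 20 80 40
```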

Potential problems with Region Subdivision

The above scenario gives some idea of operational conditions under the normal (ie. current) use case, which are complicated by the following observations:

Max-scaling conditions apply under very small scaling (density-based subdivision is required)

- First of all, it needs to be borne in mind that the above conditions do not apply only to the end projection of a 2bn user population, because crowds at events almost always congregate on the primary focus of attention, for example at the stage of a live music event. This would apply right now if regions and clients were scalable, because the top musicians already max out their sims for well-advertised concerts, even if they perform more than once a day. (Check out any Komuso Tokugawa concert, for example.) Live music is of course not the only type of event that maxes out sims in SL today, even without considering one-off special events like the recent SL4B celebration that attracted many thousands concurrently but was not able to handle them.

- Because (i) popular events easily exceed the 100-av subregion regularly today, (ii) will do so even more with each passing day, and (iii) audiences draw together around an attraction, the described subdivision policy of equal-size subdivision is not viable. Instead, we require an equal-density subdivision policy, because without it we will not be able to scale events in the immediate future, let alone far ahead. The equal-size policy was described only to provide a rough idea of the numbers that govern handover densities, but the actual policy to be employed must be based on local participant density or it will not accomplish its goal, because otherwise subregions at the event focus would be massively oversubscribed while outer ones would be empty.

- This is clearly more complex from a design and implementation standpoint, but it is inevitable under the region subdivision method, because otherwise the premise of limiting subregions to handling a maximum of 100 avs is exceeded very early on in the population growth, or even right now.

- An alternative to equal-density subdivision that might address the problem is modulus subdivision (the region is sliced into sections each containing rather less than the maximum number a typical CPU can handle, plus one more section to carry the remainder). Geometric land partitioning however is not an option, because agent density is highly non-uniform.

- In other words, we could not design for equal-size subdivision initially and then evolve to equal-density subdivision, but instead we would require a density-based subdivision right from the start: equal-size subdivision does not accomplish the goal, which is to handle loading from high avatar densities. And that introduces numerous difficulties, examined later.
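To make the difference between the two policies concrete, here is a sketch that splits a region by head-count rather than by area. It uses k-d-tree-style median cuts along the longer side, which is only one possible equal-density policy; the modulus subdivision mentioned above would be another. The crowd is synthetic: 1,000 avatars clustered around a stage at an assumed position. The equal-size comparison uses a 4 x 4 grid of 64 m cells.

```python
import random
from typing import List, Tuple

Point = Tuple[float, float]
Rect = Tuple[float, float, float, float]   # x0, y0, x1, y1


def equal_density_split(avatars: List[Point], capacity: int, bounds: Rect):
    """Halve `bounds` at the median avatar, along its longer side, until each piece holds <= capacity."""
    if len(avatars) <= capacity:
        return [(bounds, avatars)]
    x0, y0, x1, y1 = bounds
    axis = 0 if (x1 - x0) >= (y1 - y0) else 1
    pts = sorted(avatars, key=lambda p: p[axis])
    mid = len(pts) // 2
    cut = pts[mid][axis]
    if axis == 0:
        lo, hi = (x0, y0, cut, y1), (cut, y0, x1, y1)
    else:
        lo, hi = (x0, y0, x1, cut), (x0, cut, x1, y1)
    return (equal_density_split(pts[:mid], capacity, lo)
            + equal_density_split(pts[mid:], capacity, hi))


def equal_size_max(avatars: List[Point], n: int, size: float) -> int:
    """Largest head-count in any cell of an n x n grid of equal-size cells."""
    counts = {}
    for x, y in avatars:
        cell = (min(int(x / size * n), n - 1), min(int(y / size * n), n - 1))
        counts[cell] = counts.get(cell, 0) + 1
    return max(counts.values())


def clamp(v: float) -> float:
    return min(max(v, 0.0), 255.999)


rng = random.Random(42)
crowd = [(clamp(rng.gauss(128, 10)), clamp(rng.gauss(200, 10))) for _ in range(1000)]

pieces = equal_density_split(crowd, capacity=100, bounds=(0.0, 0.0, 256.0, 256.0))
print(len(pieces), max(len(a) for _, a in pieces))   # 16 63
print(equal_size_max(crowd, 4, 256.0))               # one 64 m cell holds several hundred
```

The equal-density pieces come out small near the stage and large elsewhere, which is exactly the dynamic geometry that the following sections show to be costly.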

Hard ceiling on scalability (non-existence of scalability headroom)

- The 18.1m per side square of the simple example subregion (which is not actually viable because equal-density subdivision would be needed as above, but is nevertheless still useful for analysis) seems at first glance to be a viable extent for a subregion. Unfortunately, this would not be the minimum size of a subregion under the figures of our Project_Motivation. The reasons why subregions would have to be far smaller than 18.1m include the following:

- Prim count expectations will rise, inevitably. This includes land-placed prims in the event region, but these are not the primary concern, since the biggest prim-related loading is generated by avatar attachments in all event-scaling scenarios. While it is hoped that sculpties will reduce the need for very high-prim attachments, a knowledge of people suggests that the opposite will occur, and that ever-more detailed attachments will increase instead of, in parallel with, or on top of sculptie-based designs. Region loading from prims will therefore be higher than forecasts based only on population growth.

- Scripting load is highly likely to increase, for three reasons:

- The number of scripts never decreases, and if the ceiling on land prim counts is raised then script numbers will rise too. More importantly, however, script numbers in attachments have no user-explicit ceiling, and will be expected to grow not only alongside normal population growth but also because people will want facilities for crowd management.

- As the population grows, new residents experience the pleasure of LSL programming, so the volume of available scripted objects always heads upwards. One additional factor that adds to the concurrent scripting load is that as the worldwide number of free or cheap scripts increases, the initial expense barrier to event participants running quality scripted products falls away. Since exponential growth of the SL population creates an ever-larger percentage of newcomers compared to well-funded oldies, this effect results in an ever more rapid takeup of scripted objects by residents. The onset of usage of scripted attachments is therefore no longer deferred while they earn some money, but becomes ever more immediate.

- Mono is coming. This will speed up scripts markedly, and means that more scripts will be runnable in a region for any fixed amount of CPU resource, and therefore inevitably also means that more scripts will be run. It also means that more efficient scripts will access backend assets more rapidly, which compounds loading still further.

- Adding these 3 points together suggests that region loading from scripts will therefore be higher than forecasts based only on population growth.

- The SecondlifeTube use cases are highly likely to become massively popular, potentially becoming the "new TV" of the second decade of this millennium. The impact of these use cases on server-side scalability is nothing short of ultra scary, and since the client-side changes for these use cases are quite trivial, the pressure will be on for massive server-side upscaling for events.

- Factoring in the effect of more prims, more scripts, and a future which includes SecondlifeTube-type clients, immediately indicates that the 18x18m minimum-size subregions that we thought might be sufficient for scalability by region subdivision are wholly inappropriate. Indeed, the analysis is out by some orders of magnitude (log10(~millions/20,000)), whereas not even a single order of magnitude improvement is available since 18m/10 is roughly the size of an avatar, and other factors add loading too.

- In case the impact of this is not immediately obvious: subregions would have to be smaller than an avatar to scale fully by this method. In other words, the method is completely non-scalable to many use cases of high interest, because region handover makes no sense when regions are smaller than avatars.

- Or, as another way of putting it, the region subdivision proposal contains an immoveable ceiling or cap on region scalability. What little headroom exists for possible reduction of subregions below 18m per side can never go below the size of an avatar because of handover, so the ceiling cannot be raised further. Simple use cases that are expected to be popular require scalability beyond that.
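The ceiling argument rests on the fact that subregion side length shrinks only with the square root of the load-splitting factor. Here is a minimal sketch, assuming equal-size square subregions of a 256 m region and a fixed 100 avatars per subregion.

```python
import math

REGION_SIDE = 256.0   # metres along one edge of a region today


def subregion_side(scaling_factor: float) -> float:
    """Edge of an equal-size square subregion when one region's load is split scaling_factor ways."""
    return REGION_SIDE / math.sqrt(scaling_factor)


for factor in (200, 2_000, 20_000, 200_000):
    print(f"{factor:>7} -> {subregion_side(factor):5.2f} m")
# prints 18.10, 5.72, 1.81 and 0.57 m for the four factors
```

A further 100-fold increase beyond the factor of 200 already brings the side down to about 1.8 m, roughly the footprint of an avatar. This matches the "18m/10" observation above.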

Adjacent region view non-scalability

- When a region is subdivided into a large number of small subregions, the 3D view from any given position will require object data to be gathered from all the region servers whose land or objects are visible within the observer's field of view, or possibly more for speculative caching. This process entails a lot of distributed activity. While that is not in itself a problem in the first instance, it gets progressively worse as the subregions become smaller and smaller, since the number then involved in almost any view will then grow larger and larger.

- What's more, the intended goal of letting other machines assist by working on their own subregion is lost, because the physical proximity of subregions to other subregions forces additional object viewing traffic on them. The separation of domains which gives tiling its good performance is lost when those domains are so close that they become local. This is a fundamental design flaw in Region Subdivision.

- The effect is pathological. As subregion size is reduced, the interconnection topology tends towards total connectivity.

- In other words, region object loading which used to be primarily local now becomes global as regions are subdivided, because 3D viewing distances are not reduced in proportion. This appears to be a recipe for large additional machine loading which wastes CPU and network resources by coupling region land extent to the (desired) distribution of computing.
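The growth in view fan-in can be estimated by counting how many square subregions intersect an observer's view circle. The sketch assumes an observer standing on a subregion corner and an illustrative 64 m view radius; real draw distances vary. The count grows roughly as pi * R^2 / s^2, i.e. with the inverse square of the subregion side s.

```python
import math


def subregions_in_view(view_radius: float, side: float) -> int:
    """Count grid squares of the given side that intersect a circle of view_radius centred at the origin."""
    n = math.ceil(view_radius / side) + 1
    count = 0
    for i in range(-n, n):
        for j in range(-n, n):
            # Distance from the origin to the nearest point of square [i*s, (i+1)*s] x [j*s, (j+1)*s].
            dx = max(i * side, -(i + 1) * side, 0.0)
            dy = max(j * side, -(j + 1) * side, 0.0)
            if dx * dx + dy * dy < view_radius * view_radius:
                count += 1
    return count


for side in (256.0, 64.0, 18.1, 1.81):
    print(f"side {side:>6} m -> {subregions_in_view(64.0, side)} subregions in view")
```

With full-size regions the observer touches 4 servers. At avatar-sized subregions the same view involves thousands, each of which must contribute object data.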

Increasing grid workload from subdivision (non-scalable internal workload)

- Region subdivision always increases the overall workload. This stems from the simple observation that, for any given area, area subdivision adds new interaction points within that area.

- As an example, consider a square of 100 units per side and containing a uniform density of objects (or other reasons for boundary interactions to occur), and assume that each unit of distance also generates a unit of boundary interaction workload:

- S/1: The original 100x100 square then features (4 * 100) = 400 units of workload, ie. 100 per side.

- S/2: Splitting the square down the middle results in 2*((2 * 100) + (2 * 50)) = 600 units of workload.

- S/4: Splitting each half into half again results in 4*((2 * 50) + (2 * 50)) = 800 units of workload.

- S/8: Splitting each quarter into halves again results in 8*((2 * 50) + (2 * 25)) = 1200 units of workload.

- S/16: More directly, dividing the original square into 16 results in 16*(4 * 100/(16/4)) = 1600 units of workload.

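- And so on. For every 4-way split, the boundary interaction workload doubles: after N such splits there are 4^N = 2^(2N) subregions carrying 400 * 2^N units of workload, so the total grows exponentially with the number of splits.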

- This then is a design for non-scalability, since in the limit it tends towards infinite internal workload as the subdivision tends towards zero size, and since the trend is exponential, it is the exact opposite of a scalable trend.
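The S/1 to S/16 figures can be checked by summing piece perimeters. This sketch assumes, as the example does, that every unit of boundary length costs one unit of workload. It also assumes each halving cuts pieces across their longer side, alternating orientation as in the steps above.

```python
def boundary_workload(side: float, halvings: int) -> float:
    """Total perimeter of all pieces after `halvings` successive halvings of a square."""
    w, h = side, side
    for _ in range(halvings):
        if w >= h:
            w /= 2          # cut across the longer (or equal) side
        else:
            h /= 2
    return (2 ** halvings) * 2 * (w + h)


print([boundary_workload(100, k) for k in range(5)])
# [400.0, 600.0, 800.0, 1200.0, 1600.0]   (S/1, S/2, S/4, S/8, S/16)
print([boundary_workload(100, k) for k in (0, 2, 4, 6, 8)])
# [400.0, 800.0, 1600.0, 3200.0, 6400.0]  (each 4-way split doubles the total)
```

The outer perimeter stays at 400 throughout, so all of the growth is internal boundary created by the subdivision itself.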

Hysteresis zone size shrinks to unusable

- Real systems which employ the concept of handover at region boundaries do not usually trigger handover at the actual boundary lines, because this would generate pathological behaviour for agents sitting exactly on the boundary, moving along the boundary line, or making infinitesimal movements while at the boundary. All of these cases can result in 'handover thrashing', ie. continuous switching back and forth between regions, potentially at very high rates.

- To avoid this, handover is usually tamed by placing handover bands that add hysteresis to handover at boundaries. This can be done in many ways, but the general goal is to introduce delay into the handover process (this ties into normal control theory for damping uncontrolled behaviour through negative feedback with a delay in the feedback loop). The handover band does not necessarily have a physical extent (it's more of a time parameter), but it can be associated with a physical extent if the velocity of an agent perpendicular to the boundary is considered.

- As the conceptual handover band shrinks in size, hysteresis is reduced, and handover behaviour draws closer to the pathological condition that it was designed to avoid.

- When regions are repeatedly subdivided as in this proposal, retaining existing sim behaviour will require reducing the size of handover bands correspondingly, otherwise in due course handover bands will overlap and the current handover design is then no longer viable since the handover process loses its normal 1:1 mapping. If the handover bands are reduced sufficiently, this then leads to handover thrashing.

- In the limit of subdivision, handover cannot work at all without continuous thrashing, although in practice the bag of problems is so full by then (eg. regions smaller than avatars) that it hardly matters.

- In summary, the concept of handover by region adjacency becomes progressively less manageable and usable as subregions shrink in size, and would need to be replaced by some form of non-adjacent region handover in order to retain the important principle of hysteresis.
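Here is a minimal sketch of a distance-based handover band. It assumes a single straight boundary between two regions and an agent jittering 0.3 m either side of it. The text notes that hysteresis is more naturally a time parameter. A distance band is used here only because it makes the effect of shrinking the band easy to see. Names and numbers are illustrative.

```python
from typing import Callable, List, Optional, Tuple


def make_handover(boundary_x: float, band: float) -> Callable[[float], Tuple[int, int]]:
    """Track which of two regions (0 = west, 1 = east) owns an agent.

    Ownership changes only once the agent is more than `band` metres past the
    boundary, so small movements around boundary_x do not trigger handovers.
    """
    owner: Optional[int] = None
    handovers = 0

    def step(x: float) -> Tuple[int, int]:
        nonlocal owner, handovers
        if owner is None:
            owner = 0 if x < boundary_x else 1
        elif owner == 0 and x > boundary_x + band:
            owner, handovers = 1, handovers + 1
        elif owner == 1 and x < boundary_x - band:
            owner, handovers = 0, handovers + 1
        return owner, handovers

    return step


def count_handovers(positions: List[float], boundary_x: float, band: float) -> int:
    step = make_handover(boundary_x, band)
    handovers = 0
    for x in positions:
        _, handovers = step(x)
    return handovers


jitter = [17.7 if i % 2 == 0 else 18.3 for i in range(20)]   # agent shuffling 0.3 m either side
for band in (1.0, 0.2, 0.0):
    print(band, count_handovers(jitter, boundary_x=18.0, band=band))
# 1.0 0    wide band: no handovers
# 0.2 19   band narrower than the jitter: thrashing on every step
# 0.0 19
```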

Adjacency-based power contribution is flawed

- Region Subdivision is an attempt to retain static mapping between land and servers while sharing the workload of highly loaded adjacent regions. In other words, load sharing requires land sharing (through subdivision), in this model. This constraint on load sharing is deeply flawed, as a very simple example illustrates:

- Imagine a grid split down the middle, in which all servers in the right half are 100% idle, and all servers in the left half are 100% busy.

- Now replace the server right in the middle of the left half by one that is running at 80% CPU capacity, that knows that a major event is scheduled in 5 minutes' time, and that is desperate for help to avoid total collapse.

- None of the adjacent servers in the left half can help, as they have no spare capacity. None of the extremely bored servers on the right half can help because their land is not adjacent to that of the ailing server.

- In 5 minutes' time, that server collapses.

- While this is not a real scenario, it illustrates well that the concept of land adjacency is not helpful for power sharing. In real scenarios, exactly the same considerations apply: the ability of a server to accept a share of a region's workload should have nothing at all to do with land adjacency, extent, or location. Land should be a virtual concept, and not tied to the CPU resourcing model.
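The thought experiment can be restated as a toy model that compares an adjacency-restricted helper search with a search over the whole grid. The grid size, utilisation figures and the 0.3 units of extra capacity are assumptions chosen to mirror the scenario, not measurements.

```python
from typing import Dict, List, Tuple

Server = Tuple[int, int]   # (column, row) of the land the server simulates


def find_helpers(load: Dict[Server, float], ailing: Server, need: float,
                 adjacency_only: bool) -> List[Server]:
    """Servers with at least `need` spare capacity that are allowed to help `ailing`."""
    if adjacency_only:
        c, r = ailing
        candidates = [(c + 1, r), (c - 1, r), (c, r + 1), (c, r - 1)]
    else:
        candidates = list(load)
    return [s for s in candidates
            if s in load and s != ailing and 1.0 - load[s] >= need]


# A 6 x 6 grid: columns 0-2 fully busy, columns 3-5 idle.
load = {(c, r): (1.0 if c < 3 else 0.0) for c in range(6) for r in range(6)}
ailing = (1, 2)            # in the middle of the busy half
load[ailing] = 0.8
need = 0.3                 # assumed extra capacity needed for the scheduled event

print(find_helpers(load, ailing, need, adjacency_only=True))        # []
print(len(find_helpers(load, ailing, need, adjacency_only=False)))  # 18
```

The only difference between the two calls is the candidate list. Dropping land adjacency as an eligibility rule is enough to turn zero helpers into eighteen.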

Dynamic subregioning vs stateless access to virtual regions

- As a little analysis revealed above, some key aspects of Region Subdivision have turned out to require dynamic handling:

- The actual subdivision policy cannot be equal-size subdivision, otherwise workload sharing is not effective and some subregions suffer overload beyond viable limits while others in the partitioning are relatively idle. Instead, a policy of equal-density subdivision is required, and this is a dynamic policy.

- Hysteresis for handover stability requires handover band reduction proportional to subregion shrinkage to retain 1:1 handovers, or else requires that the handover mechanism be replaced entirely by one that is not based on subregion adjacency. Such handling becomes highly dynamic.

- As subregions reduce in size to cope with increased region load, each contributing CPU is required to handle object fanout for more and more viewers because 3D views do not shrink in step with subregion reduction. Consequently subregion shrinkage tends towards serving the needs of everyone in the locality, which is the same goal that Virtualization of regions achieves without any handover overhead.

- When the design of region mechanisms starts to embrace changing sizes and adaptive handover systems, it is no longer a simple statically tiled grid architecture. Instead, it is halfway to a dynamic architecture in which region parameters are entirely virtualized, but instead of benefitting from that new freedom, it retains the disadvantages of the old design and introduces many new problems, as highlighted in the analysis.

- This tends to suggest that, if the static grid functionality is being redesigned to have dynamic features, then those dynamic features should operate independently of those static constraints, in order not to introduce new disadvantages by distorting the old model.

- The latter is the approach suggested in Virtualization of regions, which works alongside the old static resource mapping, merely offering any unused local resources to all other participants in a parallel dynamic infrastructure.

- It is worth noting that virtualizing regions inherently results in stateless communication with the servers that perform operations on virtual regions, and statelessness is a central tenet of REST. The principle of virtualizing regions is therefore inherently consistent with massively scalable, HTTP-based, load-balanced access to virtual resources, of which a virtual region is an archetypal case.
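The equal-density policy in the first point above can be illustrated with a median split in the style of a k-d tree: each subregion is cut at the median avatar coordinate, alternating axes, until no cell holds more than a chosen load. This is a minimal sketch for illustration, not a mechanism proposed on this page; the `Cell` type, the `subdivide` function and the `max_load` threshold are hypothetical, and avatars are reduced to 2D points.

```python
from dataclasses import dataclass, field


@dataclass
class Cell:
    """An axis-aligned subregion and the avatar positions (x, y) inside it."""
    x0: float
    y0: float
    x1: float
    y1: float
    agents: list = field(default_factory=list)


def subdivide(cell, max_load, depth=0):
    """Recursively split `cell` at the median avatar coordinate, alternating
    x and y, until every leaf holds at most `max_load` avatars. Leaves end up
    with similar load (equal density), not similar area (equal size)."""
    if max_load < 1:
        raise ValueError("max_load must be at least 1")
    if len(cell.agents) <= max_load:
        return [cell]
    axis = depth % 2                      # 0 = split on x, 1 = split on y
    pts = sorted(cell.agents, key=lambda p: p[axis])
    mid = len(pts) // 2
    split = pts[mid][axis]                # median coordinate becomes the boundary
    # Split by rank so both halves are strictly smaller; avatars sitting
    # exactly on the boundary coordinate may land in either half.
    lower, upper = pts[:mid], pts[mid:]
    if axis == 0:
        a = Cell(cell.x0, cell.y0, split, cell.y1, lower)
        b = Cell(split, cell.y0, cell.x1, cell.y1, upper)
    else:
        a = Cell(cell.x0, cell.y0, cell.x1, split, lower)
        b = Cell(cell.x0, split, cell.x1, cell.y1, upper)
    return subdivide(a, max_load, depth + 1) + subdivide(b, max_load, depth + 1)


def area(cell):
    return (cell.x1 - cell.x0) * (cell.y1 - cell.y0)


# Example: a 40-avatar crowd near one corner of a 256 m x 256 m region,
# plus two avatars elsewhere.
crowd = [(10 + i * 0.1, 10 + i * 0.1) for i in range(40)]
leaves = subdivide(Cell(0, 0, 256, 256, crowd + [(200, 200), (230, 50)]), max_load=10)
for leaf in leaves:
    print(len(leaf.agents), round(area(leaf), 1))
```

With this input, the leaf containing the avatar at (10, 10) covers a far smaller area than the leaf containing the avatar at (200, 200). Because the boundaries depend entirely on where avatars currently are, the partition has to be recomputed as they move, which is the sense in which this policy is dynamic.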

Scalability vs scaling

- When the proposal to use Region Subdivision was described and discussed in the AWGroupies discussion, the impact of the scary numbers of Project_Motivation on the proposal was not considered, and was in fact dismissed, on the grounds that scaling would occur in an evolutionary manner and therefore only a smaller number would initially be relevant. This is flawed reasoning, because it confuses scalability with degree of scaling.

- If the goal is to design a system that can handle (say) a population of N users, then in general there will be any number of different designs that can fulfil that requirement. These designs will typically differ in their scalability: one may support only N users and no more, one may be able to handle some small multiple of N, and another may be able to support a vastly larger population. However, because they all fulfil the short term goal of supporting N users, these differences are not apparent if the only design case that has been considered is the scaling to N. If a system implements a design that is viable only up to N users, then if that system needs to be scaled up further, the only way forward will entail a redesign, and if that new design is not evolutionary from the earlier one then this will also entail a reimplementation. This is clearly not satisfactory if the envisaged population is hugely greater than N right from the start, even if N is the initial target.

- In respect of the Region Subdivision proposal then, it is not sound scalability analysis to ignore the scary numbers of Project_Motivation just because some smaller number is envisaged as a short term scaling target (a specific implementation). All candidate designs need to be assessed against the scary numbers to ensure that they remain viable at the projected limits of scalability, regardless of the magnitude of short term physical scaling targets. Without that, gradual evolution is not assured.

Illusion of scalability (abstracting resources into clouds)

- First, a general observation on allegedly designing for scalability without actually doing so (also known as designing with clouds):

- You cannot put a scalable, massively parallelized HTTP access mechanism on the front of a non-scalable resource and magically claim that you have a scalable resource. All you really have is a scalable access mechanism. If the resource is non-scalable, then the scalable access mechanism is wasted. This means that if we put the resource in a cloud labelled "Scalability to be left to implementors" then we have not actually achieved resource scalability. We have not even produced a design that makes the resource scalable, let alone actually achieved scaling. We *know* that stateless HTTP front ends and load balancers give us a scalable access mechanism --- it's been normal industry practice for 10-15 years now (the author scaled a national ISP from 64k users to 2+ million over 6 years using that approach, and it was severely limited in scope). That's not the hard task that the AWG is tackling, it's the easy part of the hard task.

- So, if your project goal is to design scalable resources, you have to do exactly that. Anything else is self-delusion.

- Now to place this in the current context: it has been suggested that we might abstract scalability of regions by placing the internal design of regions inside a design cloud, and to claim scalability because we have placed a scalable HTTP-based access method in front of that cloud. That suggestion is not designing for region scalability, it is brushing the whole issue under the carpet. It does not achieve scalability of regions in the current SL, nor in the projected SL, neither in design nor in implementation. It is simply ignoring the issue of region scalability entirely.

- Given that scalability for events is needed extremely badly right now, and that the nil scalability for events of the current grid has been causing intense dissatisfaction among event participants for far longer (well over 3 years) than the limits along any other dimension of scalability, ignoring this element of scalability while pretending that it is addressed is not a responsible attitude towards SL residents. It should not even be considered. (This paragraph is not technical, but it is relevant in the sense that both design engineering and the work of the AWG ultimately have a social purpose.)

- We are not designing for a future SL in which 99.5% to 99.995% of the residents who wish to attend a given event have to stay at home --- see Talk:Project_Motivation. Region scalability for events is a primary goal, as well as an urgent requirement.
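The point made above about placing a scalable front end on a non-scalable resource is the usual bottleneck bound. As an illustration only, with symbols introduced here for this purpose: let $n$ be the number of stateless front-end servers, $x_f$ the request rate each of them can handle, and $X_{\mathrm{res}}$ the fixed capacity of the resource behind them. The throughput of the whole system is then bounded by

$$X(n) \le \min\{\, n\,x_f,\; X_{\mathrm{res}} \,\}$$

so for every $n \ge X_{\mathrm{res}} / x_f$ the bound is simply $X_{\mathrm{res}}$, however many front ends are added. Only raising $X_{\mathrm{res}}$, that is, making the resource itself scalable, lifts the ceiling.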

Summary of observations

- The Region Subdivision approach suffers from several difficulties and restrictions which will result in high overheads if scaled even to the lower reaches of the numbers offered in Project_Motivation, and high overheads generally reduce the achievable scale to far below predicted figures. Even more worrying, though, is that the proposed approach contains an inherent ceiling on achievable scalability (let alone actual scaling) for predictably popular use cases which are already implemented in other systems.

- Given that such difficulties are already identified at this early design stage, Region Subdivision would be a poor design choice for a new architecture.

"This concludes the results of the Norwegian Jury." Not quite nil points, but close.

ANALYSIS: Region Virtualization as a scaling method

- (this is a stub ... details to follow after work on Region Subdivision)

- For now, feel free to identify problems suggested by the early notes in Virtualization of regions.

<<< add your own detailed analyses here as further first-level sections >>>

ANALYSIS: Per-resident subdivision of the Grid as a scaling method

Observation:

- Any SL scalability study aims to scale the processing and network capacity of a given location in Second Life with the number of avatars present there, as linearly as can be attained: the logical solution is to tie this capacity to the avatars and not to the land. Because any given object or avatar can only be in one place at any given time, it also makes sense for the avatars and objects belonging to a given player to be simulated by the same server. Because each resident's grid usage is roughly comparable to another's, distributing the load per resident also makes sense. Because the load induced by a given resident is generally not expected to fluctuate widely in the short term, even an initially static distribution of server capacity across players is a good start. Because a resident's use of grid resources depends heavily on the number of inworld objects they own, scaling the capacity allotted to a given player according to their land holdings / prim allotment is a good solution, and is also fair, considering that landowners pay for the SL servers in the first place.

Principle:

- Instead of subdividing the simulation load geographically among static regions, subdivide it across the owners of the avatars and objects. Your avatar and all your prims, the physics and scripts of your objects, etc. are simulated and served by your one allocated simulator gridwide, regardless of where they are in the Grid. An avatar UUID is paired to a simulator, much as a web domain name is tied to an IP address through DNS.

- A client's viewer or an object is then understood as a presence, encoded as the ownerkey / avatar UUID, and each simulator only has to keep track of these presences on its owner's parcels, which is far less work than receiving data representing the session of each visiting avatar, their attachments, the states of the scripts running inside them, etc. When your avatar moves and a new parcel comes into view, your viewer gets the ID of the landowner, which in turn indicates which simulator (the "local simulator") runs that person's content. Your simulator connects to this server, provided the parcel is accessible to you. The "local" simulator then provides your own simulator with a presence list, which is nothing more than a list of the ownerkeys of the content or avatars present on this parcel. Your viewer opens a connection to the simulator of each ownerkey on this list, and each of them sends you the relevant information about the content it runs that is within your view. This distributes the load between multiple simulators. Your viewer simply aggregates all this information, coming from multiple sources, into a single 3D render view.
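The presence-list exchange described above can be modelled in a few lines. This is a minimal in-memory sketch under stated assumptions: `Simulator`, `build_view`, the directory dictionary and all parcel and owner identifiers are hypothetical, and the sketch collapses "your viewer" and "your simulator" into one function rather than modelling separate network connections.

```python
from dataclasses import dataclass, field


@dataclass
class Simulator:
    """Hypothetical per-owner simulator."""
    address: str
    # parcel id -> owner UUIDs whose avatars or objects are present there
    # (kept by the simulator of the parcel's landowner)
    presence: dict = field(default_factory=dict)
    # owner UUID -> list of (object name, parcel id) this simulator runs
    content: dict = field(default_factory=dict)

    def presence_list(self, parcel_id):
        """Ownerkeys present on one of this simulator's owner's parcels."""
        return sorted(self.presence.get(parcel_id, set()))

    def objects_on(self, owner, parcel_id):
        """Content of `owner` that this simulator runs on `parcel_id`."""
        return [name for name, where in self.content.get(owner, []) if where == parcel_id]


def build_view(parcel_id, landowner, directory, sims):
    """Follow the steps for one parcel coming into view.

    directory: owner UUID -> simulator address (the DNS-like mapping)
    sims:      simulator address -> Simulator
    Returns the aggregated object list and the simulators contacted.
    """
    local = sims[directory[landowner]]          # the "local simulator"
    view, contacted = [], {local.address}
    for owner in local.presence_list(parcel_id):
        sim = sims[directory[owner]]            # one connection per ownerkey
        contacted.add(sim.address)
        view.extend(sim.objects_on(owner, parcel_id))
    return sorted(view), sorted(contacted)      # merged into one render view


directory = {"alice": "sim-a", "bob": "sim-b", "carol": "sim-c"}
sims = {
    "sim-a": Simulator("sim-a", presence={"p1": {"alice", "bob", "carol"}},
                       content={"alice": [("house", "p1")]}),
    "sim-b": Simulator("sim-b", content={"bob": [("bob-avatar", "p1"), ("bob-car", "p9")]}),
    "sim-c": Simulator("sim-c", content={"carol": [("carol-avatar", "p1")]}),
}
view, contacted = build_view("p1", "alice", directory, sims)
print(view, contacted)
```

Each visitor's content is served by that visitor's own simulator, so the work of rendering a crowded parcel is spread over as many simulators as there are distinct owners present, while the landowner's simulator only supplies the list of ownerkeys.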

- Each resident UUID becomes tied, possibly dynamically, to a share of a specific server's processing and network capacity. In effect, the square metre (sqm) of SL land becomes the unit for measuring raw grid simulation power, allotted on a per-person basis.

- A mechanism for coupling UUIDs of land/content/avatar owners with their simulator's address is required, and replaces the current coupling of region and sim address.

- Such a mechanism (a form of DNS) could include some load-balancing method. Having multiple simulators running a given resident's content could help scalability, too.

- This multiplies the number of simulators concurrently serving the local content in crowded locations (especially the avatars and attachments of the many visitors), managing physics, running scripts, etc., and so distributes much of the additional load induced by the crowd's presence.

- This also makes all of one's content gridwide easily accessible from one server, wherever it is inworld, just as the inventory already does for one's non-rezzed content.
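The owner-to-simulator mapping described above (a form of DNS, possibly with load balancing across several simulators per owner) can be sketched as a small registry that hands out addresses in rotation, as round-robin DNS does. This is an illustrative assumption, not a specified design: `OwnerDirectory`, `register` and `resolve` are hypothetical names, and the addresses are placeholders.

```python
import itertools


class OwnerDirectory:
    """Hypothetical DNS-like registry mapping an owner UUID to one or more
    simulator addresses. Multiple addresses are handed out round-robin,
    which is one simple load-balancing method."""

    def __init__(self):
        self._records = {}   # owner UUID -> list of simulator addresses
        self._rotation = {}  # owner UUID -> endless iterator over those addresses

    def register(self, owner_uuid, addresses):
        addresses = list(addresses)
        if not addresses:
            raise ValueError("an owner needs at least one simulator")
        self._records[owner_uuid] = addresses
        self._rotation[owner_uuid] = itertools.cycle(addresses)

    def resolve(self, owner_uuid):
        """Return the next simulator address for this owner."""
        if owner_uuid not in self._rotation:
            raise LookupError(f"no simulator registered for {owner_uuid}")
        return next(self._rotation[owner_uuid])


directory = OwnerDirectory()
directory.register("owner-uuid-1", ["sim-a.example", "sim-b.example"])
print([directory.resolve("owner-uuid-1") for _ in range(3)])
# ['sim-a.example', 'sim-b.example', 'sim-a.example']
```

Round-robin is the simplest possible policy; a real registry could instead weight addresses by each simulator's spare capacity.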

- Object and avatar entry is controlled by the local simulator reporting or not reporting the presence of objects and avatars entering from the outside.

- This means people and objects can move anywhere freely, without restraint, but they can be viewed as "being there" and interact with things and people present there only if the local land owner allows it. No more bumping into red lines, but in exchange you don't see any of the people and content that are on the land you're not allowed in, and no one there can see you or any of your objects, nor interact with you. That's the "ghost snub" way of access restriction, in a sense.

- Basically, one can fly anywhere uninterrupted in the Grid, including empty ocean, and the landmass and sea look seamless, but parcels that are not accessible appear entirely void of any avatar or content, your viewer not even receiving any data for them. This is an appreciable improvement in both privacy and travel ability.
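The "ghost snub" access rule amounts to filtering the presence list that the local simulator reports. A minimal sketch, assuming a parcel access record with an optional allow list and a ban list; the record layout and the `reported_presence` function are hypothetical.

```python
def reported_presence(access, present, viewer):
    """Presence list a local simulator reports to `viewer` for one parcel.

    access:  {"allow": set of UUIDs, or None for a public parcel,
              "ban": set of UUIDs}
    present: owner UUIDs whose avatars/objects are on the parcel
    viewer:  UUID of the resident asking
    """
    allow = access.get("allow")
    ban = access.get("ban", set())

    def admitted(uuid):
        return uuid not in ban and (allow is None or uuid in allow)

    if not admitted(viewer):
        return []  # the parcel appears empty: no content data is sent at all
    # Residents without access are simply not reported, so nobody on the
    # parcel sees them or their objects.
    return sorted(u for u in present if admitted(u))


public = {"allow": None, "ban": {"mallory"}}
print(reported_presence(public, {"alice", "bob", "mallory"}, "bob"))
print(reported_presence(public, {"alice", "bob", "mallory"}, "mallory"))
```

Note that there is no boundary to enforce: the banned resident can fly straight through, but the two sides simply never receive each other's presence.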

- The land topology, parcel information, wind, sun, clouds, etc. are separated from the "regular job" of simulating content and can be moved to yet another cluster of servers/databases.

- I figure the land textures would be served by this "Land domain"; alternatively, if serving land textures is kept as a job for the sim, viewers would display the default grey texture on the ground while it loads.

- It would allow per-parcel ground texture customisation.

- It means the landmass can vastly outgrow the "inhabited" zones of SL: land with no content or owner can be added independently, and coasts, plains and oceans can be added transparently between the islands and continents. Because such content is extremely static, it can be cached for long periods of time, or perhaps even downloaded as a standalone addition.

- However, no owner for the land means no simulation, so you cannot meet people if you're there, you're lost in your own empty alternate dimension and not connected to the grid except for fetching heightmap, sun, wind and clouds information.

- This allows non-uniform server types for simulators, which can simply be rated by their total sqm capacity, and whose "slices" of that capacity are allocated among SL content owners according to their gridwide total land holdings.
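Allocating sqm slices on non-uniform servers is a bin-packing problem. The sketch below uses first-fit decreasing, a standard heuristic swapped in here for illustration (the page does not specify an algorithm); `allocate` and the owner and server names are hypothetical. The capacities follow the text: a standard region is 256 m x 256 m = 65,536 sqm, and a server currently runs four of them.

```python
REGION_SQM = 256 * 256            # one standard region: 65,536 sqm
FULL_SERVER_SQM = 4 * REGION_SQM  # four standard regions per server today


def allocate(owners, servers):
    """Assign each owner's total land holdings (sqm) to one server.

    owners:  owner UUID -> total sqm held gridwide
    servers: server address -> capacity in sqm (servers need not be uniform)
    Returns (placement, unplaced): owner -> server address, and the owners
    whose holdings exceed every server's remaining capacity.
    """
    # Largest servers first, so big holdings find room where possible.
    remaining = dict(sorted(servers.items(), key=lambda kv: -kv[1]))
    placement, unplaced = {}, []
    # First-fit decreasing: place the largest holdings first.
    for owner, sqm in sorted(owners.items(), key=lambda kv: -kv[1]):
        for addr, free in remaining.items():
            if sqm <= free:
                placement[owner] = addr
                remaining[addr] = free - sqm
                break
        else:
            unplaced.append(owner)  # no single server has room left
    return placement, unplaced


servers = {"small": REGION_SQM, "big": FULL_SERVER_SQM}
owners = {"alice": 200_000, "bob": 50_000, "carol": 30_000, "dave": 300_000}
print(allocate(owners, servers))
```

Here "dave" holds more than a full server's worth of land (262,144 sqm) and cannot be placed on any single server, which is the multi-server case discussed in the following points.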

- Employing more than one full server's worth of simulation capacity would require a mechanism for balancing the load between the same-owner simulators.

- Such a mechanism already exists: geographic proximity.

- A resident's parcels could be joined across different regions, fusing prim capacity and total visitor capacity gridwide.

- They would only fuse up to a full server's worth.

- Right now a single server (machine) runs four standard regions, so fusing offers a small but appreciable potential improvement. Also, if a sim can occasionally be transferred from one server to another, and given the possible non-uniformity of servers mentioned above, any sufficiently large land acquisition could get your personal sim transferred to a bigger server that fits it better. So the "full server" limit could scale, too. A dynamic mechanism for moving the "slabs of sqm" to appropriately sized servers would allow some load-balancing in advance.

- How would a viewer know which of multiple servers running a single person's land or content (in the case of more than a full server's worth of sqm) to connect to?

- One solution is for each of the multiple servers to run all the land and content (memory overhead?) and for connecting viewers to be distributed among them (this amounts to sharding). Another is a mechanism that forwards a viewer from one of those sims to the relevant one based on geographic proximity (preferred).

- This rids us of the region crossing lag, since the physics of your avatar stay tied to your own simulator which does not change for the whole session.
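The preferred option for multi-server owners, forwarding a viewer to the same-owner server nearest to it, can be sketched as follows. This assumes, hypothetically, that each server knows the gridwide (x, y) coordinates of the parcels it runs and that every server runs at least one parcel; `nearest_shard` and `on_connect` are illustrative names, not an existing API.

```python
import math


def nearest_shard(viewer_pos, shards):
    """shards: server address -> non-empty list of (x, y) gridwide coordinates
    of the owner's parcels that server runs. Return the address whose
    closest parcel is nearest to the viewer."""
    return min(shards, key=lambda addr: min(math.dist(viewer_pos, p) for p in shards[addr]))


def on_connect(self_addr, viewer_pos, shards):
    """What a server does when a viewer connects: serve it, or redirect it
    to the same-owner server closest to the viewer's position."""
    target = nearest_shard(viewer_pos, shards)
    return ("serve", self_addr) if target == self_addr else ("redirect", target)


shards = {
    "sim-1": [(1000, 1000), (1256, 1000)],
    "sim-2": [(50000, 20000)],
}
print(on_connect("sim-2", (1100, 1050), shards))  # ('redirect', 'sim-1')
```

Any of the owner's servers can therefore be the first point of contact, as with the redirecting proxies discussed earlier on this page, and the viewer ends up on the server that runs the land it is actually near.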