Sculpted Prims: Technical Explanation


Introduction

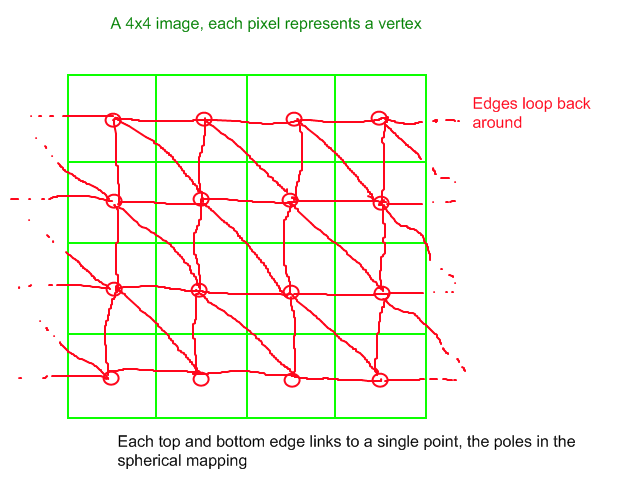

Sculpted prims are three-dimensional meshes created from textures. Each texture is a map of vertex positions: at full resolution each pixel would be one vertex, but fewer pixels may actually be used because of sampling (read below how the Second Life viewer treats your data). Each row of pixels (vertices) links back to itself, and every block of four pixels forms two triangles. At the top and bottom, the vertices link to their respective poles.
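As an illustration of this triangulation, here is a minimal C++ sketch (not the viewer's actual code) that turns a row-major grid of vertices into triangles, two per block of four neighbouring vertices. The `Triangle` struct, the function name `triangulateGrid` and the winding order are illustrative assumptions; the `wrapColumns` flag models rows that link back to themselves.

```cpp
#include <vector>

// One triangle, as three indices into a row-major vertex grid.
struct Triangle { int a, b, c; };

// Hypothetical helper: triangulate a grid of `rows` x `cols` vertices stored
// row-major. Every block of four neighbouring vertices becomes two triangles.
// If `wrapColumns` is true, the last column connects back to column 0.
std::vector<Triangle> triangulateGrid(int rows, int cols, bool wrapColumns)
{
    std::vector<Triangle> tris;
    int const quadCols = wrapColumns ? cols : cols - 1;
    for (int r = 0; r + 1 < rows; ++r) {
        for (int c = 0; c < quadCols; ++c) {
            int const c1 = (c + 1) % cols;       // wraps to column 0 at the seam
            int const v00 = r * cols + c;        // this row, this column
            int const v01 = r * cols + c1;       // this row, next column
            int const v10 = (r + 1) * cols + c;  // next row, this column
            int const v11 = (r + 1) * cols + c1; // next row, next column
            tris.push_back({v00, v01, v11});
            tris.push_back({v00, v11, v10});
        }
    }
    return tris;
}
```

With `wrapColumns` set to false, the same function suits a grid whose seam is closed by repeating the first vertex at the end of each row instead.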

Pixel Format

The alpha channel (if any) in position maps is currently unused, so each pixel has 24 bits: 8 bits per color channel, giving values from 0 to 255. Each color channel represents an axis in 3D space: red, green, and blue map to X, Y, and Z respectively. If you are mapping from other software, keep in mind that not all 3D programs name their axes the same way SL does. If the default orientation in SL differs from the one in your modeling program, compare the axes and swap them where appropriate.
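Swapping axes can be sketched as below. `Vec3` and `swapAxes` are hypothetical names; which two axes to swap depends on your modeling program and is an assumption you have to check yourself. Note that swapping exactly two axes mirrors the model; negating one of the swapped axes as well turns the mirror into a rotation.

```cpp
#include <array>
#include <utility>

// A position as (X, Y, Z), i.e. in the order red, green, blue.
using Vec3 = std::array<double, 3>;

// Hypothetical helper: swap two axes of a position exported from a program
// whose axis naming differs from SL's. Axis indices: 0 = X, 1 = Y, 2 = Z.
Vec3 swapAxes(Vec3 p, int axisA, int axisB)
{
    std::swap(p[axisA], p[axisB]);
    return p;
}
```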

The color values map to an offset from the origin of the object <0,0,0>, with values less than 128 being negative offsets and values greater than 128 being positive offsets. An image that was entirely <128,128,128> pixels (flat gray) would represent a single dot in space at the origin.

On a sculpted prim with a size of one meter, color values from 0 to 255 map to offsets from -0.5 to 0.496 meters from the center. Combined with the scale vector that all prims in Second Life possess, sculpted prims have nearly the same maximum dimensions as regular procedural prims (9.961 meters across rather than 10). The last 39 mm is lost because the maximum color value is 255, whereas 256 would be required to generate a positive offset of exactly 0.5.
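The numbers above are consistent with offset = (v - 128) / 256 x scale: 0 gives -0.5, 128 gives 0 and 255 gives 127/256, about 0.496, for a 1 m prim. This formula is inferred from those end points rather than taken from the viewer source, so treat the C++ sketch below (function names hypothetical) as an assumption. It also shows the inverse an exporter would need, which must clamp because +0.5 x scale cannot be encoded.

```cpp
#include <algorithm>
#include <cmath>
#include <cstdint>

// Assumed mapping, inferred from the end points quoted above: byte 0 -> -0.5,
// 128 -> 0, 255 -> +127/256 on a 1 m prim. `scale` is the prim's size along
// that axis in metres.
double byteToOffset(std::uint8_t v, double scale)
{
    return (static_cast<int>(v) - 128) / 256.0 * scale;
}

// Inverse for exporters: quantise an offset in metres to a channel value.
// Clamped to 0..255, because +0.5 * scale would need the value 256.
std::uint8_t offsetToByte(double offset, double scale)
{
    double const v = std::round(offset / scale * 256.0 + 128.0);
    return static_cast<std::uint8_t>(std::min(std::max(v, 0.0), 255.0));
}
```

With a 10 m scale, `byteToOffset(0, 10.0)` is -5.0 m and `byteToOffset(255, 10.0)` is 4.9609375 m, about 9.961 m in total, matching the figure above.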

Texture Mapping

The position map of a sculpted prim also doubles as the UV map, describing how a texture will wrap around the mesh. The image already defines which pixel each vertex corresponds to, and this is used to generate UV coordinates for the vertices as they are created. This is a big advantage for texture mapping over procedural prims created in Second Life: you can do all of your texturing in a 3D modeling program such as Maya, and when you export the position map you know the UV coordinates will be preserved exactly in Second Life.
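A minimal sketch of that idea, assuming the UV of each vertex is simply its normalised position in the sampling grid, so the texture spans the position map exactly once. This assumption is not confirmed by the analysis here, and `gridUV` is a hypothetical name.

```cpp
#include <utility>

// Hypothetical: UV of the vertex at grid column `col`, row `row` in a grid of
// `cols` x `rows` vertices. Row 0 is the bottom row, so it gets v = 0.
std::pair<double, double> gridUV(int col, int row, int cols, int rows)
{
    double const u = static_cast<double>(col) / (cols - 1);
    double const v = static_cast<double>(row) / (rows - 1);
    return {u, v};
}
```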

Rendering in Second Life viewer

This analysis is based on the 1.16.0(5) viewer source and some tests.

Warning: Textures are stored using lossy JPEG2000 compression. At 64x64 or below, the compression artifacts are numerous and severe; at 128x128 they are still present but greatly reduced. For now, therefore, 128x128 gives much better results, even though it wastes a lot of memory. Going beyond 128x128 gives little benefit, so please don't!

Update: Lossless JPEG2000 compression is implemented, but does not upload all images correctly. This issue is in JIRA as VWR-2404; please vote for it to support fixing this feature.

Update: Lossless compression and retrieval appear to be working as of release candidate 1.20.14 (or a few releases earlier).

Important: When we refer to the texture as the viewer code sees it, the first row (0) is the bottom row of your texture. Columns run from left to right.
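A one-line helper makes this convention explicit. It assumes your image editor numbers rows from the top, as many editors display them; the name `editorRowToViewerRow` is hypothetical. Columns need no conversion.

```cpp
// Convert a row index counted from the top of the image (as many image
// editors show it) to the viewer's row index, where row 0 is the bottom row.
int editorRowToViewerRow(int editorRow, int height)
{
    return height - 1 - editorRow;
}
```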

At its highest level of detail, the Second Life viewer renders a grid of vertices in the following form (for a sculpted prim with spherical stitching):

- 1 top row of 33 vertices all mapped to a pole

- 31 rows of 33 vertices taken from your texture

- 1 bottom row of 33 vertices all mapped to a pole

In each row the 33rd vertex is the same as the 1st; this stitches the sculpture together at the sides. The poles are taken from the pixel at column width/2 of the first and last rows of your texture. In a 64x64 texture that is the 33rd column, i.e. pixels (32, 0) and (32, 63), since rows and columns are counted from 0.

For example, at the highest level of detail Second Life supports, a spherical sculpted prim requires a mesh of 32 x 33 vertices (32 distinct vertices per row, since the 33rd repeats the 1st, and 33 rows). With a 64x64 texture, this grid of vertices is sampled from the texture at the points:

(32,0) (32,0) (32,0) ... (32,0) (32,0) (32,0)

(0,2) (2,2) (4,2) ... (60,2) (62,2) (0,2)

. . .

(0,60) (2,60) (4,60) ... (60,60) (62,60) (0,60)

(0,62) (2,62) (4,62) ... (60,62) (62,62) (0,62)

(32,63) (32,63) (32,63) ... (32,63) (32,63) (32,63)
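The following C++ sketch regenerates this sampling pattern for a 64x64 map, including the pole pixels at column width/2 of the first and last rows. It only encodes the pattern as described in this analysis; `Pixel` and `sphereSampleGrid` are hypothetical names, and no real texture is read.

```cpp
#include <utility>
#include <vector>

// (column, row) of a texture pixel; row 0 is the bottom row of the texture.
using Pixel = std::pair<int, int>;

// Highest-LOD sampling pattern of a spherical sculpted prim with a 64x64 map,
// as listed above: 33 rows of 33 samples each.
std::vector<std::vector<Pixel>> sphereSampleGrid()
{
    int const size = 64;
    int const step = 2;
    std::vector<std::vector<Pixel>> grid;

    // Pole row: every vertex uses pixel (size / 2, 0).
    grid.push_back(std::vector<Pixel>(33, Pixel(size / 2, 0)));

    // 31 rows taken from the texture at rows 2, 4, ..., 62.
    for (int row = step; row < size; row += step) {
        std::vector<Pixel> samples;
        for (int col = 0; col < size; col += step)
            samples.push_back(Pixel(col, row)); // columns 0, 2, ..., 62
        samples.push_back(Pixel(0, row));       // 33rd vertex repeats the 1st
        grid.push_back(samples);
    }

    // Pole row: every vertex uses pixel (size / 2, size - 1).
    grid.push_back(std::vector<Pixel>(33, Pixel(size / 2, size - 1)));
    return grid;
}
```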

The other types of sculpted prim stitching (torus, plane, cylinder) work similarly, but without the complication of poles. The torus requires a grid of 32x32 points (at the highest level of detail), which it samples at pixel positions 0, 2, 4, ..., 60, 62 in each direction on the texture. The plane requires a 33x33 grid, which it samples at pixel positions 0, 2, 4, ..., 60, 62, 63 in each direction. The cylinder requires a 32x33 grid, which it samples using the torus scheme in the horizontal direction and the plane scheme in the vertical.
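The two one-dimensional schemes can be sketched as follows for a 64-pixel axis. `Scheme` and `samplePositions` are hypothetical names. `step` is 2 at the highest LOD; passing 4 or 8 gives the sparser positions described for the lower levels of detail.

```cpp
#include <vector>

// The two one-dimensional sampling schemes described above.
enum class Scheme { Torus, Plane };

// Pixel positions sampled along one axis of a 64-pixel map.
// Torus: 0, step, ..., 64 - step. Plane: the same plus the last pixel, 63.
std::vector<int> samplePositions(Scheme scheme, int step)
{
    int const size = 64;
    std::vector<int> positions;
    for (int p = 0; p < size; p += step)
        positions.push_back(p);
    if (scheme == Scheme::Plane)
        positions.push_back(size - 1);
    return positions;
}
```

A cylinder would use `samplePositions(Scheme::Torus, step)` for the columns and `samplePositions(Scheme::Plane, step)` for the rows, giving the 32x33 grid at the highest LOD.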

It is recommended to use 64x64 images (32x32 textures are not officially supported for sculpted prims and may give unexpected results). In theory a 32x32 map should give almost the same quality, but for a spherical sculpted prim the current code will not use the last (top!) row of your texture both as vertices and as the row to take the pole from. Instead it generates the pole one row early, so your last two rows of vertices are both filled with the pole. In any case, there are 33 vertices from the north to the south pole of a spherical sculpted prim, so a 32x32 texture cannot be expected to contain quite enough information to define the sphere.

The lower levels of detail use 17 and 9 positions (plane scheme) or 16 and 8 positions (torus scheme), taken in the same way as above but with sparser sampling. For example, instead of sampling at positions 0, 2, 4, ..., 60, 62, 63 (to get 33 points), the plane scheme samples at positions 0, 4, 8, ..., 56, 60, 63 (to get 17 points). Make sure the most important vertices are placed at positions that are used at every LOD.
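To follow this advice, it helps to know which positions survive every LOD. This hypothetical helper intersects the three sampling patterns on a 64-pixel axis (steps 2, 4 and 8); the result is every eighth pixel plus, for the plane scheme, the last pixel 63.

```cpp
#include <vector>

// Pixel positions along one axis of a 64-pixel map that are sampled at all
// three LODs (steps 2, 4 and 8). With `plane` true, the plane scheme's last
// pixel (63) is sampled at every LOD; with `plane` false, the torus scheme.
std::vector<int> positionsUsedAtEveryLod(bool plane)
{
    int const size = 64;
    int const steps[] = {2, 4, 8};
    std::vector<int> common;
    for (int p = 0; p < size; ++p) {
        bool everyLod = true;
        for (int step : steps) {
            bool const sampled = (p % step == 0) || (plane && p == size - 1);
            everyLod = everyLod && sampled;
        }
        if (everyLod)
            common.push_back(p);
    }
    return common;
}
```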

The quality of the final result also depends on the JPEG2000 compression. For organic shapes you may find lossy compression sufficient; otherwise, use a 64x64 texture with lossless compression (check "Use lossless compression" when uploading).

Examples

A pack of sculpture maps used to be available for download at www.jhurliman.org/download/sculpt-tests.zip, but it is no longer online.

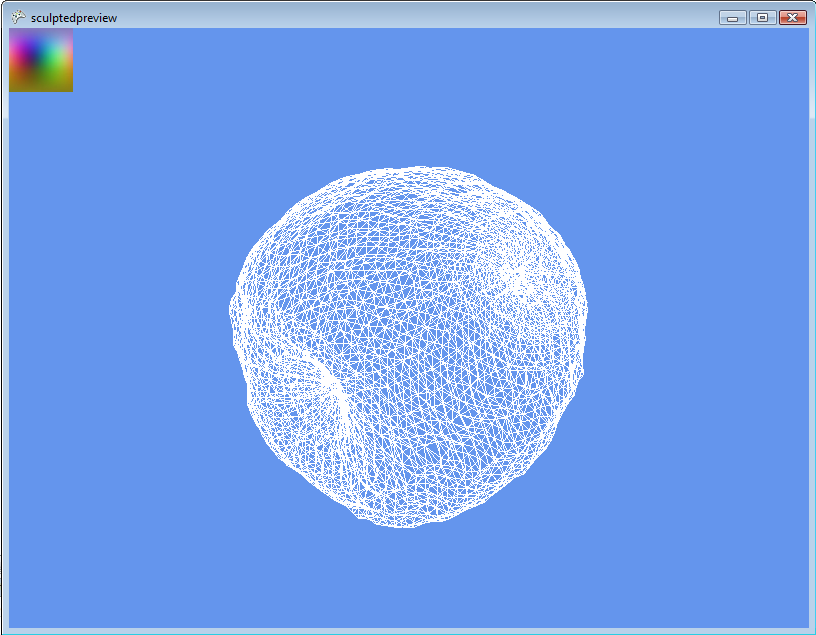

An apple sculpt shown in the sculptedpreview program

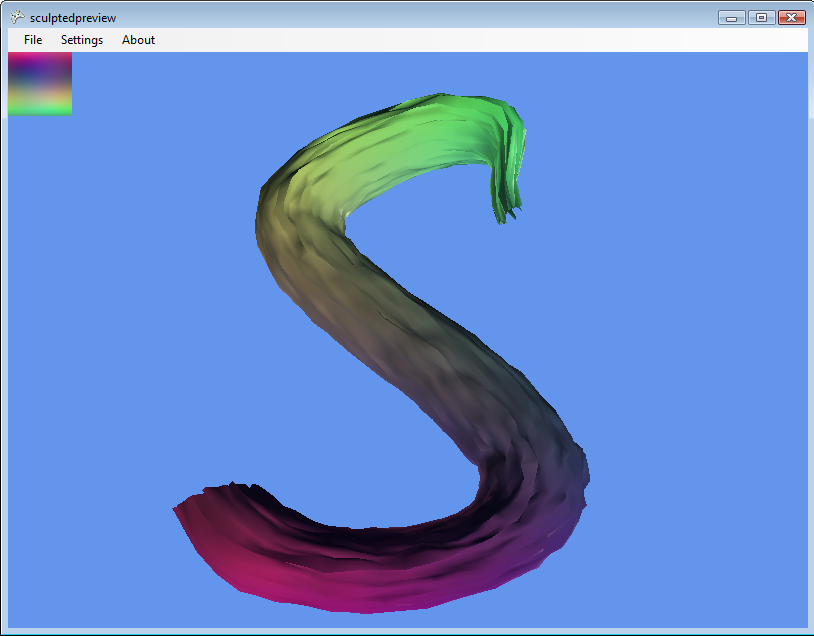

An S-shaped sculpture shown in the latest version of sculptedpreview, with the sculpture map also applied as the texture map

The following C++ program writes the file "testsculpture.tga". The generated sculpture image can be used to understand the stitching types and which pixels they use: view a prim in wireframe mode with this image applied both as the sculpt map and as the texture, and change the stitching type.

<lsl>

// Test program for sculptures -- Aleric Inglewood, Feb 2009.
// Writes a 64x64, 24-bit uncompressed TGA whose red and green channels encode
// the pixel position and whose blue channel marks the pixels each stitching
// type samples.

#include <cmath>
#include <cstddef>
#include <cstring>
#include <fstream>
#include <stdint.h>
#include <vector>

// TGA header definition (18 bytes; multi-byte fields are little-endian).
#pragma pack(push, 1) // Pack structure byte aligned.
struct tga_header
{
  uint8_t id_length;             // Image id field length.
  uint8_t colour_map_type;       // Colour map type.
  uint8_t image_type;            // Image type.
  uint16_t colour_map_index;     // First entry index.
  uint16_t colour_map_length;    // Colour map length.
  uint8_t colour_map_entry_size; // Colour map entry size.
  uint16_t x_origin;             // x origin of image.
  uint16_t y_origin;             // y origin of image.
  uint16_t image_width;          // Image width.
  uint16_t image_height;         // Image height.
  uint8_t pixel_depth;           // Pixel depth.
  uint8_t image_desc;            // Image descriptor.
};
#pragma pack(pop)

int main()
{
  int const width = 64;
  int const height = 64;
  int const bpp = 24;
  float const scale = 255.0f;

  std::size_t filesize = sizeof(tga_header) + width * height * bpp / 8;
  std::vector<uint8_t> tga_buf(filesize);

  tga_header tga;
  std::memset(&tga, 0, sizeof(tga_header));
  tga.pixel_depth = bpp;
  tga.image_width = width;
  tga.image_height = height;
  tga.image_type = 2; // Uncompressed true-colour.
  tga.image_desc = 0; // No alpha bits; origin bottom-left, so y == 0 is the bottom row.
  std::memcpy(tga_buf.data(), &tga, sizeof(tga_header)); // Assumes a little-endian host.

  uint8_t* out = tga_buf.data() + sizeof(tga_header);

  for (int y = 0; y < height; ++y)
  {
    for (int x = 0; x < width; ++x)
    {
      double r = (double)x / (width - 1);
      double g = (double)y / (height - 1);
      double b = 1.0;

      // Pixels in odd columns or rows are not sampled at the highest LOD...
      if (x % 2 == 1)
        b = 0.5;
      if (y % 2 == 1)
        b = 0.5;

      // ...except these (depending on the stitching type).
      if (x == width - 1)
        b = 0.98; // plane.
      if (y == height - 1)
        b = 0.98; // plane and cylinder.

      // In the spherical stitching, these two are the poles.
      if (x == width / 2 && (y == 0 || y == height - 1))
        b = 0.7;

      // TGA stores pixels as BGR.
      *out++ = (uint8_t)std::round(b * scale);
      *out++ = (uint8_t)std::round(g * scale);
      *out++ = (uint8_t)std::round(r * scale);
    }
  }

  // Binary mode: text mode would corrupt the data on Windows.
  std::ofstream file("testsculpture.tga", std::ios::binary);
  file.write(reinterpret_cast<char const*>(tga_buf.data()), filesize);
  return 0;
}

</lsl>